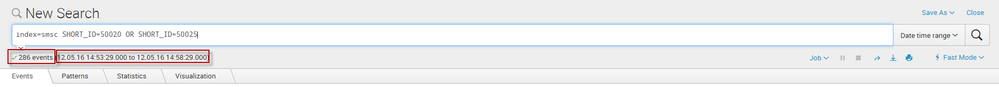

Why is a triggered alert reporting 286 events (<300), but I see 2444 events when I refresh the search?

Hi

I created a simple alert which is triggered when the number of results is less than 300 events in 5 minutes. I received an email saying the alert was triggered. When I click on the link "View result", I see 286 events.

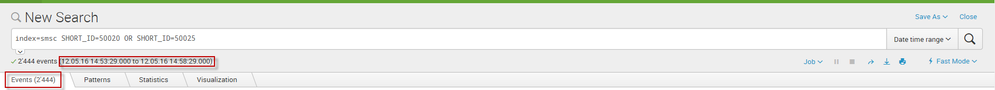

But if I refresh the search command, I find 2444 events.

My search: index=xxx SHORT_ID=yyy OR SHORT_ID=zzz

My alert: real-time, triggered when the number of results is less than 300 in 5 minutes

Is it because Splunk didn't finish indexing the logs that my alert reports only 286 events? How can I fix the problem?

Thank you

The problem is that your alert is real-time and there is latency in the delivery of your events into Splunk. At the time the alert fired, some events which will eventually fall into the real-time window HAVE NOT ARRIVED INTO SPLUNK YET. This is one of MANY reasons not to ever use real-time. If you run your search every 2 minutes from -10m@m to -5m@m, you will have a nice alert that performs WAY better resource-wise and also is FAR more accurate. The amount of time to "wait" (in this case 5 minutes) depends on the latency of your events, which can be calculated like this:

index=smsc | eval lagSecs = _indextime - _time | stats min(lagSecs) max(lagSecs) avg(lagSecs) by host source
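
To make the effect concrete, here is a rough Python sketch (a toy model, not Splunk code) of why the count at alert time can be lower than the count after a refresh. The event data, the 400-second delay, and the names `Event` and `visible_count` are all made up for illustration.

```python
from dataclasses import dataclass


@dataclass
class Event:
    event_time: int  # seconds; plays the role of _time
    index_time: int  # seconds; plays the role of _indextime


def visible_count(events, window_start, window_end, as_of):
    """Count events whose timestamp falls in [window_start, window_end)
    and that have already been indexed at wall-clock time `as_of`."""
    return sum(
        1
        for e in events
        if window_start <= e.event_time < window_end and e.index_time <= as_of
    )


# Hypothetical data: one event per second for 5 minutes (300 events).
# Every third event is delayed by 400 s; the rest arrive after 2 s.
events = [
    Event(event_time=t, index_time=t + (400 if t % 3 == 0 else 2))
    for t in range(300)
]

alert_time = 300  # the moment the 5-minute window closes
at_alert = visible_count(events, 0, 300, as_of=alert_time)
after_refresh = visible_count(events, 0, 300, as_of=alert_time + 600)
print(at_alert, after_refresh)
```

The first number is what a check at the end of the window would see; the second is what the same window contains once the late events have been indexed. Delaying the window by more than the worst-case lag makes the two agree.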

You might be on to something with regard to indexing lag. Try these searches and see if your lag is greater than a couple of minutes.

* | eval lag_sec=_indextime-_time | timechart span=1h min(lag_sec) avg(lag_sec) max(lag_sec) by source

* | eval lag_sec=_indextime-_time | stats min(lag_sec) avg(lag_sec) max(lag_sec) by host

If it is, then you need to put some sort of trailing data range on your command versus the realtime search... something like this:

... _index_earliest=-15m@m _index_latest=-10m@m ... which would snap to the minute and look at the count of events that came in between 15 and 10 minutes ago.

That or figure out what is causing the lag, and address it... like maybe you need more indexers to handle the load, maybe the storage is too slow, maybe network issues, etc.
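
For anyone unsure what "snap to the minute" means for a window like -15m@m to -10m@m, here is a small Python sketch of the arithmetic: subtract the offset, then round down to the start of the minute. The function name `snapped_window` and the example timestamp are hypothetical, and this only models the time math, not how Splunk evaluates the search.

```python
from datetime import datetime, timedelta


def snapped_window(now, earliest_min, latest_min):
    """Return (start, end) for a window such as -15m@m to -10m@m."""

    def snap(offset_min):
        # Go back `offset_min` minutes, then drop seconds and microseconds.
        t = now - timedelta(minutes=offset_min)
        return t.replace(second=0, microsecond=0)

    return snap(earliest_min), snap(latest_min)


now = datetime(2024, 1, 1, 12, 34, 56)  # arbitrary example time
start, end = snapped_window(now, 15, 10)
print(start.time(), end.time())
```

Because both ends are snapped, every run covers whole minutes, which keeps consecutive scheduled runs from slicing a minute in half.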

I'm sorry, but the behavior implied here is clearly a bug. If Splunk is querying for realtime alerts by using the timestamp indicated in the logs instead of the timestamp when Splunk received/recorded the event, then it's not really "Realtime."

When users say realtime, we mean we want to know if an event matches an alert condition the moment Splunk knows, not the moment Splunk would know if we lived in some imaginary world where all events arrive in the order they happen, which I'm going to go ahead and call "Faketime."

Hi!

Thank you for your answers! Indeed, some hosts have a latency of 500 seconds, so I will run my search between -15m and -10m.

If I understood correctly, I shouldn't use "Real Time" alerts? I should use "Run on Cron Schedule" instead, every 2 minutes for example, right?

index=xxx (SHORT_ID=yyy OR SHORT_ID=zzz) _index_earliest=-15m@m _index_latest=-10m@m

When I create the scheduled alert, there are also "earliest" and "latest" fields. Should I use these fields instead of putting _index_earliest and _index_latest in the search itself?

Thank you

It depends on whether you want to filter on the timestamp of the event (earliest/latest) or on when it was actually indexed (_index_earliest/_index_latest). Your choice... And yes, realtime searches are for sales demos; they're not very practical in real life unless you're watching your data flow in during development, etc.
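
To illustrate the difference between the two choices, here is a simplified Python model (not Splunk code) that counts the same events once by event timestamp and once by index time. The three sample events and the helper `count_by` are invented for this example.

```python
def count_by(events, field, start, end):
    """Count events whose `field` value falls in [start, end)."""
    return sum(1 for e in events if start <= e[field] < end)


# Hypothetical events, times in seconds.
events = [
    {"_time": 100, "_indextime": 102},
    {"_time": 110, "_indextime": 610},  # arrived 500 s late
    {"_time": 590, "_indextime": 600},
]

by_event_time = count_by(events, "_time", 0, 300)
by_index_time = count_by(events, "_indextime", 0, 300)
print(by_event_time, by_index_time)
```

The late event belongs to the window when you filter by its own timestamp but not when you filter by arrival time, so the two counts differ whenever lag pushes an event across a window boundary.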