Hi everyone,

I have been facing a weird problem with our alerts.

Basically, we have an alert that triggers when the logs contain errors. The search looks like this:

```
index=[Index] _index_earliest=-15m earliest=-15m
(host=[Hostname]) AND (level=ERR OR tag IN (error) OR ERR)
```

We have an alert action set up to send a message to Teams when it triggers.

The weird thing is that the alert doesn't trigger, but the same search still matches events when I run it manually. For example, in the past 24 hours the search matches 50 events, but no alerts were triggered.

When I searched the internal logs, I found that the search was dispatched successfully but showed:

`result_count=0, alert_actions=""`

It looks like the scheduled search never picked up the events needed to trigger an alert, even though my manual search finds them. Has anyone had a similar problem before?

Much appreciated

@freddy_Guo - One possible reason I can think of is an incorrectly extracted timestamp, or a large difference between the event timestamp and the ingest (index) time.

```
index=[Index] _index_earliest=-15m
| eval indextime=strftime(_indextime, "%F %T")
| eval diff_in_min=round((_indextime-_time)/60, 2)
| table _time, indextime, diff_in_min
```

Run the above search to find out how big the difference is between the index time and the event timestamp.

I hope this helps!!! Upvote would be appreciated!!
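For anyone reading along, the same arithmetic can be sketched outside Splunk. This is a minimal Python sketch assuming you have exported `_time` and `_indextime` as epoch seconds (the sample values and the helper names `lag_minutes` and `events_too_late` are made up for illustration). It computes the lag in minutes the same way as the `eval`, and lists events whose lag is more than 15 minutes: by the time such an event is indexed, its `_time` is already older than `earliest=-15m`, so no scheduled run with that setting can return it.

```python
from typing import List, Tuple

# Width of the alert's event-time window (earliest=-15m), in minutes.
EARLIEST_WINDOW_MIN = 15


def lag_minutes(event_time: float, index_time: float) -> float:
    """Mirror of: eval diff_in_min=round((_indextime-_time)/60, 2)."""
    return round((index_time - event_time) / 60, 2)


def events_too_late(events: List[Tuple[float, float]],
                    window_min: float = EARLIEST_WINDOW_MIN
                    ) -> List[Tuple[float, float, float]]:
    """Return (event_time, index_time, lag) for events indexed more than
    `window_min` minutes after their timestamp. These are already older than
    earliest=-<window_min>m when they become searchable."""
    late = []
    for event_time, index_time in events:
        lag = lag_minutes(event_time, index_time)
        if lag > window_min:
            late.append((event_time, index_time, lag))
    return late


if __name__ == "__main__":
    # Hypothetical (_time, _indextime) pairs in epoch seconds.
    sample = [
        (1_700_000_000, 1_700_000_030),  # indexed 0.5 min after its timestamp
        (1_700_000_000, 1_700_001_500),  # indexed 25 min after its timestamp
    ]
    for row in events_too_late(sample):
        print(row)  # (1700000000, 1700001500, 25.0)
```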

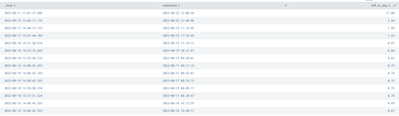

Thank you so much for your answer! I just ran the search and did a quick sort.

It looks like we are getting some indexing delay, as you can see in the screenshot.

Would you mind sharing how to fix this, please?

I would suggest updating the query like this to handle ingestion delay:

```
index=[Index] _index_earliest=-15m earliest=-1h
(host=[Hostname]) AND (level=ERR OR tag IN (error) OR ERR)
```

I hope this helps!!! Upvote would be appreciated!!
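Why widening `earliest` helps can be shown with a small simulation. It assumes, hypothetically, that the alert runs every 15 minutes (the schedule isn't stated in the thread) and uses a simplified model in which a run returns an event when its `_time` falls within `earliest` and its `_indextime` falls within `_index_earliest`, both windows ending at the run time. The helpers `matches` and `alerted` are illustrative names only. An event indexed 20 minutes after its timestamp is missed with `earliest=-15m` but caught with `earliest=-1h`.

```python
MIN = 60  # seconds per minute


def matches(event_time, index_time, run_time, earliest_min, index_earliest_min):
    """True if a run at `run_time` would return the event under
    earliest=-<earliest_min>m and _index_earliest=-<index_earliest_min>m.
    Simplified model: both windows end at the run time."""
    in_event_window = run_time - earliest_min * MIN <= event_time <= run_time
    in_index_window = run_time - index_earliest_min * MIN <= index_time <= run_time
    return in_event_window and in_index_window


def alerted(event_time, index_time, earliest_min, index_earliest_min,
            interval_min=15, horizon_min=24 * 60):
    """Does any scheduled run (every `interval_min` minutes from time 0,
    for `horizon_min` minutes) return the event?"""
    for run_time in range(0, horizon_min * MIN + 1, interval_min * MIN):
        if matches(event_time, index_time, run_time, earliest_min, index_earliest_min):
            return True
    return False


if __name__ == "__main__":
    event_time = 100 * MIN              # hypothetical timestamp (6000 s)
    index_time = event_time + 20 * MIN  # indexed 20 minutes late
    print(alerted(event_time, index_time, earliest_min=15, index_earliest_min=15))  # False
    print(alerted(event_time, index_time, earliest_min=60, index_earliest_min=15))  # True
    # Indexed about 50 minutes late, just after a run: still missed with -1h.
    print(alerted(event_time, event_time + 50 * MIN + 1,
                  earliest_min=60, index_earliest_min=15))  # False
```

Under this model, with a 15-minute schedule and `_index_earliest=-15m`, `earliest=-1h` only covers lags up to roughly 45 minutes, so events delayed longer than that can still be missed.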

Hi Vatsal,

Thanks for the response!

I have updated the alert to use `_index_earliest=-15m earliest=-1h`, but by the look of it that doesn't solve the problem. It's still not triggering.

@freddy_Guo - Run this to find out the largest time difference:

```
index=[Index]
(host=[Hostname]) AND (level=ERR OR tag IN (error) OR ERR)
| eval indextime=strftime(_indextime, "%F %T")
| eval diff_in_min=round((_indextime-_time)/60, 2)
| table _time, indextime, diff_in_min
| sort - diff_in_min
```

Run this over the last 24 hours.

Below is a screenshot of the last 24 hours.

Actually, I found something interesting. Yesterday I updated `_index_earliest` from -15m to -30m, and the alert seems to have been triggering correctly since last night. I'm confused about how that works.

```
index=[index] [search logic] _index_earliest=-30m earliest=-1h
```
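A general rule that may explain part of this: for every indexed event to be searched at least once, the `_index_earliest` window has to be at least as long as the interval between scheduled runs. The thread doesn't say how often this alert runs; if, hypothetically, it ran every 30 minutes, `_index_earliest=-15m` would skip half of the index time, while `-30m` would cover all of it. This Python sketch (same simplified model: each run searches the index-time window ending at the run time; `uncovered_gaps` is an illustrative name) lists the stretches of index time that no run covers.

```python
from typing import List, Tuple

MIN = 60  # seconds per minute


def uncovered_gaps(run_times: List[int], index_earliest_min: int) -> List[Tuple[int, int]]:
    """Return stretches of index time, between the first and last run, that
    no run's window [run - index_earliest, run] covers. Events indexed in a
    gap are never returned by any run, no matter how wide `earliest` is."""
    windows = [(t - index_earliest_min * MIN, t) for t in sorted(run_times)]
    gaps = []
    covered_until = windows[0][1]
    for start, end in windows[1:]:
        if start > covered_until:
            gaps.append((covered_until, start))
        covered_until = max(covered_until, end)
    return gaps


if __name__ == "__main__":
    # Hypothetical schedule: a run every 30 minutes (times in seconds).
    runs = [i * 30 * MIN for i in range(4)]
    print(uncovered_gaps(runs, index_earliest_min=15))  # [(0, 900), (1800, 2700), (3600, 4500)]
    print(uncovered_gaps(runs, index_earliest_min=30))  # []
```

Widening `earliest` cannot close these gaps, which would be consistent with `earliest=-1h` alone not fixing the alert. A window longer than the interval also avoids gaps, but overlapping runs may then alert twice on the same event.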

@VatsalJagani Hi man, thank you so much for your help so far.

I have another example: a different alert that's having a similar issue. Its search logic is even simpler, something like this:

```
index=[DefenderATPIndex] sourcetype="ms:defender:atp:alerts" _index_earliest=-5m earliest=-7d
```

I also ran a search like this over the past 15 days:

```
index=[DefenderATPIndex] sourcetype="ms:defender:atp:alerts"
| eval indextime=strftime(_indextime, "%F %T")
| eval diff_in_day=round((_indextime-_time)/60/60/24, 2)
| table _time, indextime, diff_in_day
| sort - diff_in_day
```

I found this:

If you have any suggestions for this one too, that would be fantastic.

Thank you sooo much.

@freddy_Guo - This data source has a delay, but the following settings in your query should handle it properly:

- `_index_earliest=-5m earliest=-7d`
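Under a simplified model (each run searches `_time` within `earliest` and `_indextime` within `_index_earliest`, both windows ending at the run time), the largest indexing lag that is still guaranteed to be caught is `earliest` minus the run interval, provided the interval is no longer than the `_index_earliest` window. The Defender alert's schedule isn't stated, so this sketch assumes, hypothetically, a run every 5 minutes; `guaranteed_max_lag` is an illustrative name.

```python
from datetime import timedelta


def guaranteed_max_lag(earliest: timedelta, index_earliest: timedelta,
                       interval: timedelta) -> timedelta:
    """Largest indexing lag (_indextime - _time) still guaranteed to be
    returned by some scheduled run.

    Simplified model: runs happen every `interval`; each run searches _time in
    [run - earliest, run] and _indextime in [run - index_earliest, run].
    """
    if interval > index_earliest:
        # Index-time windows of consecutive runs would not touch,
        # so some indexed events would never be searched at all.
        raise ValueError("interval is longer than the _index_earliest window")
    # The first run after an event is indexed happens at most `interval` later,
    # and at that run the event's _time must still be inside `earliest`.
    return earliest - interval


if __name__ == "__main__":
    # _index_earliest=-5m earliest=-7d, assumed to run every 5 minutes.
    print(guaranteed_max_lag(timedelta(days=7), timedelta(minutes=5),
                             timedelta(minutes=5)))  # 6 days, 23:55:00
```

The same formula gives about 45 minutes for `earliest=-1h` with a 15-minute schedule and `_index_earliest=-15m`.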

Please also look at other areas which could have issues:

- Check whether the alert is triggering or not:
  - `index=_internal sourcetype=scheduler "<alert name or some keywords>" result_count=*`
- If the alert has results and triggered an email or other alert action, please check whether there was any issue sending an email or notification:
  - `index=_internal (source=*splunkd.log OR source=*python.log)`
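If you export the scheduler events to inspect them outside Splunk, the fields of interest are plain `key=value` pairs. This Python sketch pulls them out of a line and flags successful runs that returned nothing and fired no action. The sample line is a hypothetical, abbreviated example built from the fields discussed in this thread (`result_count`, `alert_actions`) plus `savedsearch_name` and `status`; real scheduler log lines carry more fields, and the helper names are illustrative.

```python
import re
from typing import Dict

# key=value or key="quoted value" pairs, separated by commas/whitespace.
PAIR_RE = re.compile(r'(\w+)=("[^"]*"|[^,\s]*)')


def parse_pairs(line: str) -> Dict[str, str]:
    """Extract key=value pairs from a log line, stripping surrounding quotes."""
    return {key: value.strip('"') for key, value in PAIR_RE.findall(line)}


def ran_but_found_nothing(line: str) -> bool:
    """True for a successful run that returned no results and fired no action."""
    fields = parse_pairs(line)
    return (fields.get("status") == "success"
            and fields.get("result_count") == "0"
            and fields.get("alert_actions", "") == "")


if __name__ == "__main__":
    # Hypothetical, abbreviated scheduler log line.
    sample = ('savedsearch_name="Error alert", status=success, '
              'result_count=0, alert_actions=""')
    print(parse_pairs(sample))
    print(ran_but_found_nothing(sample))  # True
```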

I hope this helps!!!

Thank you so much for your help and looking into the problem. We have finally resolved the issue. It's a real odd one.

@freddy_Guo - Indeed an odd issue.

I'm glad you were able to figure it out and resolve it!!!

I'm very grateful that you spent your time helping me as well.

Thank you.