- Why am I receiving too many Splunk logs on audit.l...

Hi everyone!

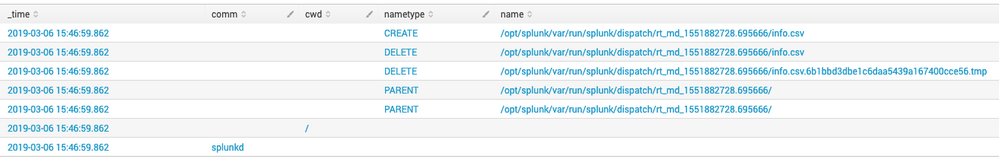

I logged into my search head and found that the main indexer was at 98% of its total capacity, so I started looking for which host/sourcetype was causing this. I found that the search head itself was indexing too many events from audit.log (it has the splunk_TA_nix add-on installed). Some examples are:

type=PATH msg=audit(1551884420.778:495054861): item=4 name="/opt/splunk/var/run/splunk/dispatch/scheduler_[some_data]__search__[some_data]/[some_file_name].csv" inode=35130128 dev=ca:60 mode=0100600 ouid=0 ogid=0 rdev=00:00 nametype=CREATE

type=PATH msg=audit(1551884420.778:495054861): item=3 name="/opt/splunk/var/run/splunk/dispatch/scheduler_[some_data]___search__[some_data]__at_1551884400_30928/[some_file_name]_.csv" inode=35129880 dev=ca:60 mode=0100600 ouid=0 ogid=0 rdev=00:00 nametype=DELETE

So I ran the following search to check which services were generating these logs:

index=main host="MY_SEARCH_HEAD" source="/var/log/audit/audit.log"

| eval PATH=case(name LIKE "%/opt/splunk/%", "OPT Splunk", name LIKE "%/volr/splunk%", "Volr Splunk")

| stats count by PATH

| eventstats sum(count) as perc

| eval perc=round(count*100/perc,2)

The result is the following:

PATH: OPT Splunk Count: 2749262+ perc: 75%

PATH: Volr Splunk Count: 2749262+ perc: 24%

Why are these logs being generated, and how can I avoid overloading my indexer?

Thanks for reading!

The issue you have is this.

You have installed the ta-nix app, which monitors audit events on your Splunk server.

When a file is modified on the Splunk server, an event is generated in audit.log.

Splunk then indexes audit.log and writes the result to the Splunk index, in /opt/splunk/var/....

This in turn generates a new event in audit.log which, you guessed it, gets indexed and written, and triggers another event in audit.log.

As well as writing index files, your searches are also creating objects under the same path, so your Splunk server is eating itself!

Two solutions:

A.) Reconfigure the auditd service to ignore changes in the /opt/splunk/var/ paths.

B.) Configure the ta-nix app to ignore audit.log on Splunk servers.
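
For option B, one possible sketch is a local override of the add-on's file monitor input. The stanza name `[monitor:///var/log]` and the file location below are assumptions, not something confirmed in this thread. Check which input actually collects /var/log/audit/audit.log on your instance (for example with `splunk btool inputs list --debug`) and adjust the stanza to match.

```ini
# $SPLUNK_HOME/etc/apps/Splunk_TA_nix/local/inputs.conf  (assumed location)
# Assumption: audit.log is picked up by the add-on's recursive /var/log monitor.
[monitor:///var/log]
# Skip any monitored file whose full path contains /audit/audit.log,
# which also covers rotated copies such as audit.log.1.
# A blacklist set here replaces the one in default/inputs.conf, so merge in
# any patterns from the default value that you still need.
blacklist = /audit/audit\.log
```

Splunk needs a restart for the change to take effect. Note that this stops collection of audit.log on that host entirely, not only the events under the Splunk paths.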

This is becoming nasty... I found that CSVs are not the only files involved. The purpose of audit.log is to log every user action (and system actions as well), so I believe the best solution to this problem is to exclude these events in audit.log from being indexed.
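
Excluding specific events at index time, as described here, is typically done by routing them to Splunk's `nullQueue` with a props.conf/transforms.conf pair. The following is a minimal sketch under assumptions: the transform name `drop_splunk_dispatch_audit` is hypothetical, and the regex only covers the dispatch paths shown in the sample events earlier in the thread, so it would need widening for other files.

```ini
# props.conf (on the instance that parses the data; here the single SH/indexer)
# Apply the transform only to events read from this source.
[source::/var/log/audit/audit.log]
TRANSFORMS-drop_splunk_audit = drop_splunk_dispatch_audit

# transforms.conf
# Hypothetical transform name; any event matching REGEX is sent to the
# nullQueue and is therefore discarded before indexing.
[drop_splunk_dispatch_audit]
REGEX = name="/opt/splunk/var/run/splunk/dispatch/
DEST_KEY = queue
FORMAT = nullQueue
```

Events dropped this way are never written to the index. auditd still writes them to audit.log on disk, so this reduces indexing volume but not the audit logging itself.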

I have a single instance that acts as both indexer and search head, and in this case the events come from that same search head. So I would like to know what kind of events the ones I shared are, and how I can exclude them from being indexed. I also need to know what caused this amount of data to start being indexed. Maybe debug mode or something?

I assume you have a single instance acting as SH and indexer. You would need to look at all data sources sending data to 'index=main' and the frequency of the data (e.g. the interval parameter in inputs.conf). Adjusting those inputs to generate fewer events could reduce the events/sec indexed.

Additionally, you would also need to set up a retention policy/size limit on your 'main' and other indexes, so you do not run out of disk space.

https://docs.splunk.com/Documentation/Splunk/7.2.4/Indexer/Setaretirementandarchivingpolicy
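
As a rough illustration of such a retention policy, the two settings usually involved are `frozenTimePeriodInSecs` (age limit) and `maxTotalDataSizeMB` (size limit) in indexes.conf. The values and file location below are placeholders chosen for the example, not recommendations; pick them according to your own disk size and retention needs.

```ini
# $SPLUNK_HOME/etc/system/local/indexes.conf  (assumed location)
[main]
# Freeze buckets once their newest event is older than 90 days (value in seconds).
# Frozen data is deleted unless an archive directory or script is configured.
frozenTimePeriodInSecs = 7776000
# Cap the total size of the index at roughly 100 GB; the oldest buckets are
# frozen first when the cap is exceeded.
maxTotalDataSizeMB = 100000
```

Whichever limit is reached first triggers freezing, so the size cap acts as a safety net if event volume spikes again.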