- Re: How to compare current data with data from 24 ...

I want to create a Splunk alert that compares current data with yesterday's data for the same time period. For example, today at 1:00 PM would be compared with yesterday at 1:00 PM.

Then I want to calculate the change percent between those two results and trigger the alert if it exceeds a certain threshold (say X).

For example, today's error count at hour x is 500 and yesterday's error count at hour x was 300: change percent = (500 - 300) × 0.01 = 2%.

If the threshold is set to 1%, the alert is triggered.

Any help would be appreciated.

thanks

This should do it:

yoursearchhere earliest=-25h@h latest=@h
| eval WhichDay = case(_time>=relative_time(now(),"-1h@h"),"Today",
    _time<relative_time(now(),"-24h@h"),"Yesterday",
    1==1,null())
| stats count(eval(WhichDay=="Today")) as Today count(eval(WhichDay=="Yesterday")) as Yesterday
| eval ChangePercent = (Today - Yesterday) / 100

Here is an example of how the times work:

The search starts at 2:07 - this is the value of now()

@h = 2:00 - the end of today's hour

-1h@h = 1:00 - the beginning of today's hour

-24h@h = yesterday at 2:00 - the end of yesterday's hour

-25h@h = yesterday at 1:00 - the beginning of yesterday's hour

_time = the timestamp of the event
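
The ChangePercent in the search above follows the arithmetic in the question (the difference divided by 100). If a change relative to yesterday's count is wanted instead, a minimal sketch could look like this. The threshold of 1, the rounding, and the use of abs() to catch drops as well as rises are assumptions and not part of the original answer:

```spl
yoursearchhere earliest=-25h@h latest=@h
| eval WhichDay = case(_time>=relative_time(now(),"-1h@h"),"Today",
    _time<relative_time(now(),"-24h@h"),"Yesterday")
| stats count(eval(WhichDay=="Today")) as Today count(eval(WhichDay=="Yesterday")) as Yesterday
| eval ChangePercent = if(Yesterday==0, null(), round((Today - Yesterday) / Yesterday * 100, 2))
| where abs(ChangePercent) > 1
```

With 500 errors today and 300 yesterday, this version yields 66.67 rather than 2. Because the final where only keeps the row when the threshold is exceeded, the alert can be set to trigger when the number of results is greater than zero. When Yesterday is 0 the percentage is left null, so no row is returned and the alert does not fire.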

Couldn't this be more efficient with appendcols? I have a hard time believing the inefficiency of a subsearch will eclipse the inefficiency of returning 25 hours' worth of records and only using 2 hours of them, especially if we're talking about a dataset with millions of events.

some search earliest=-1h@h latest=@h
| stats count as Today
| appendcols [search some search earliest=-25h@h latest=-24h@h
    | stats count as Yesterday ]
| eval ChangePercent = (Today - Yesterday) / 100
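
Another option that may avoid both the subsearch and the 25-hour scan is to put the two time ranges into a single search. This is only a sketch: it assumes that earliest/latest modifiers inside OR'd parenthesized groups are honored on your Splunk version, so it is worth comparing the counts against the appendcols version before relying on it:

```spl
some search (earliest=-1h@h latest=@h) OR (earliest=-25h@h latest=-24h@h)
| eval WhichDay = if(_time>=relative_time(now(),"-1h@h"),"Today","Yesterday")
| stats count(eval(WhichDay=="Today")) as Today count(eval(WhichDay=="Yesterday")) as Yesterday
| eval ChangePercent = (Today - Yesterday) / 100
```

Only events from the two one-hour windows are retrieved, so the eval just has to tell the windows apart.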

Hi @twinspop , @lguinn2 ,

What I want is: "If the current pendingMsg count is greater than or equal to the count from one hour ago, then display the queueName with the label 'Queue with no processing since last one hour'."

(Or, put another way, outTotalMsgs is the same in the current event and in the event from one hour ago.)

Example:

My basic search, with no dedup applied. For now I have restricted it to a single queueName:

..... | xmlkv | table _time, qName, pendingMsgCount, inTotalMsgs, outTotalMsgs

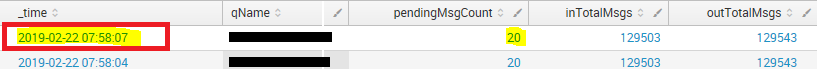

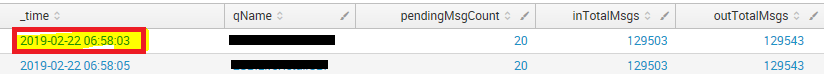

Timestamp (last 60 minutes) - (22/02/2019 06:58:00.000 to 22/02/2019 07:58:13.000)

Results : only one queueName (124 events)

first two :

last two :

So, for this queueName, the pendingMsgCount (or the outTotalMsgs count) is the same across the hour, and hence it should be displayed in the dashboard results as 'Queue with no processing since last one hour'.

I am not able to achieve this, please help!

Thanks in advance!
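
One possible way to express the requirement above, as an untested sketch: run the base search over the last 60 minutes and compare the first and last outTotalMsgs per queue. The field names come from the table in the post; firstOut, lastOut, and label are hypothetical names introduced here, and tonumber() is used on the assumption that xmlkv may extract the values as strings:

```spl
..... | xmlkv
| stats earliest(outTotalMsgs) as firstOut latest(outTotalMsgs) as lastOut by qName
| where tonumber(lastOut)==tonumber(firstOut)
| eval label="Queue with no processing since last one hour"
| table qName, label, firstOut, lastOut
```

The same pattern with pendingMsgCount and a >= comparison would cover the other form of the condition.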

I agree with that....

Thank you for the response.

When I ran this query, it showed data for today but nothing for yesterday.

thank you.

I just corrected a typo... sorry.