- Splunk Answers

- :

- Using Splunk

- :

- Splunk Search

- :

- Concatenating an Arbitrary Number of Fields into S...

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark Topic

- Subscribe to Topic

- Mute Topic

- Printer Friendly Page

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Concatenating an Arbitrary Number of Fields into Single Field

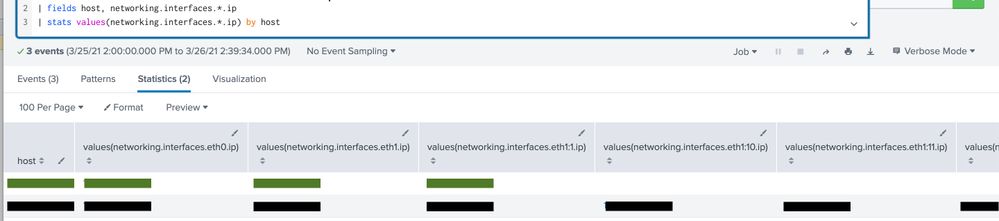

We log Puppet facts in a large JSON payload, and I want to combine the values of all fields matching a wildcarded expression into a single field to process further.

A given server may have any number of IP addresses associated with it, eg:

networking.interfaces.eth0.ip

networking.interfaces.eth1.ip

networking.interfaces.eth1:1.ip

networking.interfaces.eth1:10.ip

networking.interfaces.eth1:11.ip

networking.interfaces.eth1:12.ip

networking.interfaces.eth1:13.ip

networking.interfaces.eth1:14.ip

networking.interfaces.eth1:15.ip

networking.interfaces.eth1:16.ip

networking.interfaces.eth1:17.ip

networking.interfaces.eth1:2.ip

networking.interfaces.eth1:3.ip

networking.interfaces.eth1:4.ip

networking.interfaces.eth1:5.ip

networking.interfaces.eth1:6.ip

networking.interfaces.eth1:7.ip

networking.interfaces.eth1:8.ip

networking.interfaces.eth1:9.ip

Where each of these fields is an IP address, I'd like to append them into a single field; I've tried this with coalesce, but that doesn't support wildcards; mvappend... just doesn't seem to do anything, or perhaps I'm just using it incorrectly with foreach.

tl;dr: given a variable number of fields networking.interfaces.*.ip, how do I concatenate them all into delimited field ip_addresses, a la '10.11.12.13 | 10.11.12.14 | 10.11.12.15 | ...' ?

Thanks.

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

To paraphrase the great Eric Morecombe, you are playing all the right commands just not necessarily in the right order!

Try something like this:

| makeresults | eval _raw="networking.interfaces.eth0.ip

networking.interfaces.eth1.ip

networking.interfaces.eth1:1.ip

networking.interfaces.eth1:10.ip

networking.interfaces.eth1:11.ip

networking.interfaces.eth1:12.ip

networking.interfaces.eth1:13.ip

networking.interfaces.eth1:14.ip

networking.interfaces.eth1:15.ip

networking.interfaces.eth1:16.ip

networking.interfaces.eth1:17.ip

networking.interfaces.eth1:2.ip

networking.interfaces.eth1:3.ip

networking.interfaces.eth1:4.ip

networking.interfaces.eth1:5.ip

networking.interfaces.eth1:6.ip

networking.interfaces.eth1:7.ip

networking.interfaces.eth1:8.ip

networking.interfaces.eth1:9.ip"

| multikv noheader=t

| fields - _raw _time linecount

| eval ip=(random() % 255).".".(random() % 255).".".(random() % 255).".".(random() % 255)

| transpose 0 header_field=Column_1

| where column="ip"

| foreach *.ip [

eval allips=mvappend(allips,'<<FIELD>>') ]

| eval concat=mvjoin(allips," | ")

| fields concat allips- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

From your description, I had assumed that you already had the fields *.ip extracted. Perhaps if you shared some raw data and possibly the fields which have already been extracted, we might be able to help you more?

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

The fields are extracted; it's a JSON payload that Splunk parses just fine. It's the concatenation that's tripping me up, where new field ip_addresses = all matches of 'networking.interfaces.*.ip'

Rather like looping through the values of all keys in networking.interfaces.*.ip and appending them to a linear array.

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Again, I am guessing that you have used spath to get a full extraction of all the fields (or they have been extracted on indexing). Perhaps you need to break down the extraction to get networking.interfaces as a multivalue field, then you might be able to mvexpand that or possibly mvmap to extract the ip addresses. It would help to see an example of the JSON but if you can't do that, hopefully you get the gist of what I am suggesting

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I guess I'm confused by why it should be so easy to coalesce fields when there's a known and numerable range of keys, but it's seemingly non-trivial to handle a variable, wildcarded number of them. Surely there's a way to handle something like this inline?

Your tacit suggestion that this may be handled better further upstream does sound promising, though. You may have name-checked Morcambe, but you're clearly wise. 😉

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I am not sure why foreach doesn't work for you, but the null values will break mvappend which is probably why it didn't work.

How about this

| makeresults | eval _raw="networking.interfaces.eth0.ip

networking.interfaces.eth1.ip

networking.interfaces.eth1:1.ip

networking.interfaces.eth1:10.ip

networking.interfaces.eth1:11.ip

networking.interfaces.eth1:12.ip

networking.interfaces.eth1:13.ip

networking.interfaces.eth1:14.ip

networking.interfaces.eth1:15.ip

networking.interfaces.eth1:16.ip

networking.interfaces.eth1:17.ip

networking.interfaces.eth1:2.ip

networking.interfaces.eth1:3.ip

networking.interfaces.eth1:4.ip

networking.interfaces.eth1:5.ip

networking.interfaces.eth1:6.ip

networking.interfaces.eth1:7.ip

networking.interfaces.eth1:8.ip

networking.interfaces.eth1:9.ip"

| multikv noheader=t

| fields - _raw _time linecount

| eval servera=(random() % 255).".".(random() % 255).".".(random() % 255).".".(random() % 255)

| eval serverb=(random() % 255).".".(random() % 255).".".(random() % 255).".".(random() % 255)

| transpose 0 header_field=Column_1

| regex column="server"

| transpose 0 header_field=column

| streamstats count as row

| eval serverb=if(row < 10,serverb,null)

| fields - row

| transpose 0 header_field=column

| rename column as host

| transpose 0 header_field=host

| eval column="allips"

| stats values(*) as * by column

| foreach * [ eval <<FIELD>>=mvjoin('<<FIELD>>', " | ") ]

| transpose 0 header_field=column

| rename column as host