Is my current architectural design a legitimate de...

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark Topic

- Subscribe to Topic

- Mute Topic

- Printer Friendly Page

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi,

My company is planning to use Splunk in a small enterprise deployment.

I have already read a bit about scaling, infrastructure design, and the number of components.

I have been assigned the task of designing our deployment.

So I want to ask whether my thoughts so far make any sense.

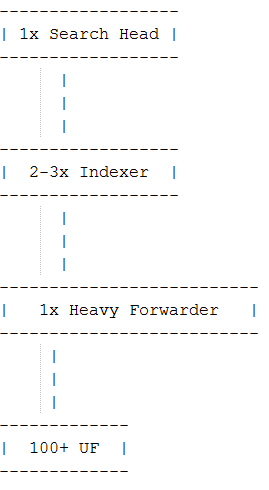

My plan is to build an infrastructure that looks like the attached picture.

I would use a Heavy Forwarder (HF) to filter incoming data before it gets indexed. I might not need this feature today, but I may need it later.

Is this a legit deployment?

Is it ok if I configure the Universal Forwarders to send data to the HF first?
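To make the question concrete, this is roughly the forwarding setup I have in mind on each Universal Forwarder. It is only a sketch: the group name `heavy_forwarder` and the host `hf.example.com` are placeholders, and 9997 is just the conventional receiving port, which I am assuming the HF would listen on.

```ini
# outputs.conf on each Universal Forwarder (placeholder names)
[tcpout]
defaultGroup = heavy_forwarder

[tcpout:heavy_forwarder]
# Hypothetical HF host; 9997 assumed as the receiving port
server = hf.example.com:9997
```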

Your design looks all right to me, but there are lots of other things you need to consider, such as:

- Number of end users (more users means more load on the search heads and therefore on the indexers)

- Volume of data you are ingesting every day

- Resiliency: if your SH is down you are blind, so what's your DR plan here? The same goes for the HF

- Physical location of your data

- etc.

If your budget is limited, and assuming you are indexing less than 200GB/day, I would do the following:

- Get rid of the HF. Your indexers can do the filtering too, and they also provide resiliency. Your HF is a single point of failure in your diagram

- Go for a virtual Search Head (make sure you allocate enough CPU cores and memory) and use your virtual infrastructure to provide backup and DR for this component
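As a rough illustration of filtering on the indexers instead of on an HF, something like the following could drop unwanted events at parse time by routing them to the null queue. Treat it as a sketch under assumptions: the source path, the stanza name `drop_debug_events`, and the regex are made up for the example and would need to match your own data.

```ini
# props.conf on the indexers (hypothetical source path)
[source::/var/log/example/app.log]
TRANSFORMS-drop_debug = drop_debug_events

# transforms.conf on the indexers
[drop_debug_events]
# Example pattern only: discard events containing DEBUG
REGEX = \sDEBUG\s
DEST_KEY = queue
FORMAT = nullQueue
```

With the HF removed, the Universal Forwarders would point straight at the indexers. Listing more than one indexer in the output group lets the forwarder load-balance across them, which is where the resiliency comes from. The host names below are placeholders, and 9997 is assumed as the receiving port.

```ini
# outputs.conf on each Universal Forwarder (placeholder hosts)
[tcpout]
defaultGroup = indexers

[tcpout:indexers]
server = idx1.example.com:9997, idx2.example.com:9997
```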

Hope that helps.

Thanks,

J

Is it a viable strategy to buy an ESX-Server and run all the components on a virtual server infrastructure?

Thank you all! 🙂