- 8.0.4.1 on Ubuntu 20.04LTS: Why are emails failing...

2020-07-07 21:45:15,136 +0000 ERROR sendemail:1435 - [HTTP 404] https://127.0.0.1:8089/servicesNS/admin/search/saved/searches/_new?output_mode=json

Traceback (most recent call last):

File "/opt/splunk/etc/apps/search/bin/sendemail.py", line 1428, in <module>

results = sendEmail(results, settings, keywords, argvals)

File "/opt/splunk/etc/apps/search/bin/sendemail.py", line 261, in sendEmail

responseHeaders, responseBody = simpleRequest(uri, method='GET', getargs={'output_mode':'json'}, sessionKey=sessionKey)

File "/opt/splunk/lib/python2.7/site-packages/splunk/rest/__init__.py", line 577, in simpleRequest

raise splunk.ResourceNotFound(uri)

ResourceNotFound: [HTTP 404] https://127.0.0.1:8089/servicesNS/admin/search/saved/searches/_new?output_mode=json

I appreciate this discussion! Discovered that indeed the Dev/Test license only allows one user login, which is the main admin account. If that admin account is given a username other than 'admin', Splunk will not send alerts. If you cat the passwd file for your instance (cat /opt/splunk/etc/passwd), you'll see your main username listed with a hashed password, and the Administrator defined as 'admin', not as the main admin user.

Splunk alerts are sent from the 'admin' administrator account, which apparently works on a non-Dev/Test license even if the admin account was set up with a name other than 'admin'.

Solution: edit the passwd file to change the name of your user account to 'admin', then restart Splunk.

Please note, I also discovered that if you delete the Dev/Test license and restart Splunk, Splunk will no longer recognize your admin account unless it is named 'admin'. In fact, it will say there are no users for this deployment and won't allow you to log out, add/remove/modify users, etc. Again, the issue can be resolved by updating the passwd file and restarting Splunk.

Yes, permissions can get pretty messy. This also happens if Splunk is normally run by the Splunk user but is then started from root (usually using sudo).

Agreed! I had reproduced the issue again on another instance and wanted to document the steps to changing ownership. It's a little different depending on whether the original search was shared or not.

Here's a recap of my findings:

- Alerts on Dev/Test licenses do work IF:

- The login account is named 'admin' (the Dev/Test license only allows one user account, and it must be named 'admin'); AND

- In Advanced Settings for the alert, the server is changed from 'localhost' to your organization's email server (for me, mail.splunk.com).

- To change the name of the login account to 'admin', update the passwd file to reference ":admin:" at the start of it instead of your original username (example: ":choppedliver:"), then restart Splunk.

- To copy the search history and saved searches / alerts over to the admin account:

- Copy the history and local directories from $SPLUNK_HOME/etc/users/<originalusername> over to $SPLUNK_HOME/etc/users/admin. You can also delete them from the <originalusername> folder if you wish. NOTE: this instruction assumes your admin account is fresh and doesn't already have a history or local directory. If you have been using the admin account, decide which search history you want to keep; combining them doesn't seem to work. If you have already created saved searches for the admin account, copy the contents of the local/savedsearches.conf file from your original user into the savedsearches.conf file that already exists for your admin account.

- For shared alerts, update the local.meta file to assign shared alerts from <originalusername> to admin ($SPLUNK_HOME/etc/apps/search/metadata/local.meta)

- Once again, restart Splunk for these changes to go into effect.
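The rename step in the recap can be sketched in Python. This is only a minimal sketch, assuming each entry in $SPLUNK_HOME/etc/passwd is a single colon-delimited line that begins with ":<username>:" as described above. The function name `rename_passwd_user` and the sample line are hypothetical, and the hash and trailing fields are placeholders. Stop Splunk and back up the file before trying anything like this on a real instance.

```python
def rename_passwd_user(line: str, old_name: str, new_name: str = "admin") -> str:
    """Return the passwd line with a leading ':old_name:' swapped for ':new_name:'."""
    prefix = f":{old_name}:"
    if not line.startswith(prefix):
        # Not the entry we are looking for, so leave it untouched.
        return line
    return f":{new_name}:" + line[len(prefix):]


# Hypothetical entry: the hash and the trailing fields are placeholders.
sample = ":choppedliver:<hashed-password>::Administrator:admin:::"
print(rename_passwd_user(sample, "choppedliver"))
```

Only the leading username field is touched, so the hashed password and the rest of the line pass through unchanged.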

@trashyroadz wrote

- In Advanced Settings for the alert, the server is changed from 'localhost' to your organization's email server (for me, mail.splunk.com).

I think this depends on your local email setup. In my case, my local machine is running its own mail server (Postfix) and has an upstream server that it talks to for delivery off the local machine. It works fine with either localhost or mymachine.example.com:25 in that field.

Hi,

This is likely a local issue so I would start with the most basic checks.

First try telnet 127.0.0.1 8089 and telnet localhost 8089 to see whether the Splunk management port is accessible. Then check the IP binding settings and adjust them if necessary.

https://docs.splunk.com/Documentation/Splunk/8.0.6/Admin/BindSplunktoanIP

You can also try to access that resource with curl, using the proper server IP, to see if you still get a 404. Something like this, probably (the URL is quoted so the shell does not interpret the "?"):

curl -k -u admin:pass "https://ServerIP:8089/servicesNS/admin/search/saved/searches/_new?output_mode=json"
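The same check can be sketched in Python with only the standard library. This is a rough sketch rather than anything Splunk ships: `probe_status` and `interpret` are hypothetical helper names, certificate verification is disabled to mirror `curl -k`, and the mapping of 401/403 to "reachable but rejected" is an assumption based on how the endpoint responds later in this thread.

```python
import base64
import ssl
import urllib.error
import urllib.request


def probe_status(url: str, user: str, password: str) -> int:
    """GET the URL with HTTP basic auth and return the status code."""
    # Mirror curl -k: do not verify the (usually self-signed) certificate.
    context = ssl.create_default_context()
    context.check_hostname = False
    context.verify_mode = ssl.CERT_NONE
    token = base64.b64encode(f"{user}:{password}".encode()).decode()
    request = urllib.request.Request(url, headers={"Authorization": f"Basic {token}"})
    try:
        with urllib.request.urlopen(request, context=context, timeout=10) as response:
            return response.status
    except urllib.error.HTTPError as err:
        # urllib raises for 4xx/5xx; the status code is still what we want.
        return err.code


def interpret(status: int) -> str:
    """Translate a status code into what it means for this investigation."""
    if status == 404:
        return "not found: the same failure sendemail.py reports"
    if status in (401, 403):
        return "endpoint reachable, but credentials or permissions were rejected"
    return f"HTTP {status}"


# Usage (needs a running instance; credentials are placeholders):
# url = "https://127.0.0.1:8089/servicesNS/admin/search/saved/searches/_new?output_mode=json"
# print(interpret(probe_status(url, "admin", "pass")))
```

The point of separating the two functions is that only a 404 reproduces the alert failure; any other status means the request reached splunkd and was answered.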

Hi @jorjiana88 ,

The splunk management port is accessible on 8089. Using curl, I get this:

{"messages":[{"type":"ERROR","text":"Action forbidden."}]}

To verify that the credentials were correct, I intentionally used the wrong password and then got this:

{"messages":[{"type":"ERROR","text":"Unauthorized"}]}

Were you able to reproduce the 404 with curl in any way? For example, when using the IP 127.0.0.1?

The issue is that Splunk is not able to access that URL, but with curl you were able to. How? Did you use a different IP, or a different user with different permissions than the one Splunk uses?

The "Action forbidden" and "Unauthorized" errors from curl are normal; we just wanted to find out whether you get a 404.

I was not able to get the 404 error. I used the exact curl command you suggested, going to 127.0.0.1 using the same userid/password that I use to log in. Splunk itself runs as root on my machine, so I can't use that for credentials, but the account that I did use does have full admin rights.

I know very little about how things work via the API. Should there always be something there to retrieve, or does that only happen for some period after the search/scheduled report runs?

What I understand from the debug logs is that the results file, which Splunk creates after the search completes, is not available, and that is why you are getting an error. Splunk has enforced Python 3 for its commands since Splunk Enterprise version 8, but I still see your sendemail command using python2.7.

Let me know if you still have the problem. I can simulate the issue.

If this helps, give a like below.

I do still have the issue @thambisetty.

@peterm30 mentioned that it might be a permissions issue, so I checked the directory where Splunk is installed. After a little digging, it looks like there are directories with results under $HOME/var/run/splunk/dispatch that are owned by root with permissions of 700, meaning the splunk user wouldn't be able to see the contents. I noticed files named results.csv.gz and, looking at them (as root), these seem to be the output of the searches.

If this is the issue, what is causing those to be written by root instead of splunk? Note that $HOME/var/run/splunk/dispatch is owned by splunk (not root).
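A quick way to list which entries under a directory are owned by someone other than the expected user is sketched below. It is an illustration only: `find_not_owned_by` is a hypothetical helper, and it would need to run as root to descend into the mode-700 directories described above, because `os.walk` silently skips directories it cannot read.

```python
import os
from typing import List


def find_not_owned_by(root: str, uid: int) -> List[str]:
    """Return every file and directory under root whose owner is not uid."""
    mismatched = []
    for dirpath, dirnames, filenames in os.walk(root):
        for name in dirnames + filenames:
            path = os.path.join(dirpath, name)
            # lstat so that symlinks are reported by their own owner.
            if os.lstat(path).st_uid != uid:
                mismatched.append(path)
    return sorted(mismatched)


# Usage (assumes a local "splunk" account and the dispatch path from this thread):
# import pwd
# splunk_uid = pwd.getpwnam("splunk").pw_uid
# for path in find_not_owned_by("/opt/splunk/var/run/splunk/dispatch", splunk_uid):
#     print(path)
```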

Thanks to both of you for getting back to me.

Just stop Splunk, run chown -R splunk:splunk /opt/splunk (or whatever your installation directory is), and then start Splunk as user splunk, unless you are using systemd.

r. Ismo

I've tried this as a solution without any success.

There are a large number of files under $SPLUNK_HOME owned by root, though not all.

On my machine, splunkd is running through systemd as root. Would I still need to change the ownership of those files?

My understanding is that Splunk 8 still uses Python 2 for some features, including those involved in this error (specifically, alert actions). https://docs.splunk.com/Documentation/Splunk/8.0.5/Python3Migration/ChangesEnterprise

That said, I'm no Splunk developer and might be interpreting that incorrectly. In the interest of troubleshooting I did change the default interpreter from 2.7 to 3.7 by setting "python.version = python3" in /opt/splunk/etc/system/local/server.conf and restarting Splunk. Re-running the same command resulted in nearly the same error, only with python3.7 being referenced.

2020-08-01 08:51:01,165 -0400 ERROR sendemail:1454 - [HTTP 404] https://127.0.0.1:8089/servicesNS/admin/search/saved/searches/_new?output_mode=json

Traceback (most recent call last):

File "/opt/splunk/etc/apps/search/bin/sendemail.py", line 1447, in <module>

results = sendEmail(results, settings, keywords, argvals)

File "/opt/splunk/etc/apps/search/bin/sendemail.py", line 262, in sendEmail

responseHeaders, responseBody = simpleRequest(uri, method='GET', getargs={'output_mode':'json'}, sessionKey=sessionKey)

File "/opt/splunk/lib/python3.7/site-packages/splunk/rest/__init__.py", line 577, in simpleRequest

raise splunk.ResourceNotFound(uri)

splunk.ResourceNotFound: [HTTP 404] https://127.0.0.1:8089/servicesNS/admin/search/saved/searches/_new?output_mode=json

I do see that it's looking for a results file that is not available. However, I don't understand how this could occur or where to start looking for the issue.

If I had to make a guess, my gut feeling is that it's a Linux file permissions issue somewhere: Splunk is trying to create a file in a location that it does not have write access to, the file is never created, and a subsequent attempt to read the file fails. I have absolutely nothing to base that on, though. It's just the only reason I can think of that Splunk would be unable to find a file that it should be creating.

I don't know if you've figured this out yet, but I'm running into the exact same problem, and your thread seems to be the only one with the exact same error message. I'm also using a Dev/Test license; however, I'm trying to send through a third-party mail provider (I tried two, in fact, in case that was the issue). I'm running Splunk 8.0.5 on Ubuntu 18.04.4.

2020-07-31 22:38:49,185 -0400 ERROR sendemail:1454 - [HTTP 404] https://127.0.0.1:8089/servicesNS/admin/search/saved/searches/_new?output_mode=json

Traceback (most recent call last):

File "/opt/splunk/etc/apps/search/bin/sendemail.py", line 1447, in <module>

results = sendEmail(results, settings, keywords, argvals)

File "/opt/splunk/etc/apps/search/bin/sendemail.py", line 262, in sendEmail

responseHeaders, responseBody = simpleRequest(uri, method='GET', getargs={'output_mode':'json'}, sessionKey=sessionKey)

File "/opt/splunk/lib/python2.7/site-packages/splunk/rest/__init__.py", line 577, in simpleRequest

raise splunk.ResourceNotFound(uri)

ResourceNotFound: [HTTP 404] https://127.0.0.1:8089/servicesNS/admin/search/saved/searches/_new?output_mode=json

Yes, I have a similar scenario / environment (Ubuntu Server 20.04, Splunk Enterprise 8.0.6, dev / test license, single user, etc) and problem too.

This is what I've found so far.

https://docs.splunk.com/Documentation/Splunk/8.0.6/Alert/Emailnotification constantly says that alerts should be created "in the Search and Reporting app" so I tried recreating mine there but doing so didn't make a difference.

According to https://docs.splunk.com/Documentation/Splunk/8.0.6/RESTUM/RESTusing#Namespace, the URI format is https://<host>:<mPort>/servicesNS/<user>/<app>/* and supports wildcards ("-").

In my case:

- I confirmed that command curl -k -u "<username>:<password>" https://127.0.0.1:8089/servicesNS/-/TA-<app name>/saved/searches/<alert name>?output_mode=json completed successfully, due to the wildcard.

- That explains the error because I don't have a user named admin so the URI literally doesn't exist. But why is that being used in the first place?
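To make the namespace point concrete, here is a small sketch that assembles the URI from its parts. `saved_search_uri` is a hypothetical helper that follows the https://<host>:<mPort>/servicesNS/<user>/<app>/* layout quoted above; it is not Splunk's own buildEndpoint, and the app name "TA-example" and alert name "My Alert" are made up.

```python
from urllib.parse import quote


def saved_search_uri(host: str, port: int, owner: str, app: str, name: str) -> str:
    """Build a saved-search URI in the servicesNS/<user>/<app> namespace."""
    # Percent-encode each path segment so names with spaces or '@' stay valid.
    segments = (owner, app, "saved", "searches", name)
    path = "/".join(quote(segment, safe="") for segment in segments)
    return f"https://{host}:{port}/servicesNS/{path}?output_mode=json"


# The URI from the error log: owner is the literal user "admin".
print(saved_search_uri("127.0.0.1", 8089, "admin", "search", "_new"))

# The wildcard owner "-" matches any user, which is why the curl test succeeded.
print(saved_search_uri("127.0.0.1", 8089, "-", "TA-example", "My Alert"))
```

With the owner fixed to "admin", the path only resolves if a user with that exact name exists, which matches the 404 seen when the account has been renamed.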

I read through /opt/splunk/etc/apps/search/bin/sendemail.py and, from lines 161 to 187, found that the URI is effectively built by splunk.entity.buildEndpoint(entityClass, namespace=settings['namespace'], owner=settings['owner']) for which I can't really find any documentation.

Modifying the Python script is far from ideal because (1) it causes file integrity check failures and (2) it'll probably get overwritten by updates in the future. However, for short-term diagnostic purposes, I added some logging to the script and found that, on line 1437, splunk.Intersplunk.getOrganizedResults() is executed which sets the value of settings (alert data) which includes 'owner': 'admin'. This explains the URI but is strange because the actual owner of the alert is me@mydomain.com.

I tried affecting this behaviour by modifying /opt/splunk/etc/apps/TA-<app name>/metadata/local.meta → [savedsearches/<alert name>] → owner:

- From me@mydomain.com to admin. This caused the alert to become orphaned.

- Removing it completely. This caused the owner to become nobody but did not change settings or, therefore, the generated URI.
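Before editing local.meta by hand, it can help to read back what owner a stanza currently has. The sketch below does that with Python's configparser, on the assumption that the relevant part of local.meta is plain "[stanza]" headers followed by "key = value" lines. The helper name `stanza_owner` and the sample text are hypothetical, and real .meta files may contain constructs (such as an empty "[]" stanza) that this parser will reject.

```python
import configparser
from typing import Optional


def stanza_owner(meta_text: str, stanza: str) -> Optional[str]:
    """Return the 'owner' value of a stanza in .meta-style text, or None."""
    # RawConfigParser: no '%' interpolation; split keys from values on '=' only.
    parser = configparser.RawConfigParser(delimiters=("=",), strict=False)
    parser.optionxform = str  # keep key case as written
    parser.read_string(meta_text)
    if not parser.has_section(stanza):
        return None
    return parser.get(stanza, "owner", fallback=None)


# Hypothetical local.meta content for illustration only.
sample = """
[savedsearches/My Alert]
owner = me@mydomain.com
version = 8.0.6
"""
print(stanza_owner(sample, "savedsearches/My Alert"))
```

Reading the value first makes it easier to confirm that the alert's stored owner differs from the 'owner': 'admin' that ends up in settings.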

It occurred to me that this could be a limitation of the dev / test license considering Splunk's default username is admin and the license only allows one user but https://www.splunk.com/en_us/resources/personalized-dev-test-licenses/faq.html → "What are the software feature and deployment limitations in the personalized Dev/Test License?" doesn't mention anything about alerts or emailing.

I'll be continuing my investigation tomorrow and I'll post back here with my findings.

I have found that these errors can be resolved by modifying file /opt/splunk/etc/apps/search/bin/sendemail.py and adding line settings["owner"] = "-" between lines 1437 (results, dummyresults, settings = splunk.Intersplunk.getOrganizedResults()) and 1438 (try:). This is good to know but, as previously mentioned, isn't a long-term fix.

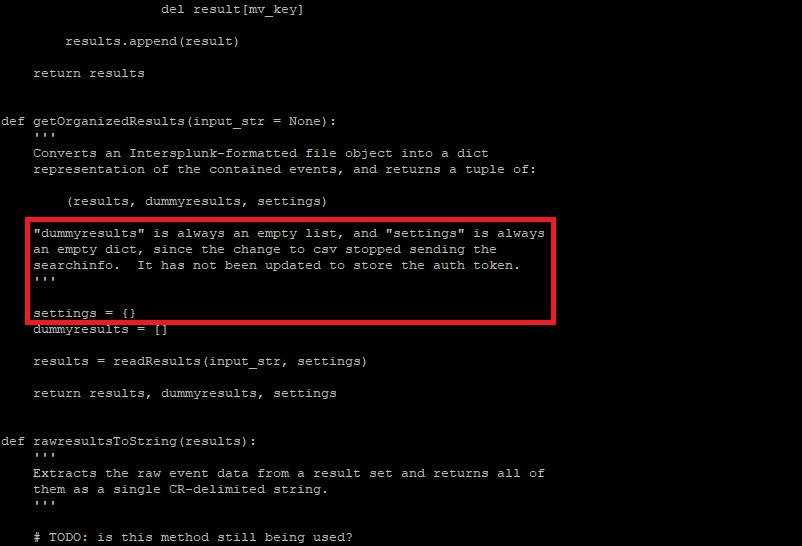

Given that the value of settings is set by splunk.Intersplunk.getOrganizedResults(), I looked for its file and found /opt/splunk/lib/python3.7/site-packages/splunk/Intersplunk.py but the function getOrganizedResults literally contains the line settings = {} and a comment saying that "settings" is always an empty dict:

Using command grep --include=\*.* --exclude=\*.{log,js} -RsnI /opt/splunk/etc/apps/TA-<app name>/ -e "owner\|admin", I found that file /opt/splunk/etc/apps/TA-<app name>/local/restmap.conf contained line [admin:TA_<app name>] so I modified it replacing admin with me@mydomain.com but that just seemed to break it even more.

So, I'm still no closer to understanding where the values of settings / an owner of admin comes from.

I tried upgrading to Splunk v8.1.0 (latest as of writing) and found that:

- It did overwrite file /opt/splunk/etc/apps/search/bin/sendemail.py, thereby removing the workaround.

- It didn't resolve the problem either.

Regarding your earlier comments about the hard-coded username in the code, on my system I've changed the default name of the administrative user. To be honest, I don't recall if I was prompted to do that or if I did it for some other reason.

Could that be somehow involved?

It could very well be. I'm currently setting up a new virtual instance to test that theory.