- Splunk Answers

- :

- Using Splunk

- :

- Splunk Search

- :

- Re: New field defined by time ranges

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark Topic

- Subscribe to Topic

- Mute Topic

- Printer Friendly Page

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

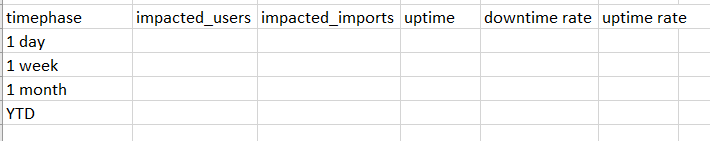

I'm trying to create the below search with the following dimensions. I'm struggling to create the 'timephase' column.

The 'timephase' field would take the same logic as the date range pickers in the global search, but only summon the data applicable in that timephase (ie. 1 day would reflect data of subsequent columns for 1 day ago, etc). I tried to approach it with a eval case, but would run into a mutual exclusion problem (the data captured in "1 day" would be excluded from "1 week", even thought it should be counted).

Does anyone have any recommendation for approaches to this?

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

If you only need those 4 groupings you can do that with a series of evals before your stats that will create the groups. Here's a run anywhere example that demonstrates the method to accomplish this:

| makeresults count=1000

| eval random=random() % 5616000

| eval _time=_time-random

| sort 0 + _time

| addinfo

| eval timephase1=if(_time>=relative_time(info_max_time, "-1d@d"), "1 day", null()), timephase2=if(_time>=relative_time(info_max_time, "-1w@d"), "1 week", null()), timephase3=if(_time>=relative_time(info_max_time, "-1mon@d"), "1 month", null()), timephase4=if(_time>=relative_time(info_max_time, "@y"), "YTD", null()), timephase=mvappend(timephase1, timephase2, timephase3, timephase4)

| stats count by timephase

| eval sorter=case(timephase="1 day", 1, timephase="1 week", 2, timephase="1 month", 3, timephase="YTD", 4)

| sort sorter

| fields - sorter

The query starts by creating four separate fields that represent each bucket of time. This is assuming you only need the four that you have listed in your example. The timephase field is made into a multi-valued aggregation of those four fields since a single event can fall into multiple buckets. Finally the query creates a table that shows the count of events that fall into each of those buckets. You see that YTD will always equal 1,000 due to the query only creating 1,000 events. The other numbers will be random based on what the random() function produces. The sorter field is used to ensure it shows up in the order of your mock up.

I used the addinfo command to ensure that it's always doing comparative time buckets based on the maximum searched time period in case you use this query on any time period that doesn't end now()

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

If you only need those 4 groupings you can do that with a series of evals before your stats that will create the groups. Here's a run anywhere example that demonstrates the method to accomplish this:

| makeresults count=1000

| eval random=random() % 5616000

| eval _time=_time-random

| sort 0 + _time

| addinfo

| eval timephase1=if(_time>=relative_time(info_max_time, "-1d@d"), "1 day", null()), timephase2=if(_time>=relative_time(info_max_time, "-1w@d"), "1 week", null()), timephase3=if(_time>=relative_time(info_max_time, "-1mon@d"), "1 month", null()), timephase4=if(_time>=relative_time(info_max_time, "@y"), "YTD", null()), timephase=mvappend(timephase1, timephase2, timephase3, timephase4)

| stats count by timephase

| eval sorter=case(timephase="1 day", 1, timephase="1 week", 2, timephase="1 month", 3, timephase="YTD", 4)

| sort sorter

| fields - sorter

The query starts by creating four separate fields that represent each bucket of time. This is assuming you only need the four that you have listed in your example. The timephase field is made into a multi-valued aggregation of those four fields since a single event can fall into multiple buckets. Finally the query creates a table that shows the count of events that fall into each of those buckets. You see that YTD will always equal 1,000 due to the query only creating 1,000 events. The other numbers will be random based on what the random() function produces. The sorter field is used to ensure it shows up in the order of your mock up.

I used the addinfo command to ensure that it's always doing comparative time buckets based on the maximum searched time period in case you use this query on any time period that doesn't end now()

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @dmarling , thanks for your solution. I am using the same logic by phasing two different times "Last_month" for the month of Feb and "This_month" for the month of Mar. The intention is to compare the two months performance.

The problem I am facing with is

- The total number are the same for both months.

- It takes ages to complete they query.

Below is the query:

main search....

| addinfo

| eval timephase1=if(_time>=relative_time(info_max_time, "-2mon@mon"), "last", null()), timephase2=if(_time>=relative_time(info_max_time, "-1mon@mon"), "this", null()), timephase = mvappend(timephase1, timephase2)

| stats count(timephase1) as last_month count(timephase2) as this_month by apps

| sort - this_month

| head 10

Many thanks in advance!

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

1. Why not just

| stats count by timephase apps

2. If I generate some fake data, I get proper results. Maybe your data just contains the same number of events for both those periods?

3. Check your job inspector but I suppose that you have many events over those two months of yours so it simply takes time to process all of them. You could probably use some summary indexing if you need to do this stuff often.

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thank you,

For some reason I get the total for each app for each month differently, but Feb -2mon@mon data is nearly double the month of Mar -1mon@mon.

Would you please advise if -2mon@mon is extracting Feb data only?

| eval timephase1=if(_time>=relative_time(info_max_time, "-2mon@mon"), "last", null()), timephase2=if(_time>=relative_time(info_max_time, "-1mon@mon"), "this", null())Many thanks!

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Honestly, I have no clue. You need to check your data and verify how much of it you have for each month.

Anyway, if you're using sort command in your search before all this clasifying, as was in @dmarling 's original solution, don't. Sorting doesn't help here (you still just clasify the data into separate sets with stats command) but will move further processing from indexers to search-heads so you lose any parallelism you might have had up to this point.

Just for test do

<your search>

| bin _time span=1mon

| stats count by app _time

And check how many events you have for each month and verify if your "summarized" results are right or wrong.

Anyway, I'm not sure if instead of the original solution I wouldn't try to do that which I just posted above and then summarize the results to match multiple months. (the approach might not work with different aggregation function than sum() since you obviously can't just - for example - add averages).

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @PickleRick again,

Many thanks for your responses. TO make make it simple, our requirements are to display the top most used 10 apps by MoM. As per my test the records would exceed around 700k -800k per month records. Appreciated mate!

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@PickleRick Apologise for the late reply. The reason I need to sort is because the requirements are to extract the top 10 used apps and compare them with last two months MoM.

Hence I use sort followed by | head =10. Thank you!

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

really clever @dmarling, thank you