- Splunk Answers

- :

- Using Splunk

- :

- Splunk Search

- :

- Re: How to work out Percentage above an average th...

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark Topic

- Subscribe to Topic

- Mute Topic

- Printer Friendly Page

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi Experts,

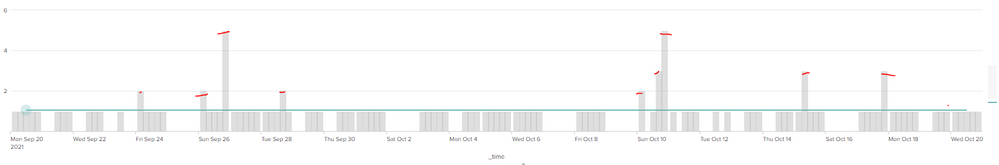

As part of an new initiative looking at SLO metrics. I have created the below query which nicely counts the amount of errors per day over a 30 day window and also provides a nice average level on the same graph using an overlay for easy viewing.

earliest=-30d@d index=fx ERROR sourcetype=mysourcetype source="mysource.log"

| rex field=source "temp(?<instance>.*?)\/"

| stats count by _time instance

| timechart span=1d max(count) by instance

| appendcols [search earliest=-30d@d index=fx ERROR sourcetype=mysourcetype source="mysource.log"

| rex field=source "temp(?<instance>.*?)\/"

| stats count by _time instance

| stats avg(count) AS 30d_average]|filldown 30d_average

I wanted to somehow work out the percentage of good results (anything that is lower then the average value) and the percentage of bad results (above the average) and show in a stats table for each instance.

Help needed! thanks in advance

Theo

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I don't know your detailed data but this search seems plain wrong.

You usually don't do stats by _time. Unless you have a very well-aligned input data, it will just give you pointless results.

Besides, there's not much point in using appendcols to re-run most of the original search just to add different aggregation. You're wasting one search. It's more convenient to do a eventstats.

The percentage would be quite easily "statsable" by firstly doing eventstats to add a general count of events, then doing a conditional count(eval(count>your_average)) and then dividing those two. But of course it's a completely different value than those parameters you calculate here so it wouldn't "mix" well in one chart or graph.

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I don't know your detailed data but this search seems plain wrong.

You usually don't do stats by _time. Unless you have a very well-aligned input data, it will just give you pointless results.

Besides, there's not much point in using appendcols to re-run most of the original search just to add different aggregation. You're wasting one search. It's more convenient to do a eventstats.

The percentage would be quite easily "statsable" by firstly doing eventstats to add a general count of events, then doing a conditional count(eval(count>your_average)) and then dividing those two. But of course it's a completely different value than those parameters you calculate here so it wouldn't "mix" well in one chart or graph.

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi Rick,

The current timechart(as shown in the example) seems to work fine I.E shows the Daily ERROR counts per day and then when looking across 30 days of data I can see which counts breach the 30 avg threshold.

That all seems to work fine. The problem Im having is I want to work what percentage of good events (per day bucket) meaning what error counts are below the average threshold to bad event days over the threshold into a seperate table (not on the same timechart) The timechart I posted is just for visual purposes to show any days of interest.

Theo

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Ok. It's your data aftet all 😉

All I'm saying is that you usually don't do stats by time because it would generate separate stat value for every single value of time value. That's why you do timecharts because that command bins and aggregates data automaticaly on time spans.

But if your data is precisely aligned, that might work - it's just that you very rarely see such case.

The rest - I already addressed - do the eventstats, then sum it up with stats (or you can filter the events first to get only the matching subset and then do the counting if you don't want to do eval-based stats).

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Please clarify the requirements. The current query produces 30 events (1 per day) with an unknown number of fields (instances) in each event. Is the percentage of good/bad counts supposed to be for each day or a total over all 30 days? If the latter, what is the expected visualization?

If this reply helps you, Karma would be appreciated.

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi Richard,

So the current visualization looks as attached. What i'm trying to do is work out the percentage of bad event counts per day (the ones in the image that are above the threshold line ) and then generate an overall percentage over a 30 day time duration vs good event counts (below the average) into a stats table showing "30 day duration Bad event count (overall bucket per day) = x% vs good event counts over 30 day duration"