Splunk Answers > Using Splunk > Splunk Search

Re: How to timechart on a single set of logs each ...


Hi there. I have a simple dashboard that shows growth in the number of Live / Archived accounts we manage in Google.

We currently pull the directory service into Splunk daily, which lets me run the following query (I have a few variants like this, switching between Archived and Live):

    index="google" sourcetype="*directory*" "emails{}.address"="*@mydomain.com"
    | timechart count by archived span=1d cont=FALSE

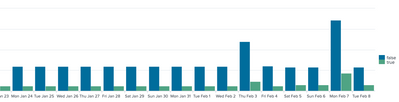

In the last week or so, we have sometimes received two or three directory pulls into Splunk in a single day, so the graph shows double or triple the actual count (see the attached image).

My question is as follows:

Is there anything I can add to my query so that it only counts ONE data pull per 24-hour period? That would keep the reporting consistent even when the directory pulls into Splunk are not.

I have poked around a bit with timechart, but I suspect I should be using a stats command instead. Any direction on which approach to use is appreciated!


Can you instead try a distinct count, assuming the archived account values are what is unique?

Something like this:

    index="google" sourcetype="*directory*" "emails{}.address"="*@mydomain.com"
    | timechart dc(archived) span=1d cont=FALSE
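If archived is a true/false flag, dc(archived) returns at most 2 per day, because it counts distinct values of the flag rather than distinct accounts. To count each account once per day no matter how many pulls arrived, a minimal sketch is to take the distinct count of a per-account identifier and split by archived. The field name primaryEmail is a hypothetical placeholder; substitute whatever field uniquely identifies an account in your events. The triple-backtick inline comments need a Splunk version that supports that syntax; delete them on older versions.

````spl
index="google" sourcetype="*directory*" "emails{}.address"="*@mydomain.com"
``` primaryEmail is a hypothetical per-account ID field; replace it with your own ```
``` dc() counts each account once per day even if it appears in several pulls ```
| timechart span=1d cont=FALSE dc(primaryEmail) by archived
````

An account whose archived status changes between two pulls on the same day would be counted once under each value.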


It depends on what is unique and what is duplicated in the events pulled on the same day.
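One way to check what is duplicated is to count how many times each account appears per day. A minimal sketch, assuming a hypothetical single-valued per-account field named primaryEmail (replace it with your own identifier field):

````spl
index="google" sourcetype="*directory*" "emails{}.address"="*@mydomain.com"
``` tag each event with the calendar day it belongs to ```
| bin _time span=1d as day
``` copies = how many times each account appears on that day ```
| stats count as copies by day, primaryEmail
``` how many accounts appeared once, twice, three times, ... per day ```
| stats count as accounts by day, copies
| eval day=strftime(day, "%Y-%m-%d")
````

On a day with a single pull, most accounts would be expected to show copies=1; a day with three pulls would push most of them to copies=3.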


In this case, each pull is a full directory dump of a few thousand account names and email addresses: a point-in-time snapshot of the directory as it existed when it was exported.


Do any of the dumps overlap in time? If not, here are some rough ideas:

| Situation | Approach |
|---|---|
| All records in a dump have same timestamp | Use earliest of the day |
| Dumps are periodic | Bucket time according to period, then use the first period of the day |
| Dumps are random but sufficiently separate from one another | Use a time-based transaction (expensive) |
| Each dump has a unique identifier | Use earliest of the day |
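A minimal sketch of the first row ("use earliest of the day" when every record in a dump shares one timestamp) keeps only the events whose _time equals the earliest _time seen that day, then charts as before. It assumes exact timestamp equality within a dump; if records in one dump differ by even a second, only some of them would survive the filter. The field names day and first_pull are illustrative.

````spl
index="google" sourcetype="*directory*" "emails{}.address"="*@mydomain.com"
``` tag each event with its calendar day, leaving _time untouched ```
| bin _time span=1d as day
``` earliest timestamp seen on that day, assumed to be the first dump ```
| eventstats min(_time) as first_pull by day
``` drop every event that belongs to a later dump ```
| where _time=first_pull
| timechart span=1d cont=FALSE count by archived
````

eventstats is used because it keeps every event while adding first_pull, so the filter can run before timechart; on large volumes it can be memory-heavy.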


The good news is that they do not overlap in time. Looking at the directory dumps, they seem to arrive every hour or so, so I think "use earliest of the day" is the right track. Now I can do a bit more digging to figure out the code for that.

I am going to explore the query a bit more to see whether there is an option that can reference the earliest entry in a day. Let's see how far I can get!
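Since the dumps arrive roughly hourly and the records within one dump may not share a single exact timestamp, a sketch of the periodic approach is to bucket events by hour and keep only the earliest hourly bucket of each day. It assumes a single dump never straddles an hour boundary (a dump running from 00:59 to 01:01 would be cut in half); pull_hour, day and first_hour are illustrative field names.

````spl
index="google" sourcetype="*directory*" "emails{}.address"="*@mydomain.com"
``` hourly bucket, assumed to contain exactly one dump ```
| bin _time span=1h as pull_hour
``` calendar day, computed from the original _time ```
| bin _time span=1d as day
``` earliest hourly bucket that has any events on that day ```
| eventstats min(pull_hour) as first_hour by day
| where pull_hour=first_hour
| timechart span=1d cont=FALSE count by archived
````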


If that hourly cycle is reliable, events from the first hour of the day can be filtered with:

    | where date_hour=0

date_hour is a meta field that Splunk automatically provides. No need to addinfo.
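One caveat, based on how Splunk documents the date_* fields: they are taken from the timestamp as written in the raw event, so they are absent when the timestamp was not extracted from the event itself, and they do not reflect time-zone conversions. If that applies to this data, a sketch that derives the hour from _time instead (rendered in the searching user's time zone) is below; pull_hour is an illustrative name.

````spl
index="google" sourcetype="*directory*" "emails{}.address"="*@mydomain.com"
``` hour of day (0-23) computed from _time rather than read from date_hour ```
| eval pull_hour=tonumber(strftime(_time, "%H"))
| where pull_hour=0
| timechart span=1d cont=FALSE count by archived
````

Filtering on hour 0 assumes a dump lands in the midnight hour every day; a day without one simply drops out of the chart.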