- Splunk Answers

- :

- Using Splunk

- :

- Splunk Search

- :

- How to run 10 unrelated searches as one scheduled ...

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark Topic

- Subscribe to Topic

- Mute Topic

- Printer Friendly Page

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

How to run 10 unrelated searches as one scheduled search?

I have several searches, about 10, each of which produces a CSV file as an output. I would like to run this as scheduled jobs on a weekly/monthly basis. Is there a way to combine all these searches into one so that I can run only one scheduled search instead of 10?

A sample search will look like below:

index=index1 sc_status=200 pdf1.pdf | timechart span=1d count | eval _time=strftime(_time, "%d/%m/%Y") | rename _time as Date count as Downloads | addcoltotals labelfield=Date label="Total PDF Downloads" | outputcsv pdf1.csv

I looked at multisearch command , not sure if that is an answer.

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

If you have 10 CSV files and you need to send them to 10 people, you can do it like this. First, make sure that you have a lookupfile defined as CSVfilenameToEmailAddress that has a 10 lines, each one having a CSV filename called attachment and an email address called email.

Your base search that generates 10 CSV files here (see my previous answer) | eval Dr0pM3=1 | search NOT Dr0pM3=1

| append [|inputlookup CSVfilenameToEmailAddress]

| map search="|inputcsv $attachment$ |sendemail sendresults=true inline=false to=\"email\" subject=\"$attachment$\" message=\"This report was generated from Splunk and should have an attachment.\""

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

If all the reports use the SAME input data, then you can use multireport like this:

My Common Base Search Here | multireport

[ My 1st transformation | outputcsv myOutputCSV_1.csv ]

...

[ My 10th transformation | outputcsv myOutputCSV_10.csv ]

If all the reports use different input data, then you can use multisearch like this:

| multisearch

[ My 1st base search| outputcsv myOutputCSV_1.csv ]

...

[ My 10th base search| outputcsv myOutputCSV_10.csv ]

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

It would be helpful if you can share some sample events. With current info, try like this

index=index1 sc_status=200 pdf | rex "(?<filename>\w+\.pdf)"| timechart span=1d count by filename | eval Date=strftime(_time, "%d/%m/%Y") | fields - _time | table Date * | addcoltotals labelfield=Date label="Total PDF Downloads" | outputcsv pdf1.csv

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I guess you could use map. For instance, take a look at the following example:

| stats count

| eval n = mvrange(1,11)

| mvexpand n

| eval file_in = "pdf" + n + ".pdf"

| eval file_out = "pdf" + n + ".csv"

| fields - n, count

| map search="search index=_internal earliest=-1h | head 1 | table index, sourcetype | eval myfile=$file_in$"

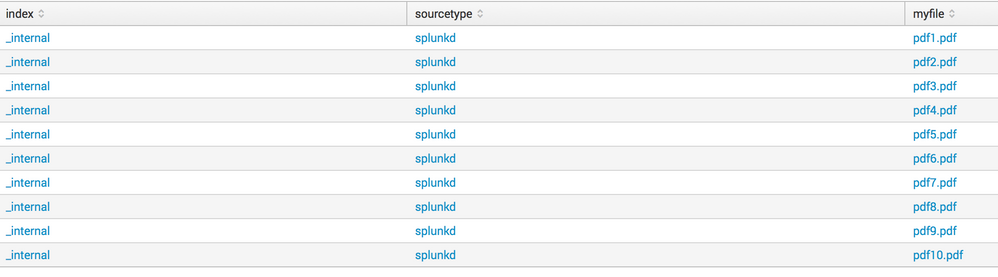

Output (see picture)

If we apply the same logic to your example maybe you could do something like this (if your file names are not incremental then simply hardcode those names with eval):

| stats count

| eval n = mvrange(1,11)

| mvexpand n

| eval file_in = "pdf" + n + ".pdf"

| eval file_out = "pdf" + n + ".csv"

| fields - n, count

| map search = "

search index=index1 sc_status=200 $file_in$

| timechart span=1d count

| eval _time=strftime(_time, \"%d/%m/%Y\")

| rename _time as Date count as Downloads

| addcoltotals labelfield=Date label=\"Total PDF Downloads\"

| outputcsv $file_out$

"

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Pdf files are not necessarily numbered and does not follow any pattern. It could be pd1fxyz.pdf or pdf22pqr.pdf or somethign else.

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

That shouldn't be a problem.

You could populate a CSV that contains the names of the files you want to search. For instance:

pdf_files.csv

-------------------

filename

pd1fxyz.pdf

pdf22pqr.pdf

And then simply run the following query (keep in mind map is not the fastest option so if your queries are expensive you might want to look for alternatives):

| inputcsv pdf_files.csv

| eval file_in = filename

| eval file_out = filename + ".csv"

| map maxsearches=50 search = "

search index=index1 sc_status=200 $file_in$

| timechart span=1d count

| eval _time=strftime(_time, \"%d/%m/%Y\")

| rename _time as Date count as Downloads

| addcoltotals labelfield=Date label=\"Total PDF Downloads\"

| outputcsv $file_out$

"

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thanks javiergn. Its close.

When I run this search, the output is displayed on the screen rather than saving it in csv files as it is supposed to do through outputcsv command. Any idea?

[map]: Could not write to file '"BushFireSurvivalPlan.pdf.csv"': Failed to open file for writing.

The above error repeats for all others filenames in the inputcsv file

However, the output on the screen seems to be correct.

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

That might be because outputcsv doesn't like the quotes in your file name.

It's a tricky one because of the way outputcsv and tokens work.

You could try the following alternative instead.

It'll output all your content into one big csv. If you add a fieldname with the name of the file that generated that log you might then be able to split this if you wanted to.

Give it a go and let me know:

| inputcsv pdf_files.csv

| eval file_in = filename

| map maxsearches=50 search="

search index=index1 sc_status=200 $file_in$

| timechart span=1d count

| eval _time=strftime(_time, \"%d/%m/%Y\")

| eval filename = $file_in$

| rename _time as Date count as Downloads

| addcoltotals labelfield=Date label=\"Total PDF Downloads\"

"

| outputcsv MyReport.csv

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

What's the particular reason you want to do that? Just trying to understand the use case.

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Business unit wants report in CSV file for various pdf download on a monthly/weekly basis, I can run 10 jobs and send 10 emails with one attachment in each email, but that's not great. I don't think I can send one email with 10 attachment from splunk. Next bets option is to run one job (instead of 10, or in future it could be 50) and dump files in a common location for business unit to grab.