- Find Answers

- :

- Using Splunk

- :

- Splunk Search

- :

- How to create a dashboard of XML file?

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark Topic

- Subscribe to Topic

- Mute Topic

- Printer Friendly Page

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi,

I have the following event (XML) in Splunk, how can I create a dashboard of this XML?

<JOB

APPLICATION="AFT-DTA"

CREATION_DATE="20191119"

JOBNAME="T-JOBA"

NODEID="10067"

<VARIABLE NAME="%%FTP-ACCOUNT" VALUE="FIC+DBD"/>

<VARIABLE NAME="%%FTP-LOSTYPE" VALUE="Unix"/>

<VARIABLE NAME="%%FTP-CONNTYPE1" VALUE="SFTP"/>

<VARIABLE NAME="%%FTP-LHOST" VALUE="61021"/>

<VARIABLE NAME="%%FTP-LUSER" VALUE="aft"/>

<VARIABLE NAME="%%FTP-ROSTYPE" VALUE="Windows"/>

<VARIABLE NAME="%%FTP-RHOST" VALUE="dbd7006"/>

<VARIABLE NAME="%%FTP-RUSER" VALUE="aftp"/>

<VARIABLE NAME="%%FTP-LPATH1" VALUE="DIRA"/>

<VARIABLE NAME="%%FTP-RPATH1" VALUE="DIRB"/>

</JOB>

<JOB

APPLICATION="AFT-DTA"

CREATION_DATE="20200113"

JOBNAME="A-JOBB"

NODEID="10007"

<VARIABLE NAME="%%FTP-ACCOUNT" VALUE="SDP+FIC"/>

<VARIABLE NAME="%%FTP-LOSTYPE" VALUE="Unix"/>

<VARIABLE NAME="%%FTP-CONNTYPE1" VALUE="SFTP"/>

<VARIABLE NAME="%%FTP-LHOST" VALUE="sdp9009"/>

<VARIABLE NAME="%%FTP-LUSER" VALUE="aftp"/>

<VARIABLE NAME="%%FTP-ROSTYPE" VALUE="Unix"/>

<VARIABLE NAME="%%FTP-CONNTYPE2" VALUE="SFTP"/>

<VARIABLE NAME="%%FTP-RHOST" VALUE="61021"/>

<VARIABLE NAME="%%FTP-RUSER" VALUE="aft"/>

<VARIABLE NAME="%%FTP-LPATH1" VALUE="DIRA"/>

<VARIABLE NAME="%%FTP-RPATH1" VALUE="DIRB"/>

<VARIABLE NAME="%%FTP-LPATH2" VALUE="DIRC"/>

<VARIABLE NAME="%%FTP-RPATH2" VALUE="DIRD"/>

</JOB>

Table should look like:

| ENV | JOBNAME | NODEID | LCON | LHOST | LPATH | RCON | RHOST | RPATH |

| TST | T-JOBA | 10067 | FIC | 61021 | DIRA | DBD | dbd7006 | DIRB |

| ACC | A-JOBB | 10007 | SDP | sdp9009 |

DIRA |

FIC | 61021 | DIRB DIRC |

Where ENV depends on first letter of JOBNAME

Where LCON is the value of FTP-ACCOUNT" before the + sign.

Where RCON is the value of FTP-ACCOUNT" after the + sign.

LPATH / RPATH can have multiple values where

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @ns102

To get you started, here is something you can do to achieve a table of information you want.

There'll be better ways to do it, but this yields what you are after.

This is assuming, you are seeing 2 jobs as a single event. Ideally, each job should be treated as a separate event with its own timestamp. This can be done by updating the sourcetype configuration in props.conf, as part of the data ingestion process.

Secondly, to speed things up I would suggest extracting fields during ingestion period, rather than doing it on search time.

For the ENV value, I would suggest creating a lookup, that checks the jobname and returns the environment value. Furthermore, you can setup an automatic lookup so that ENV field is already present even during search time. This way you can just update and maintain the lookup values to accommodate more environments and jobs as and when you have.

Also, while building dashboards I would recommend building a base-search and using those to get primary set of information to design panels and get insights out of. It will reduce the number of searches with field extractions and get you results as fast as it could.

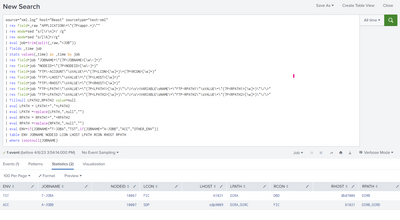

source="xml.log" host="Beast" sourcetype="test-xml"

| rex field=_raw "APPLICATION\=\"(?P<app>.*)\""

| rex mode=sed "s/[\r\n]+/ /g"

| rex mode=sed "s/[\%]+//g"

| eval job=trim(split(_raw,"<JOB"))

| fields _time job

| stats values(_time) as _time by job

| rex field=job "JOBNAME=\"(?P<JOBNAME>[\w\-]+)"

| rex field=job "NODEID=\"(?P<NODEID>[\w\-]+)"

| rex field=job "FTP\-ACCOUNT\"\sVALUE\=\"(?P<LCON>[\w]+)\+(?P<RCON>[\w]+)"

| rex field=job "FTP\-LHOST\"\sVALUE\=\"(?P<LHOST>[\w]+)"

| rex field=job "FTP\-RHOST\"\sVALUE\=\"(?P<RHOST>[\w]+)"

| rex field=job "FTP-LPATH1\"\sVALUE\=\"(?P<LPATH1>[\w]+)\"\/\>\s\<VARIABLE\sNAME\=\"FTP-RPATH1\"\sVALUE\=\"(?P<RPATH1>[\w]+)\"\/\>"

| rex field=job "FTP-LPATH2\"\sVALUE\=\"(?P<LPATH2>[\w]+)\"\/\>\s\<VARIABLE\sNAME\=\"FTP-RPATH2\"\sVALUE\=\"(?P<RPATH2>[\w]+)\"\/\>"

| fillnull LPATH2,RPATH2 value=null

| eval LPATH = LPATH1+","+LPATH2

| eval LPATH =replace(LPATH,",null","")

| eval RPATH = RPATH1+","+RPATH2

| eval RPATH =replace(RPATH,",null","")

| eval ENV=if(JOBNAME="T-JOBA","TST",if(JOBNAME="A-JOBB","ACC","OTHER_ENV"))

| table ENV JOBNAME NODEID LCON LHOST LPATH RCON RHOST RPATH

| where isnotnull(JOBNAME)

~ If the reply helps, an upvote would be appreciated.

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Your Q is truncated so I could not do everything but this should get you far enough to finish:

| makeresults

| eval _raw="<JOB

APPLICATION=\"AFT-DTA\"

CREATION_DATE=\"20191119\"

JOBNAME=\"T-JOBA\"

NODEID=\"10067\"

<VARIABLE NAME=\"%%FTP-ACCOUNT\" VALUE=\"FIC+DBD\"/>

<VARIABLE NAME=\"%%FTP-LOSTYPE\" VALUE=\"Unix\"/>

<VARIABLE NAME=\"%%FTP-CONNTYPE1\" VALUE=\"SFTP\"/>

<VARIABLE NAME=\"%%FTP-LHOST\" VALUE=\"61021\"/>

<VARIABLE NAME=\"%%FTP-LUSER\" VALUE=\"aft\"/>

<VARIABLE NAME=\"%%FTP-ROSTYPE\" VALUE=\"Windows\"/>

<VARIABLE NAME=\"%%FTP-RHOST\" VALUE=\"dbd7006\"/>

<VARIABLE NAME=\"%%FTP-RUSER\" VALUE=\"aftp\"/>

<VARIABLE NAME=\"%%FTP-LPATH1\" VALUE=\"DIRA\"/>

<VARIABLE NAME=\"%%FTP-RPATH1\" VALUE=\"DIRB\"/>

</JOB>

<JOB

APPLICATION=\"AFT-DTA\"

CREATION_DATE=\"20200113\"

JOBNAME=\"A-JOBB\"

NODEID=\"10007\"

<VARIABLE NAME=\"%%FTP-ACCOUNT\" VALUE=\"SDP+FIC\"/>

<VARIABLE NAME=\"%%FTP-LOSTYPE\" VALUE=\"Unix\"/>

<VARIABLE NAME=\"%%FTP-CONNTYPE1\" VALUE=\"SFTP\"/>

<VARIABLE NAME=\"%%FTP-LHOST\" VALUE=\"sdp9009\"/>

<VARIABLE NAME=\"%%FTP-LUSER\" VALUE=\"aftp\"/>

<VARIABLE NAME=\"%%FTP-ROSTYPE\" VALUE=\"Unix\"/>

<VARIABLE NAME=\"%%FTP-CONNTYPE2\" VALUE=\"SFTP\"/>

<VARIABLE NAME=\"%%FTP-RHOST\" VALUE=\"61021\"/>

<VARIABLE NAME=\"%%FTP-RUSER\" VALUE=\"aft\"/>

<VARIABLE NAME=\"%%FTP-LPATH1\" VALUE=\"DIRA\"/>

<VARIABLE NAME=\"%%FTP-RPATH1\" VALUE=\"DIRB\"/>

<VARIABLE NAME=\"%%FTP-LPATH2\" VALUE=\"DIRC\"/>

<VARIABLE NAME=\"%%FTP-RPATH2\" VALUE=\"DIRD\"/>

</JOB>"

| rename COMMENT AS "EVERYTHING ABOVE IS CREATING FAKE DATA; EVERYTHING BELOW IS YOUR SOLUTION"

| rex field=_raw mode=sed "s/\<VARIABLE NAME=\"//g s/\"\s+VALUE=/=/g s/\/>//g"

| kv

| rex field=JOBNAME "^(?<ENV>\w+)-(?<JOBNAME>.*)$"

| rex field=FTP_ACCOUNT "^(?<LCON>[^+]+)\+(?<RCON>.*)$"

| eval LPATH="" | foreach FTP_LPATH* [ eval LPATH=mvappend(LPATH, <<FIELD>>) | fields - <<FIELD>> ]

| eval RPATH="" | foreach FTP_RPATH* [ eval RPATH=mvappend(RPATH, <<FIELD>>) | fields - <<FIELD>> ]

| eval LHOST="" | foreach FTP_LHOST* [ eval LHOST=mvappend(LHOST, <<FIELD>>) | fields - <<FIELD>> ]

| eval RHOST="" | foreach FTP_RHOST* [ eval RHOST=mvappend(RHOST, <<FIELD>>) | fields - <<FIELD>> ]

| table ENV JOBNAME NODEID LCON LHOST LPATH RCON RHOST RPATH TST T-JOBA 10067 FIC 61021 DIRA DBD dbd7006 DIRB ACC A-JOBB 10007 SDP sdp9009 *- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @ns102

What have you tried so far?

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi,

Not much 😉 I'm new to Splunk and really struggling to add <VARIABLE NAME> to my output.

At the moment I have the following. So that's quite basic;

| table DEFTABLE.FOLDER.JOB* |

rename DEFTABLE.FOLDER.JOB{@*} as * |

eval temp=mvzip(JOBNAME,mvzip(NODEID,APPLICATION,"&"),"&")

| table temp

| mvexpand temp

| rex field=temp "(?<JOBNAME>.*)&(?<NODEID>.*)&(?<APPLICATION>.*)" | fields - temp

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @ns102

To get you started, here is something you can do to achieve a table of information you want.

There'll be better ways to do it, but this yields what you are after.

This is assuming, you are seeing 2 jobs as a single event. Ideally, each job should be treated as a separate event with its own timestamp. This can be done by updating the sourcetype configuration in props.conf, as part of the data ingestion process.

Secondly, to speed things up I would suggest extracting fields during ingestion period, rather than doing it on search time.

For the ENV value, I would suggest creating a lookup, that checks the jobname and returns the environment value. Furthermore, you can setup an automatic lookup so that ENV field is already present even during search time. This way you can just update and maintain the lookup values to accommodate more environments and jobs as and when you have.

Also, while building dashboards I would recommend building a base-search and using those to get primary set of information to design panels and get insights out of. It will reduce the number of searches with field extractions and get you results as fast as it could.

source="xml.log" host="Beast" sourcetype="test-xml"

| rex field=_raw "APPLICATION\=\"(?P<app>.*)\""

| rex mode=sed "s/[\r\n]+/ /g"

| rex mode=sed "s/[\%]+//g"

| eval job=trim(split(_raw,"<JOB"))

| fields _time job

| stats values(_time) as _time by job

| rex field=job "JOBNAME=\"(?P<JOBNAME>[\w\-]+)"

| rex field=job "NODEID=\"(?P<NODEID>[\w\-]+)"

| rex field=job "FTP\-ACCOUNT\"\sVALUE\=\"(?P<LCON>[\w]+)\+(?P<RCON>[\w]+)"

| rex field=job "FTP\-LHOST\"\sVALUE\=\"(?P<LHOST>[\w]+)"

| rex field=job "FTP\-RHOST\"\sVALUE\=\"(?P<RHOST>[\w]+)"

| rex field=job "FTP-LPATH1\"\sVALUE\=\"(?P<LPATH1>[\w]+)\"\/\>\s\<VARIABLE\sNAME\=\"FTP-RPATH1\"\sVALUE\=\"(?P<RPATH1>[\w]+)\"\/\>"

| rex field=job "FTP-LPATH2\"\sVALUE\=\"(?P<LPATH2>[\w]+)\"\/\>\s\<VARIABLE\sNAME\=\"FTP-RPATH2\"\sVALUE\=\"(?P<RPATH2>[\w]+)\"\/\>"

| fillnull LPATH2,RPATH2 value=null

| eval LPATH = LPATH1+","+LPATH2

| eval LPATH =replace(LPATH,",null","")

| eval RPATH = RPATH1+","+RPATH2

| eval RPATH =replace(RPATH,",null","")

| eval ENV=if(JOBNAME="T-JOBA","TST",if(JOBNAME="A-JOBB","ACC","OTHER_ENV"))

| table ENV JOBNAME NODEID LCON LHOST LPATH RCON RHOST RPATH

| where isnotnull(JOBNAME)

~ If the reply helps, an upvote would be appreciated.

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Wow, I can really go further with this and when I get more experience with Splunk I will make improvements. for now it's a perfect ! Thank you so much!

LPATH and RPATH are still empty but that's because the value can also contain / _ and \ characters. hopefully I'll can figure this out.