How do I resolve this error when connecting two search queries with the appendcols command?

I am currently trying to join two search queries with the appendcols command in order to display two lines of data in a line graph. I am running into a problem with the search that is being appended: it displays the wrong data.

This is the current search with appendcols added.

index=main host=* sourcetype=syslog process=elcsend "\"config " CentOS

| rex "([^!]*!){2}(?P<type>[^!]*)!([^!]*!){4}(?P<role>[^!]*)!([^!]*!){23}(?P<vers>[^!]*)"

| search role=std-dhcp

| eval Total=sum(host)

| timechart span=1d count by Total

| rename NULL as CentOS

| appendcols override=true [search index=os source=ps host=deml* OR host=sefs* OR host=ingg* OR host=us* OR host=gblc*

NOT user=dcv NOT user=root NOT user=chrony NOT user=dbus NOT user=gdm NOT user=libstor+ NOT user=nslcd NOT user=polkitd NOT user=postfix NOT user=rpc NOT user=rpcuser NOT user=rtkit NOT user=colord NOT user=nobody NOT user=sgeadmin NOT user=splunk NOT user=setroub+ NOT user=lp NOT user=68 NOT user=ntp NOT user=smmsp NOT user=dcvsmagent NOT user=libstoragemgmt

| timechart span=1d dc(user) as DCV]

This is the current result.

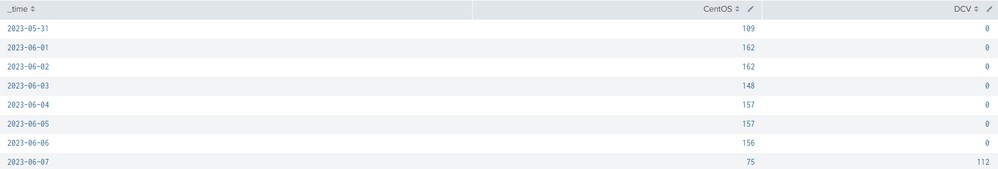

DCV (furthest right) is displaying the wrong count: when the search is run by itself it shows around 300, but once appended it displays only 112, give or take. It is also not displayed across the period of days, only on the most recent day. I am not sure whether something is wrong in the search itself or whether it has something to do with the fields overlapping.

If a subsearch produces different results when run on its own than when run as a subsearch, the most typical reason is that it hits limits for a subsearch and is silently finalized before fully finishing its operations. That's one.

Two - I don't see why you use appendcols (which is quite limiting).

Three - I don't get the beginning of your search. The sum(host) makes no sense - unless your hosts are named numerically, you're gonna get a null every time. Why bother calculating it at all?

Four - all those NOTs in the subsearch. Ugh. I understand that maybe in your case that's the only way to find relevant events but inclusion is generally much better than exclusion.
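As a small illustration of the fourth point, the chain of NOT terms can at least be collapsed into a single IN list. This is only a sketch of the same exclusion filter, not a true inclusion filter; the parentheses around the host terms are added to make the grouping explicit, and the values containing `+` are quoted as a precaution.

```spl
index=os source=ps (host=deml* OR host=sefs* OR host=ingg* OR host=us* OR host=gblc*)
    NOT user IN (dcv, root, chrony, dbus, gdm, "libstor+", nslcd, polkitd, postfix, rpc, rpcuser, rtkit, colord, nobody, sgeadmin, splunk, "setroub+", lp, 68, ntp, smmsp, dcvsmagent, libstoragemgmt)
| timechart span=1d dc(user) as DCV
```

If the interactive users can be identified positively (by a naming pattern or a lookup, neither of which is shown in the question), filtering on that would likely be cheaper and less fragile than maintaining the exclusion list.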

The overall search can probably be improved (maybe quite a lot), but first analyze the third point above, because it makes no sense and I suspect a mistake, since you deliberately call this field Total.
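For the third point, a minimal sketch of the first search without the Total field. It assumes the intent was simply a daily count of matching events, which is a guess; if the goal was the number of distinct hosts per day, `dc(host)` would replace `count`.

```spl
index=main host=* sourcetype=syslog process=elcsend "\"config " CentOS
| rex "([^!]*!){2}(?P<type>[^!]*)!([^!]*!){4}(?P<role>[^!]*)!([^!]*!){23}(?P<vers>[^!]*)"
| search role=std-dhcp
| timechart span=1d count as CentOS
```

Naming the series directly with `as CentOS` also removes the need for the `rename NULL as CentOS` step, which only exists because Total is null and every event lands in the NULL series.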

Subsearches are limited to 50,000 events - this is usually silent (no error message) - is there anything in the search log to show that this may have happened?

I think that is the issue, as the events in the subsearch reach into the millions. Is there an alternative to appendcols that does not have the 50,000 limit?

But you don't seem to be returning millions of rows of results. You're returning just a few days. Whether it's calculated from 10, 1000 or 10^10 individual data points doesn't matter. The limit is for the number of rows returned to the outer search. But with so many events to process you might be hitting the other limit - the time limit.
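One way to sidestep subsearch limits altogether, which is not something proposed in this thread, is to avoid the subsearch: pull both data sets in one base search and compute each series with a conditional aggregation. The sketch below assumes the CentOS series is meant to be a daily event count and reuses the filters from the question unchanged.

```spl
(index=main host=* sourcetype=syslog process=elcsend "\"config " CentOS)
OR (index=os source=ps (host=deml* OR host=sefs* OR host=ingg* OR host=us* OR host=gblc*)
    NOT user IN (dcv, root, chrony, dbus, gdm, "libstor+", nslcd, polkitd, postfix, rpc, rpcuser, rtkit, colord, nobody, sgeadmin, splunk, "setroub+", lp, 68, ntp, smmsp, dcvsmagent, libstoragemgmt))
| rex "([^!]*!){2}(?P<type>[^!]*)!([^!]*!){4}(?P<role>[^!]*)!([^!]*!){23}(?P<vers>[^!]*)"
| timechart span=1d
    count(eval(index="main" AND role="std-dhcp")) as CentOS
    dc(eval(if(index="os", user, null()))) as DCV
```

Because both series come out of the same timechart, they share the same daily buckets, so there is no dependence on row order as there is with appendcols.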

You appear to be doing all your grouping by days, so you could change the appendcols to a series of appends, each with a time constraint (e.g. earliest=... latest=...) for a different day. Then you could do stats values on the two fields (CentOS and DCV) by _time.
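A rough sketch of that suggestion for two days. The time windows (`-1d@d` to `@d`, `-2d@d` to `-1d@d`) are illustrative choices, the first search is assumed to be a plain daily count, and more append blocks would be needed to cover a longer range.

```spl
index=main host=* sourcetype=syslog process=elcsend "\"config " CentOS
| rex "([^!]*!){2}(?P<type>[^!]*)!([^!]*!){4}(?P<role>[^!]*)!([^!]*!){23}(?P<vers>[^!]*)"
| search role=std-dhcp
| timechart span=1d count as CentOS
| append
    [ search index=os source=ps (host=deml* OR host=sefs* OR host=ingg* OR host=us* OR host=gblc*)
        NOT user IN (dcv, root, chrony, dbus, gdm, "libstor+", nslcd, polkitd, postfix, rpc, rpcuser, rtkit, colord, nobody, sgeadmin, splunk, "setroub+", lp, 68, ntp, smmsp, dcvsmagent, libstoragemgmt)
        earliest=-1d@d latest=@d
      | timechart span=1d dc(user) as DCV ]
| append
    [ search index=os source=ps (host=deml* OR host=sefs* OR host=ingg* OR host=us* OR host=gblc*)
        NOT user IN (dcv, root, chrony, dbus, gdm, "libstor+", nslcd, polkitd, postfix, rpc, rpcuser, rtkit, colord, nobody, sgeadmin, splunk, "setroub+", lp, 68, ntp, smmsp, dcvsmagent, libstoragemgmt)
        earliest=-2d@d latest=-1d@d
      | timechart span=1d dc(user) as DCV ]
| stats values(CentOS) as CentOS values(DCV) as DCV by _time
```

Each appended subsearch covers only one day, so it has far less data to process, though each is presumably still subject to the usual subsearch limits. The final stats merges the rows on _time rather than relying on row position.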