Why do I keep getting an email every hour despite ...


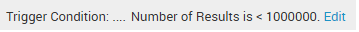

I have a search that counts the logs from several indexes over the last 60 minutes. I saved it as an alert that should email me if the event count is < 1 million. I keep getting an email every hour even though the trigger condition isn't met: the logs total well over 1M for the last 60 minutes. What am I doing wrong? I feel like I'm going crazy here.

Search:

| tstats count WHERE (index=cisco OR index=palo OR index=email) BY index

Results:

| index | count |
|---|---|
| cisco | 3923160 |
| palo | 21720018 |
| email | 7583099 |

---

Your alert condition is evaluated against the number of rows the search returns, not the number of events it scanned. Your tstats search returns one row per index, so the number of results equals the number of indexes (three here), which is far less than 1,000,000. The condition therefore matches on every run and the alert triggers. Handle the threshold in the alert search itself instead. For example, to alert when any index has fewer than 1M events in the selected time range:

Alert Search:

| tstats count WHERE (index=cisco OR index=palo OR index=email) BY index | where count <1000000

Alert condition: trigger when Number of Results is greater than 0.

If all three indexes you're checking have more than 1M events, the alert search returns 0 results, so the alert does not trigger.
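For reference, here is a rough sketch of how the original and the fixed trigger might look in `savedsearches.conf`. The stanza names, hourly schedule, 60-minute time range, and recipient address are hypothetical, and the first stanza is an assumption about how the original alert was set up (a "Number of Results is less than 1000000" trigger in Splunk Web).

```ini
# ASSUMED original setup (hypothetical stanza name and recipient).
# It fires every hour: the search returns one row per index (3 rows),
# and 3 is always less than 1000000.
[Index volume check - original]
search = | tstats count WHERE (index=cisco OR index=palo OR index=email) BY index
dispatch.earliest_time = -60m
dispatch.latest_time = now
enableSched = 1
cron_schedule = 0 * * * *
counttype = number of events
relation = less than
quantity = 1000000
action.email = 1
action.email.to = you@example.com

# Fixed setup following the answer above: the threshold lives in the search,
# and the trigger only checks whether any row survived the where filter.
[Index volume check - fixed]
search = | tstats count WHERE (index=cisco OR index=palo OR index=email) BY index | where count < 1000000
dispatch.earliest_time = -60m
dispatch.latest_time = now
enableSched = 1
cron_schedule = 0 * * * *
counttype = number of events
relation = greater than
quantity = 0
action.email = 1
action.email.to = you@example.com
```

If the intent is to alert on the combined total across the three indexes rather than on each index separately, dropping `BY index` makes tstats return a single row with the overall count, which the same `where count < 1000000` filter can then test.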

---

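You have to use a custom trigger condition instead. The built-in Number of Results condition counts how many result rows your search physically returns (here, one per index), not the values in the count field.

Custom Condition

Trigger: search count < 1000000

The custom condition is a search run over those result rows, so the alert triggers if any index has a count below 1,000,000.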
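A sketch of the custom-condition variant in `savedsearches.conf`, again with a hypothetical stanza name, schedule, time range, and recipient. The alert search itself stays unfiltered, and `alert_condition` holds the secondary search that is evaluated against its result rows; the alert fires if that secondary search returns anything.

```ini
# Hypothetical stanza; schedule, time range, and recipient are assumptions.
[Index volume check - custom condition]
search = | tstats count WHERE (index=cisco OR index=palo OR index=email) BY index
dispatch.earliest_time = -60m
dispatch.latest_time = now
enableSched = 1
cron_schedule = 0 * * * *
# Evaluated against the result rows above: triggers if any index row has count < 1000000.
# Assumption: with alert_condition set, counttype/relation/quantity are left unset.
alert_condition = search count < 1000000
action.email = 1
action.email.to = you@example.com
```

Compared with the `| where` approach, this keeps the threshold out of the search, so the emailed results still list every index and its count.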

---

Oh OK, I didn't realize it was looking at the number of rows returned rather than the count value in each row. I adjusted the alert, and it appears to be working as intended now.