How to change the total disk space use in Splunk (...

Hello,

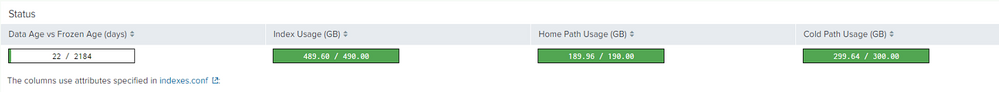

Our Splunk storage was just expanded, as shown in the image above (we have a master and a 1:1 indexer cluster structure).

That is, hot storage went from 500 GB to 1 TB and cold storage from 1.5 TB to 3 TB.

I changed the stanzas in splunk/etc/master-apps/_cluster/local/indexes.conf (where we keep our per-index settings such as maxTotalDataSizeMB, homePath.maxDataSizeMB and coldPath.maxDataSizeMB) to match the new disk space. But after restarting the services on both the indexers and the master, the new limits are not applied and the old ones are still in use. I suspect I missed something.

Can anyone point me to where the overall settings are configured? (I'm not familiar with the Splunk structure.)

You need to deploy the cluster bundle to the peers. A restart will not apply the new settings.

On the master:

```shell
splunk apply cluster-bundle
```
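A minimal sketch of the same step as a reusable shell function, assuming a default Linux install under `/opt/splunk` (pass a different path if yours differs). `apply cluster-bundle` distributes the contents of `master-apps` to the peers, and `show cluster-bundle-status` can be used afterwards to confirm the peers picked up the new bundle. The function name `push_cluster_bundle` is hypothetical, and the CLI may prompt for admin credentials.

```shell
#!/usr/bin/env bash
# Hypothetical helper: push the master-apps bundle from the master to the peers.
# Assumption: $1 is the Splunk install directory on the master (default /opt/splunk).
push_cluster_bundle() {
  local splunk_home="${1:-/opt/splunk}"
  local splunk_bin="$splunk_home/bin/splunk"

  # Fail early if the CLI binary is not where we expect it.
  if [ ! -x "$splunk_bin" ]; then
    echo "splunk CLI not found at $splunk_bin" >&2
    return 1
  fi

  # Distribute etc/master-apps to all peers; --answer-yes skips the confirmation prompt.
  "$splunk_bin" apply cluster-bundle --answer-yes || return 1

  # Report the bundle status on the master and the peers.
  "$splunk_bin" show cluster-bundle-status
}
```

Run it on the master, e.g. `push_cluster_bundle /opt/splunk`. Depending on which settings changed, the peers may perform a rolling restart to load the new bundle.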

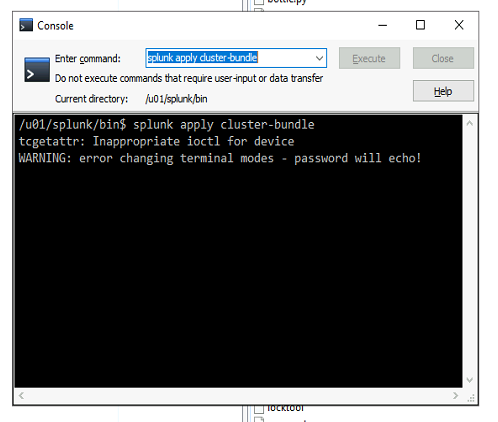

I tried running your command on the master, but got the result shown in the image above.

Try this from the CLI, in the `bin` directory of the Splunk installation on the master:

```shell
./splunk apply cluster-bundle
```
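One common reason the shell reports that `splunk` is not found is that the CLI is not on `PATH`; `./splunk` only works from inside `$SPLUNK_HOME/bin`. The sketch below resolves the binary from `SPLUNK_HOME`, falling back to `/opt/splunk` (the usual Linux default, assumed here). `find_splunk_cli` is a hypothetical name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the full path of the splunk CLI, or fail.
# Uses $SPLUNK_HOME if set, otherwise assumes the default /opt/splunk.
find_splunk_cli() {
  local home="${SPLUNK_HOME:-/opt/splunk}"
  local bin="$home/bin/splunk"
  if [ -x "$bin" ]; then
    printf '%s\n' "$bin"
  else
    echo "no executable splunk CLI at $bin; set SPLUNK_HOME" >&2
    return 1
  fi
}

# Usage on the master:
#   cli=$(find_splunk_cli) && "$cli" apply cluster-bundle
```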

To be honest, I don't understand the problem. Expanding your storage and raising your limits doesn't mean Splunk will automatically fill all the storage space.

Firstly, as @richgalloway pointed out, there are volume settings. But even if you're not using volume-based limits, storage utilization depends on:

1) ingestion rate (if you're ingesting, for example, 10 MB per day, you won't fill a 1 TB drive in 10 days)

2) Size-based retention limits

3) Time-based retention limits

Even with increased size limits, buckets would still get deleted once they got too old.
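To see which limit wins, the sketch below estimates roughly how many days of data an index keeps: the size cap divided by the daily on-disk growth, capped by the time-based limit. The daily on-disk growth is an input you have to measure yourself (on-disk size is not the same as licensed ingest), and `retention_days` is a hypothetical helper. For reference, Splunk's default `frozenTimePeriodInSecs` is 188697600 seconds (about 6 years), so a time limit applies even if none was set explicitly.

```shell
#!/usr/bin/env bash
# Hypothetical helper: rough estimate of how many days of data an index retains.
#   $1 size cap in MB (e.g. maxTotalDataSizeMB; enforced per indexer)
#   $2 measured on-disk growth per day in MB for this index on one indexer
#   $3 time limit in seconds (frozenTimePeriodInSecs; Splunk's default is 188697600)
# Buckets are frozen when either limit is reached, so the smaller value wins.
retention_days() {
  local cap_mb="$1" daily_mb="$2" frozen_secs="${3:-188697600}"
  local by_size=$(( cap_mb / daily_mb ))     # whole days until the size cap is reached
  local by_time=$(( frozen_secs / 86400 ))   # 86400 seconds per day
  if [ "$by_size" -lt "$by_time" ]; then
    echo "$by_size"
  else
    echo "$by_time"
  fi
}
```

With a hypothetical 100,000 MB/day of on-disk growth, `retention_days 1300000 100000` prints 13, versus 4 for `retention_days 480000 100000`.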

1) We ingest 600 GB of data daily, and the old storage was too small for our needs.

2) Size-based retention limits. There is an index I want to extend. The old limits were:

```
maxTotalDataSizeMB = 480000
homePath.maxDataSizeMB = 180000
coldPath.maxDataSizeMB = 300000
```

We have used this config since the start, but it is no longer enough, so I changed it to:

```
maxTotalDataSizeMB = 1300000
homePath.maxDataSizeMB = 500000
coldPath.maxDataSizeMB = 800000
```

I restarted the master, index01 and index02, but the new config is not applied.

3) We don't have a time-based retention limit.

We also never had maxVolumeDataSizeMB set in the first place, so why would the new per-index limits not apply unless I add it?

So I was wondering whether there is a step elsewhere that I missed.
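These numbers can be sanity-checked before pushing them. In an indexer cluster the sizes in `indexes.conf` apply per indexer, not to the cluster as a whole, so each index's hot/warm and cold caps should fit inside its `maxTotalDataSizeMB` and inside the disks on each peer. A minimal sketch of that check for a single index, assuming 1 TB is counted as 1,000,000 MB; `check_index_limits` is a hypothetical helper, and it does not account for other indexes sharing the same disks.

```shell
#!/usr/bin/env bash
# Hypothetical helper: sanity-check one index's size limits on one indexer.
#   $1 maxTotalDataSizeMB   $2 homePath.maxDataSizeMB   $3 coldPath.maxDataSizeMB
#   $4 hot/warm disk size in MB   $5 cold disk size in MB
# Note: these limits are enforced per indexer (peer), not across the cluster.
check_index_limits() {
  local total="$1" home="$2" cold="$3" hot_disk="$4" cold_disk="$5"
  local status=0
  if [ $(( home + cold )) -gt "$total" ]; then
    echo "WARN: home + cold ($(( home + cold ))) exceeds maxTotalDataSizeMB ($total)"
    status=1
  fi
  if [ "$home" -gt "$hot_disk" ]; then
    echo "WARN: homePath cap ($home) exceeds hot disk ($hot_disk)"
    status=1
  fi
  if [ "$cold" -gt "$cold_disk" ]; then
    echo "WARN: coldPath cap ($cold) exceeds cold disk ($cold_disk)"
    status=1
  fi
  # Print OK only when no warning was emitted.
  [ "$status" -eq 0 ] && echo "OK"
  return "$status"
}
```

With the new values, `check_index_limits 1300000 500000 800000 1000000 3000000` prints `OK`.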

If you're using volumes (which is a good idea), then you'll also need to adjust the maxVolumeDataSizeMB setting.
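For reference, a volume-based layout looks roughly like the fragment printed by the sketch below. `maxVolumeDataSizeMB` on a volume caps all indexes stored on it together, so it has to be raised along with the per-index limits. The helper only prints an `indexes.conf` fragment for review before it goes into `master-apps/_cluster/local/indexes.conf`; the function name, volume names, paths, index name and sizes are all hypothetical placeholders.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print a volume-based indexes.conf fragment for review.
#   $1 index name   $2 hot volume path   $3 hot volume cap (MB)
#   $4 cold volume path   $5 cold volume cap (MB)
# The volume names "hot" and "cold" are placeholders; thawedPath cannot use a volume.
volume_stanzas() {
  cat <<EOF
[volume:hot]
path = $2
maxVolumeDataSizeMB = $3

[volume:cold]
path = $4
maxVolumeDataSizeMB = $5

[$1]
homePath = volume:hot/$1/db
coldPath = volume:cold/$1/colddb
thawedPath = \$SPLUNK_DB/$1/thaweddb
EOF
}
```

Example: `volume_stanzas my_index /data/hot 900000 /data/cold 2700000`.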
