- High CPU due to High Number of dispatch Jobs (Will...

Hi

We are suffering from high CPU on a one-box Splunk setup (about 54 cores, indexer and search head all in one). As the issue is complex, I want to know: will more hardware help, or do we need to make other changes?

We have built a system where saved searches call other saved searches (like a Java function). We did this because we use the same core function for alerts and dashboards, so we have a single code line.

However, this seems to increase the number of jobs in the dispatch directory (splunk/var/run/splunk/dispatch).

For example, if you call one saved search you are putting 2 jobs into the dispatch directory. If you call a lookup table, that is another job, and so on. Before we know it we have 5,000 jobs! This causes high CPU.

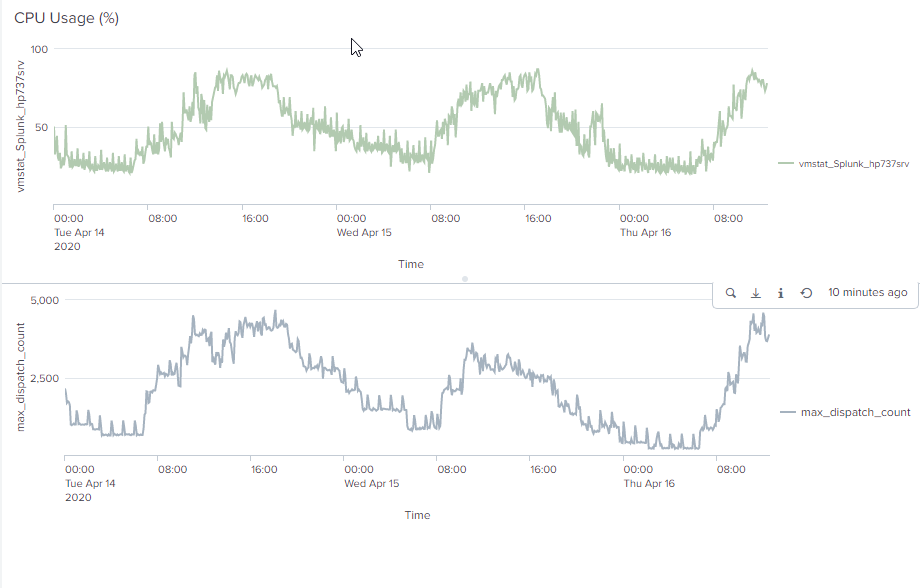

We have put in place scripts that remove these jobs (non-running jobs only) every minute, but the count still grows very fast during busy periods, and CPU rises with it. The graph shows the CPU on top and the number of directories in splunk/var/run/splunk/dispatch below it. We can see they are closely correlated.
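A minimal sketch of how the directory count in the graph could be sampled, assuming each job is one subdirectory directly under the dispatch directory. The function name `count_dispatch_jobs` is hypothetical and not part of the original setup.

```shell
# Hypothetical helper: count job directories directly under a dispatch directory.
# Assumes one subdirectory per search job; plain files are ignored.
count_dispatch_jobs() {
    local dispatch_dir="$1"
    # -mindepth 1 keeps the dispatch directory itself out of the count.
    find "$dispatch_dir" -mindepth 1 -maxdepth 1 -type d 2>/dev/null | wc -l | tr -d ' '
}
```

Calling `count_dispatch_jobs` with the dispatch path once a minute and logging the result would give a series that can be plotted next to CPU.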

To try to fight this we have developed the script below, which removes the files. However, during busy periods it is not enough.

#!/bin/bash

dispatch=/hp737srv2/apps/splunk/var/run/splunk/dispatch

splunkdir=/hp737srv2/apps/splunk

find "$dispatch" -mindepth 1 -maxdepth 1 -mmin +3 2>/dev/null | while read -r job; do if [ ! -e "$job/save" ]; then rm -rfv "$job"; fi; done

find "$dispatch" -type d -empty -name alive.token -mmin +3 2>/dev/null | xargs -I {} rm -Rf {}

find "$splunkdir/var/run/splunk/" -type f -name "session-*" -mmin +3 2>/dev/null | xargs -I {} rm -Rf {}

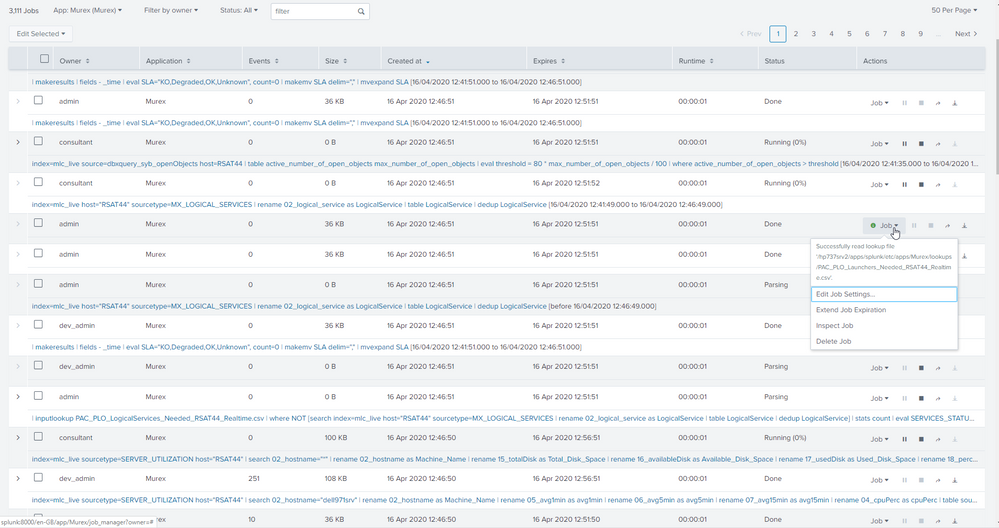

We can see from the running jobs that we have a lot of blank jobs. I think they are the lookup tables, etc.

But they also account for a lot of the jobs.

So I am looking for help in knowing whether adding more hardware will help. I think not, unless it is a search head, as the jobs will still be on the search head. Or will adding more indexers and making a cluster help this issue?

Or is there a setting I can add so that one search that calls 2 saved searches and 2 lookup files does not use 5 searches in the dispatch directory? Or is something else going on that I need to look at?

Thanks in advance

Robbie

I suggest switching from a standalone Splunk to a distributed Splunk with separate indexer and search head instances.

Rather than have alerts and dashboards invoke saved searches, schedule the saved searches to run at desired intervals. Then use the loadjob command in your alerts and dashboards to display the results of the last run of the saved search. That should reduce the load on your system.
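As a rough sketch of that suggestion: `loadjob` accepts a `savedsearch="<user>:<app>:<search name>"` argument and reads the artifact of the most recent scheduled run instead of dispatching the search again. The helper below only builds that query string. The function name, and the owner, app, and search names in the usage note, are placeholders rather than anything from this thread.

```shell
# Hypothetical helper: build an SPL query that reads the cached results of a
# scheduled saved search rather than re-running it.
loadjob_query() {
    local owner="$1" app="$2" search_name="$3"
    printf '| loadjob savedsearch="%s:%s:%s"\n' "$owner" "$app" "$search_name"
}
```

For example, `loadjob_query admin search core_function` prints `| loadjob savedsearch="admin:search:core_function"`, which could serve as the base search of a dashboard panel or alert, provided the saved search is scheduled.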

If this reply helps you, Karma would be appreciated.

Hi

Thanks for the reply.

A few questions, if that's OK?

We plan to put in 3 indexers and one search head. However, if I understand the issue correctly, the dispatch folder is on the search head, so the job count will still stay high there. I don't understand how doing this will help?

I will have to look into "loadjob". If I do this, I assume I will have to turn off my dispatch cleanup script, as otherwise the job will be deleted. Or is there a way to keep the job so my script won't remove it?

Thanks

Robert

Yes, the dispatch folder is on the SH.

Separating the SH and the indexer divides the workload. Indexing and searching then run on different servers, which reduces the CPU use on each.

You don't want to delete the search results when using loadjob. Better to let Splunk manage them.
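If a cleanup script does stay in place for a while, one way to keep it away from the artifacts that `loadjob` reads might look like the sketch below. It assumes that job directories created by scheduled searches have names beginning with `scheduler_` (worth verifying on your own instance), and it reuses the `save` marker check from the script earlier in the thread. `is_protected_job` is a hypothetical name.

```shell
# Hypothetical predicate: succeed if a dispatch job directory should be left alone.
is_protected_job() {
    local job_dir="$1"
    local name
    name=$(basename "$job_dir")
    # Jobs explicitly marked as saved (same check as the original cleanup script).
    [ -e "$job_dir/save" ] && return 0
    # Assumption: artifacts of scheduled searches are named scheduler_*.
    case "$name" in
        scheduler_*) return 0 ;;
    esac
    return 1
}
```

A cleanup loop could then call `is_protected_job "$job" || rm -rf "$job"`, so that only unprotected jobs are removed.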

Consider also switching to a search head cluster to spread the searches across more servers.

If this reply helps you, Karma would be appreciated.

OK, thanks for the help. We will give it a go.