[SmartDtore] How to Analyse the CacheSize?

How can we verify a high cache churn rate?

We have configured SmartStore for our indexer cluster deployment and are observing that many searches are taking longer to run. We would like to verify whether we need to fine-tune the cache manager size.

Would you mind correcting the typos in the title and then accepting your answer? Great posts as always!

Signs that the cache is overused can be observed in the download rate:

index=_internal source=*/metrics.log* TERM(group=cachemgr_download) host="<INDEXER_NAME>" group=cachemgr_download |timechart per_second(kb) as kbps by host

If the graph shows a significant sustained download rate, then there may be a problem with the cache. For example, "kbps>30000" (roughly 30 MB per second) sustained over a period of, say, 1 day.

Another sign is the number of queued download jobs:

index=_internal source=*/metrics.log* TERM(group=cachemgr_download) host="<INDEXER_NAME>" group=cachemgr_download |timechart median(queued) as queued by host

If the graph shows sustained queued jobs of 20 or more, then there may be a problem with the cache.
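Once the timechart values are exported, the two thresholds above can be checked mechanically. The following is a minimal Python sketch and not part of Splunk: the function name `is_sustained`, the `min_fraction` parameter, and the sample values are all assumptions made for illustration. The thresholds of 30000 kbps and 20 queued jobs are the example figures quoted above.

```python
def is_sustained(samples, threshold, min_fraction=0.8):
    """Return True if at least min_fraction of the samples exceed threshold.

    "Sustained" is not defined precisely in this thread; treating it as
    "most samples in the window are above the threshold" is an assumption.
    """
    if not samples:
        return False
    above = sum(1 for value in samples if value > threshold)
    return above / len(samples) >= min_fraction


# Hypothetical kbps values for one indexer, one value per timechart bucket.
kbps = [42000, 38000, 35500, 31000, 29000, 44000]
# Hypothetical median queued download jobs for the same buckets.
queued = [3, 5, 2, 25, 4, 1]

download_problem = is_sustained(kbps, threshold=30000)
queue_problem = is_sustained(queued, threshold=20)
print("download rate suspicious:", download_problem)
print("download queue suspicious:", queue_problem)
```

A single spike (such as the one queued value of 25 here) does not trip the check; only a window where most samples are above the threshold does.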

Identifying how many searches are slow

To see how many searches are slow, you can run the following query which will allow you to categorize them into slow and fast searches. Slow searches here are defined as those that took at least twice as long due to waiting on buckets to localize from remote storage. For the following query, we pick one indexer (the host= clause in it confines us to one):

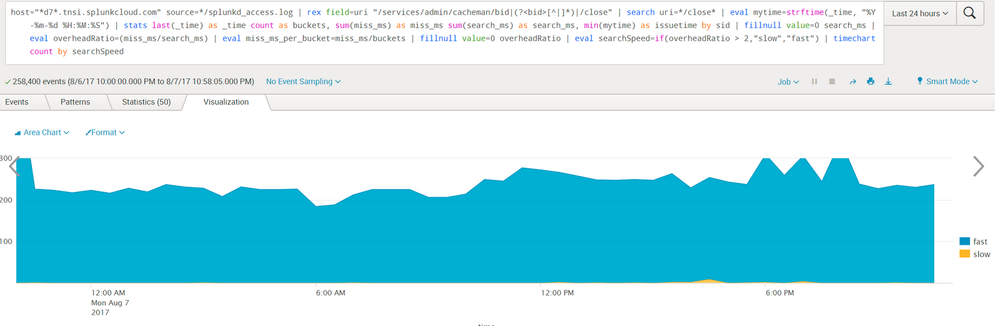

index=_internal source=*/splunkd_access.log* host="<INDEXER_NAME>" | rex field=uri "/services/admin/cacheman/bid|(?<bid>[^|]*)|/close" | search uri=*/close* | eval mytime=strftime(_time, "%Y-%m-%d %H:%M:%S") | stats last(_time) as _time count as buckets, sum(miss_ms) as miss_ms sum(search_ms) as search_ms, min(mytime) as issuetime by sid | fillnull value=0 search_ms | eval overheadRatio=(miss_ms/search_ms) | eval miss_ms_per_bucket=miss_ms/buckets | fillnull value=0 overheadRatio | eval searchSpeed=if(overheadRatio > 2,"slow","fast") | timechart count by searchSpeed

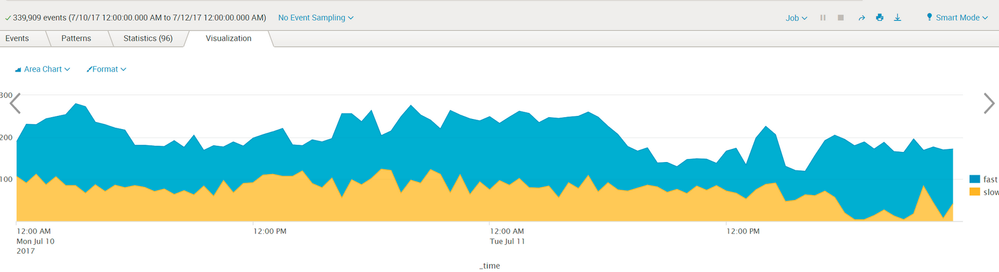

significant search slowness we observed a graph that looked like this (showing a large proportion of slow searches):

After some adjustments to the eviction policy (see below), the number of slow searches significantly decreased, though they did not entirely disappear:

If you modify the last eval in the search above, you can look at individual searches, listed by search ID (sid).
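The slow/fast classification in that query comes down to one ratio per search. Here is a small Python sketch of the same arithmetic, for illustration only: the function name `classify_search` and the sample sids and timings are hypothetical. Treating a search with no recorded search time as ratio 0 is my reading of the `fillnull value=0 overheadRatio` step, not something confirmed elsewhere in the thread.

```python
def classify_search(miss_ms, search_ms, threshold=2):
    """Mirror the eval logic: overheadRatio = miss_ms / search_ms."""
    # Assumption: when search_ms is 0 the ratio is undefined, and the SPL
    # query then fills it with 0, so such a search ends up as "fast".
    ratio = miss_ms / search_ms if search_ms else 0.0
    speed = "slow" if ratio > threshold else "fast"
    return speed, ratio


# Hypothetical per-search totals: sid -> (miss_ms, search_ms).
searches = {
    "sid_a": (9000, 3000),
    "sid_b": (500, 4000),
    "sid_c": (1200, 0),
}

results = {sid: classify_search(m, s) for sid, (m, s) in searches.items()}

# List individual searches, worst overhead first.
for sid, (speed, ratio) in sorted(results.items(), key=lambda kv: -kv[1][1]):
    print(sid, speed, round(ratio, 2))
```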

Identifying searches that go back far in time

The problem with the cache can be traced to searches that span far back in time, requiring download of buckets from S3. The following query can be used to identify all searches, including the SPL, whose earliest time is older than 7 days (again, searching a single indexer):

index=_internal host="<host_name>" source=*/splunkd_access.log | rex field=uri "/services/admin/cacheman/bid|(?<bid>[^|]*)|/close" | search uri=*/close* | eval mytime=strftime(_time, "%Y-%m-%d %H:%M:%S") | stats count as buckets, sum(miss_ms) as miss_ms sum(search_ms) as search_ms, min(mytime) as issuetime by sid | fillnull value=0 search_ms | eval overheadRatio=(miss_ms/search_ms) | eval miss_ms_per_bucket=miss_ms/buckets | fillnull value=0 overheadRatio | search overheadRatio > 2 | sort - overheadRatio | join sid [search host="<host_name>" source=*/remote_searches.log ("Streamed search search starting" OR "Streamed search connection terminated") NOT search_id=*_rt_* | eval et=strptime(apiEndTime, "%c") | eval st=strptime(apiStartTime, "%c") | eval howOldDays=(now()-st)/86400 | eval spanDays=(et-st)/86400 | transaction search_id startswith="Streamed search search starting" endswith="Streamed search connection terminated" | eval sid=search_id | table sid, duration, howOldDays, spanDays, search] | search howOldDays > 7

Replace <host_name> with your own indexer.

Running a large number of such searches will be problematic, although it is hard to say exactly what number counts as large.
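The `howOldDays` and `spanDays` fields in that query are simple differences of epoch times divided by 86400. A short Python sketch of the same calculation follows; the function name and the example dates are hypothetical and only illustrate the arithmetic.

```python
from datetime import datetime, timezone

SECONDS_PER_DAY = 86400


def search_age_and_span(start_epoch, end_epoch, now_epoch):
    """Return (howOldDays, spanDays) as computed by the evals in the query."""
    how_old_days = (now_epoch - start_epoch) / SECONDS_PER_DAY
    span_days = (end_epoch - start_epoch) / SECONDS_PER_DAY
    return how_old_days, span_days


def to_epoch(year, month, day):
    """Helper for the example: midnight UTC on the given date as epoch seconds."""
    return datetime(year, month, day, tzinfo=timezone.utc).timestamp()


# Hypothetical search run on 15 March covering 1 March to 5 March.
how_old, span = search_age_and_span(
    start_epoch=to_epoch(2024, 3, 1),
    end_epoch=to_epoch(2024, 3, 5),
    now_epoch=to_epoch(2024, 3, 15),
)
print(how_old, span)

# The query keeps only searches whose earliest time is older than 7 days.
reaches_far_back = how_old > 7
print(reaches_far_back)
```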

Determining work inefficiency of the eviction policy

If the methods above show that the cache has high churn (for example, a high download rate and/or a high number of queued download jobs), you can next check whether the current eviction policy works poorly for the usage pattern. From the $SPLUNK_DB directory on an indexer (the parent directory of all bucket files, usually "/opt/splunk/var/lib/splunk"), you can issue a series of shell commands to gather some statistics.

The following is an example of doing this:

$ cd /opt/splunk/var/lib/splunk

# get a list of all tsidx files, on the indexer, assuming that a single tsidx

# file approximates "bucket is in cache" state; get files' access times using

# the ls -lu; skip some hot buckets

$ find . -type f |grep tsidx$ |xargs ls -lu --time-style='+%s' |grep -v /hot_v1 > 111

# total number of buckets "in cache"

$ cat 111 |wc -l

3062

# set current time minus one day into $day_ago

$ day_ago=`perl -e 'print time - 86400'`

# number of buckets in cache that haven't been used in over a day

$ cat 111 |awk '{ if ($6<'$day_ago') print $0}' |wc -l

1697

# number of buckets in cache used within the last hour

$ hour_ago=`perl -e 'print time - 3600'`

$ cat 111 |awk '{ if ($6>'$hour_ago') print $0}' |wc -l

150

# total number of buckets (in cache or not)

$ find . -name 'db_*' -type d |wc -l

25939
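The same counts can be gathered in one pass without a temporary file. The following Python sketch is an illustrative equivalent of the shell commands above, not a Splunk tool: the function name `cache_usage_stats` and the returned dictionary keys are made up for this example. As with the shell version, it assumes that a tsidx file approximates "bucket is in cache" and that the filesystem actually records access times (mount options such as noatime would make the numbers meaningless).

```python
import os
import time


def cache_usage_stats(root, now=None):
    """Count tsidx files under root by how recently they were accessed."""
    now = time.time() if now is None else now
    day_ago = now - 86400
    hour_ago = now - 3600
    stats = {"in_cache": 0, "idle_over_day": 0, "used_last_hour": 0}

    for dirpath, _dirnames, filenames in os.walk(root):
        rel_parts = os.path.relpath(dirpath, root).split(os.sep)
        # Skip hot buckets, like the "grep -v /hot_v1" step.
        if any(part.startswith("hot_v1") for part in rel_parts):
            continue
        for name in filenames:
            if not name.endswith("tsidx"):
                continue
            atime = os.stat(os.path.join(dirpath, name)).st_atime
            stats["in_cache"] += 1
            if atime < day_ago:
                stats["idle_over_day"] += 1
            if atime > hour_ago:
                stats["used_last_hour"] += 1
    return stats


if __name__ == "__main__":
    # Default path taken from the example above; adjust for your install.
    splunk_db = os.environ.get("SPLUNK_DB", "/opt/splunk/var/lib/splunk")
    print(cache_usage_stats(splunk_db))
```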

We collected four different metrics. Two different percentages are important here.

Percentage of buckets used within the last hour (metric 1): 150/3062 = 4.9%

Percentage of buckets not used within the last day (metric 2): 1697/3062 = 55.4%

For the example above, during the period of high churn, the "number of buckets not used within the last day" was about 55% (metric 2 above), and the "number of buckets used within the last hour" was only about 4.9% (metric 1 above). If you see similar numbers, it may be wise to adjust the eviction configuration.
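For reference, the percentages can be reproduced from the four counts collected by the shell commands. This is a plain arithmetic sketch in Python; the variable names and the helper `pct` are illustrative only.

```python
def pct(part, total):
    """Percentage of part in total, rounded to one decimal place."""
    return round(100.0 * part / total, 1)


# Counts taken from the shell session above.
in_cache = 3062        # tsidx files found, i.e. buckets "in cache"
idle_over_day = 1697   # cached buckets not accessed for over a day
used_last_hour = 150   # cached buckets accessed within the last hour
all_buckets = 25939    # all bucket directories, cached or not

metric_1 = pct(used_last_hour, in_cache)
metric_2 = pct(idle_over_day, in_cache)
cached_share = pct(in_cache, all_buckets)

print(metric_1)      # 4.9
print(metric_2)      # 55.4
print(cached_share)  # 11.8
```

The last figure is not discussed in the answer, but it shows that only about one bucket in nine was resident in the cache at the time of the measurement.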

If you have determined that there is a high cache churn rate, yet the exercise described in this subsection shows that the majority of the buckets (say, well over 50%) have been used during the last 24 hours, then the problem is simply that there is not enough cache to satisfy the users' use cases. In that case, the option is to increase the total cache available. You can do this in either or both of these ways: by expanding the number of indexers, or by swapping the existing indexer instances for ones with larger ephemeral storage. The key metric that you are trying to increase with this action is the total cache size on the cluster, which is the sum of the sizes of the ephemeral storage across all indexers. The CacheManager should be configured to use up to 85% of the available storage, according to the latest recommendations.
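To compare the two scaling options, it can help to compute the total usable cache before and after. The Python sketch below does that under stated assumptions: the indexer counts and per-indexer ephemeral storage sizes are invented for the example, and the function name is hypothetical. Only the 85% figure comes from the recommendation mentioned above.

```python
def usable_cluster_cache_gb(ephemeral_gb_per_indexer, usable_percent=85):
    """Sum ephemeral storage across indexers and apply the usable share."""
    return sum(ephemeral_gb_per_indexer) * usable_percent / 100


# Hypothetical current cluster: 10 indexers with 1900 GB of ephemeral storage each.
current = usable_cluster_cache_gb([1900] * 10)

# Option 1: add 5 more indexers of the same type.
more_indexers = usable_cluster_cache_gb([1900] * 15)

# Option 2: keep 10 indexers but move to instances with 3800 GB each.
bigger_instances = usable_cluster_cache_gb([3800] * 10)

print(current, more_indexers, bigger_instances)
```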