The way to send files from Universal Forwarder to...


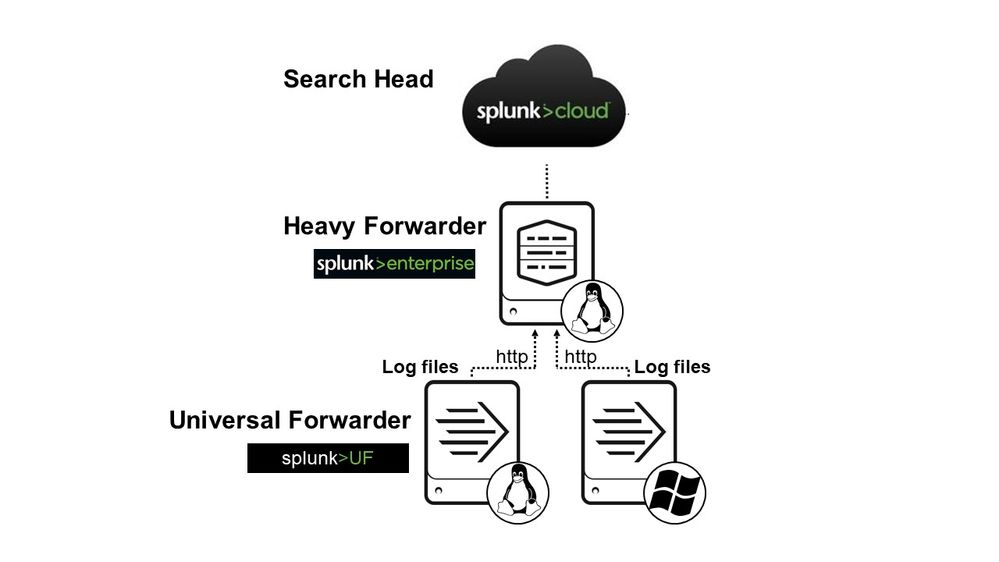

Is there a way to transfer log files using a Universal Forwarder?

I have to use a Heavy Forwarder to extract fields from complicated log text, so I need to send the logs as whole files from the machines that generate them to the Heavy Forwarder.

If it's possible, could you tell me how to do it and which directory I should check on the Heavy Forwarder machine?

The architecture is shown in the attached photo.




Hi @smart111,

when using Splunk Cloud, it's always a best practice to use a Heavy Forwarder (better still, at least two HFs, to avoid a single point of failure) to concentrate logs before sending them to the Cloud.

So you have to configure your Universal Forwarders to send their logs to the HF as if it were an Indexer.
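For illustration only, here is a minimal sketch of the two files involved, rendered by a small Python script using the standard-library `configparser`. The host names `hf1.example.com` and `hf2.example.com` and the group name `heavy_forwarders` are hypothetical, and port 9997 is only the conventional receiving port. The stanzas `[tcpout]` and `[tcpout:<group>]` (attributes `defaultGroup`, `server`) in outputs.conf and `[splunktcp://<port>]` in inputs.conf are the standard Splunk ones, but check them against the docs for your version. Listing both HFs in one target group lets each UF load-balance between them, which is what removes the single point of failure.

```python
import configparser
import io


def render(sections: dict[str, dict[str, str]]) -> str:
    """Render a dict of stanzas as Splunk-style .conf text."""
    # interpolation=None so "$" or "%" in values are written literally.
    parser = configparser.ConfigParser(interpolation=None)
    # Keep attribute names case-sensitive (configparser lowercases by default).
    parser.optionxform = str
    for stanza, attrs in sections.items():
        parser[stanza] = attrs
    buf = io.StringIO()
    parser.write(buf)
    return buf.getvalue()


# Hypothetical host names; 9997 is the usual forwarder receiving port.
HEAVY_FORWARDERS = ["hf1.example.com:9997", "hf2.example.com:9997"]

# outputs.conf on each Universal Forwarder: send everything to the HF group,
# exactly as if the HFs were indexers.
uf_outputs = render({
    "tcpout": {"defaultGroup": "heavy_forwarders"},
    "tcpout:heavy_forwarders": {"server": ",".join(HEAVY_FORWARDERS)},
})

# inputs.conf on each Heavy Forwarder: listen for traffic from the UFs.
hf_inputs = render({
    "splunktcp://9997": {"disabled": "0"},
})

if __name__ == "__main__":
    print("# outputs.conf (UF)\n" + uf_outputs)
    print("# inputs.conf (HF)\n" + hf_inputs)
```

The printed text would go into outputs.conf on each UF and inputs.conf on each HF, for example inside an app pushed by a deployment server.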

On the HF, you have to forward all logs to the Cloud, following the instructions you downloaded from Splunk Cloud.

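The only thing to pay attention to is that your logs must be parsed on the HF, not in the Cloud: once the HF has parsed the data, the Cloud indexers don't parse it again.

In other words, you have to put on the HF all the props.conf and transforms.conf settings your applications need: not the search-time field extractions, but all the other parsing jobs (line breaking, timestamp recognition, index-time transforms and so on).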
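As a hedged example of such a parsing job, the sketch below renders a props.conf/transforms.conf pair for the HF that copies two directory names out of each event's source path into index-time fields. It assumes the path layout `/var/opt/splunk/<c_code>/<application>/<file>.log` mentioned later in this thread. The transform name `path_fields` is hypothetical. `TRANSFORMS-<class>`, `SOURCE_KEY = MetaData:Source`, `REGEX`, `FORMAT` and `WRITE_META` are standard index-time transform settings.

```python
import configparser
import io


def render(sections: dict[str, dict[str, str]]) -> str:
    """Render stanzas as Splunk-style .conf text (case-sensitive keys, no interpolation)."""
    parser = configparser.ConfigParser(interpolation=None)
    parser.optionxform = str
    for stanza, attrs in sections.items():
        parser[stanza] = attrs
    buf = io.StringIO()
    parser.write(buf)
    return buf.getvalue()


# props.conf on the HF: apply the transform to files under the assumed layout
# /var/opt/splunk/<c_code>/<application>/<file>.log ("*" matches one path segment).
hf_props = render({
    "source::/var/opt/splunk/*/*/*.log": {
        "TRANSFORMS-path_fields": "path_fields",
    },
})

# transforms.conf on the HF: read the source path instead of the raw event text
# and write the two captured directory names as indexed fields.
hf_transforms = render({
    "path_fields": {
        "SOURCE_KEY": "MetaData:Source",
        "REGEX": r"/var/opt/splunk/([^/]+)/([^/]+)/[^/]+\.log$",
        "FORMAT": "c_code::$1 application::$2",
        "WRITE_META": "true",
    },
})

if __name__ == "__main__":
    print("# props.conf (HF)\n" + hf_props)
    print("# transforms.conf (HF)\n" + hf_transforms)
```

For searches to treat `c_code` and `application` as indexed fields, fields.conf on the search side would normally also declare them with `INDEXED = true`. The `_time` part of the file name is left out, since its format isn't given.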

Ciao.

Giuseppe


Thank you for answering, @gcusello!

Using the approach you suggested, is it possible to extract fields from file paths on the UF/HF machine?

Originally, I extracted the fields "_time", "application" and "c_code" on the Heavy Forwarder from paths like "/var/opt/splunk/{$c_code}/{$application}/{$_time}.log". That's why I wondered whether there is a way to send log files from the UF to the HF so that the files are stored somewhere on the HF machine.

But from your advice, I understand that forwarding files from the UF to the HF no longer works this way as long as the logs are assigned to an index at the UF. Is that correct?


Hi @smart111,

logs aren't indexed on UFs; they are indexed on the Indexers.

The data flow is the following:

- logs are ingested by the UFs, which can attach the main metadata from their conf files (index, sourcetype, host, source);

- then the logs are sent to the HF, where they are parsed (not stored);

- then they are sent to the Indexers (in the Cloud), where they are indexed.
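To make the flow above concrete, here is a toy Python model of it. It is not Splunk code and it simplifies heavily: the UF only reads the file and sends unparsed blocks tagged with metadata, the HF does the parsing (reduced here to breaking the stream into one event per line) and keeps nothing except an incomplete last line, and the indexer is just a dict keyed by index. All class and function names, and the sample host, index and sourcetype values, are made up for the illustration.

```python
from dataclasses import dataclass
from typing import Iterable, Iterator


@dataclass
class Chunk:
    """A block of unparsed file data plus the metadata the UF attaches."""
    data: str
    meta: dict[str, str]  # index, sourcetype, host, source


@dataclass
class Event:
    """One parsed event as the indexer receives it."""
    raw: str
    meta: dict[str, str]


def universal_forwarder(source: str, content: str, host: str, index: str,
                        sourcetype: str, block_size: int = 16) -> Iterator[Chunk]:
    """Ingest only: cut the file into fixed-size blocks, no event breaking."""
    meta = {"index": index, "sourcetype": sourcetype, "host": host, "source": source}
    for start in range(0, len(content), block_size):
        yield Chunk(content[start:start + block_size], meta)


def heavy_forwarder(chunks: Iterable[Chunk]) -> Iterator[Event]:
    """Parse: rebuild the stream and break it into one event per line.

    Events are passed straight on; the only thing held in memory is the
    incomplete last line of the stream (a single source is assumed).
    """
    pending, meta = "", None
    for chunk in chunks:
        meta = chunk.meta
        pending += chunk.data
        *complete, pending = pending.split("\n")
        for line in complete:
            if line:  # skip empty lines
                yield Event(line, meta)
    if pending and meta is not None:
        yield Event(pending, meta)


def indexer(events: Iterable[Event]) -> dict[str, list[Event]]:
    """Index: store each event under its index name."""
    store: dict[str, list[Event]] = {}
    for event in events:
        store.setdefault(event.meta["index"], []).append(event)
    return store


if __name__ == "__main__":
    log = "first event\nsecond event\nthird event\n"
    stored = indexer(heavy_forwarder(universal_forwarder(
        "/var/opt/splunk/JP01/billing/20240101.log", log,
        host="app01", index="main", sourcetype="app_log")))
    for event in stored["main"]:
        print(event.meta["source"], "|", event.raw)
```

Note that the `source` path set by the UF is still attached to every event when it reaches the HF, even though the file itself never exists there.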

So field extraction can be done either at index time on the HF, with the fields stored in the Indexers, or at search time on the Search Heads.

On the HF you have to configure the parsing of all the fields you want to extract at index time.

Logs aren't stored on the HF: they are parsed there and then sent to the Indexers.

Ciao.

Giuseppe


Thank you for answering, @gcusello

I understand the structure.

So I should extract the fields related to the file path on the HF, by parsing the source information.
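A quick way to check a path regex before putting it into transforms.conf on the HF is to test it offline. Because the UF records each monitored file's full path in the `source` field and that metadata travels with every event, the HF can still parse it even though the file itself is never stored there. The pattern below assumes the layout `/var/opt/splunk/<c_code>/<application>/<time>.log` from this thread. The sample paths are made up, and because the time format in the file name is unknown, it is only captured as text.

```python
import re
from typing import Optional

# Assumed layout: /var/opt/splunk/<c_code>/<application>/<time>.log
# search() (not match()) is used so a leading "source::" prefix doesn't matter.
PATH_PATTERN = re.compile(
    r"/var/opt/splunk/(?P<c_code>[^/]+)/(?P<application>[^/]+)/(?P<time>[^/]+)\.log$"
)


def fields_from_source(source: str) -> Optional[dict[str, str]]:
    """Return the fields encoded in a source path, or None if it doesn't fit the layout."""
    match = PATH_PATTERN.search(source)
    return match.groupdict() if match else None


if __name__ == "__main__":
    # Hypothetical sample paths.
    for path in ["/var/opt/splunk/JP01/billing/20240101.log", "/var/log/messages"]:
        print(path, "->", fields_from_source(path))
```

This prints `{'c_code': 'JP01', 'application': 'billing', 'time': '20240101'}` for the first path and `None` for the second.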

Thanks a lot!