
Help on custom sourcetype for log parsing


Hello,

I would like some help creating a custom sourcetype for parsing a JSON log file.

The file's output is valid JSON (the jq checks all come back good). However, when I use the default _json sourcetype, the Splunk event gets cut off at line 349 (the entire file is 392 lines). The other problem with the standard _json sourcetype is that it isn't fully "color coding" the KVs, but that could be because the closing brackets aren't in the Splunk event, since it was cut off at line 349.

So my solution was to try to create a custom sourcetype (cloned from _json), I'm calling it "ibcapacity". I've tried to edit the settings under this new sourcetype but my results are even more broken.

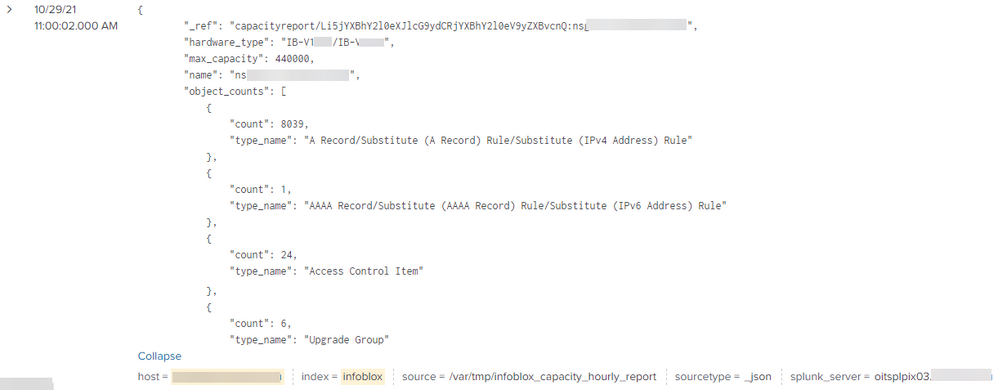

Here is the event when searched in the standard _json sourcetype:

However, the rest of the file has this at the end (past line 349), which doesn't show up in the Splunk event:

```
],
"percent_used": 120,
"role": "Grid Master",
"total_objects": 529020
}
]
```

Can this community please help identify what the correct settings should be for my custom sourcetype, ibcapacity? And why is the Splunk event getting cut off at line 349 when using sourcetype=_json?

Thank you.


Yes, it was the TRUNCATE setting. My entire JSON file is more than 10,000 characters, which exceeds TRUNCATE = 10000, so once I set TRUNCATE = 15000, it worked.

Thanks.
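A minimal props.conf sketch of that fix, assuming it goes into the same local props.conf on the universal forwarder that already defines [ibcapacity] (with INDEXED_EXTRACTIONS, the UF does the parsing, which is why changing it there worked). TRUNCATE is measured in bytes per event. Per the props.conf spec, TRUNCATE = 0 disables truncation entirely, which removes this limit but also the safeguard against runaway events.

```ini
# Local props.conf on the universal forwarder that monitors the file.
# The exact directory is an assumption; it must be a props.conf the UF actually loads.
[ibcapacity]
# Maximum event size in bytes. The default of 10000 cut this file off at
# line 349; 15000 leaves headroom for the full 392-line report.
TRUNCATE = 15000
```

Restart the UF after changing props.conf so the new value is picked up.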


I tried something here: I put the props.conf in ~local on the Splunk UF host where the script runs:

[ibcapacity]

ADD_EXTRA_TIME_FIELDS = True

ANNOTATE_PUNCT = True

AUTO_KV_JSON = true

BREAK_ONLY_BEFORE =

BREAK_ONLY_BEFORE_DATE =

CHARSET = AUTO

DATETIME_CONFIG =

DEPTH_LIMIT = 1000

DETERMINE_TIMESTAMP_DATE_WITH_SYSTEM_TIME = false

HEADER_MODE =

INDEXED_EXTRACTIONS = json

KV_MODE = none

LB_CHUNK_BREAKER_TRUNCATE = 2000000

LEARN_MODEL = true

LEARN_SOURCETYPE = true

LINE_BREAKER = ([\r\n]+)

LINE_BREAKER_LOOKBEHIND = 100

MATCH_LIMIT = 100000

MAX_DAYS_AGO = 2000

MAX_DAYS_HENCE = 2

MAX_DIFF_SECS_AGO = 3600

MAX_DIFF_SECS_HENCE = 604800

MAX_EVENTS = 512

MAX_TIMESTAMP_LOOKAHEAD = 128

MUST_BREAK_AFTER =

MUST_NOT_BREAK_AFTER =

MUST_NOT_BREAK_BEFORE =

NO_BINARY_CHECK = true

SEGMENTATION = indexing

SEGMENTATION-all = full

SEGMENTATION-inner = inner

SEGMENTATION-outer = outer

SEGMENTATION-raw = none

SEGMENTATION-standard = standard

SHOULD_LINEMERGE = false

TRANSFORMS =

TRUNCATE = 10000

category = Structured

description = JavaScript Object Notation format. For more information, visit http://json.org/

detect_trailing_nulls = false

disabled = false

maxDist = 100

priority =

pulldown_type = true

sourcetype =

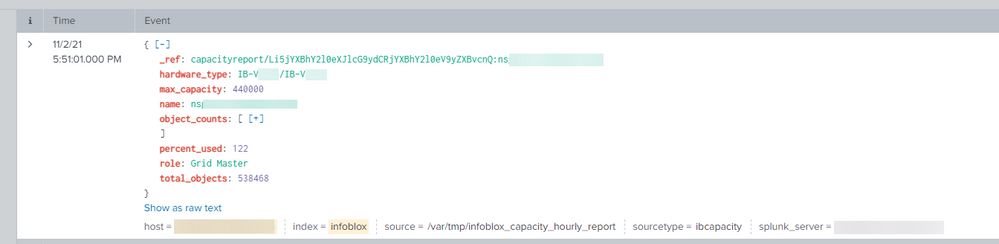

termFrequencyWeightedDist = falseand then restarted the Splunk UF on the host, and ran the script again...it did do the same exact thing as the _json sourcetype, where it cut it off at 349 lines. So then I deleted about 60 lines and ensured that the brackets all closed, let the Splunk UF read the file again, and now it did perform the correct _json like parsing for the custom sourcetype, ibcapacity:

Is there any way to ensure that Splunk reads the entire 392 lines?
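A rough check, assuming every line is about the same length and that the cutoff happens exactly at the default TRUNCATE = 10000 bytes per event, is consistent with both observations: the full file overflows the limit, while the file trimmed by about 60 lines fits under it.

$$
\begin{aligned}
\text{bytes per line} &\approx \frac{10000}{349} \approx 28.65\\
\text{full file} &\approx 392 \times 28.65 \approx 11{,}230 \text{ bytes} > 10000\\
\text{trimmed file} &\approx (392 - 60) \times 28.65 \approx 9{,}510 \text{ bytes} < 10000
\end{aligned}
$$

Under these assumptions, any TRUNCATE comfortably above roughly 11,230 bytes (for example 15000) should keep the whole report in one event; the real byte count depends on actual line lengths and encoding.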


Hi,

Can you run these and check how they differ?

```
splunk btool props list _json
splunk btool props list ibcapacity
```

Probably something is missing from your new sourcetype?

r. Ismo


Hi @isoutamo,

Here is the output for _json:

```ini
[_json]
ADD_EXTRA_TIME_FIELDS = True
ANNOTATE_PUNCT = True
AUTO_KV_JSON = true
BREAK_ONLY_BEFORE =
BREAK_ONLY_BEFORE_DATE = True
CHARSET = UTF-8
DATETIME_CONFIG =
DEPTH_LIMIT = 1000
DETERMINE_TIMESTAMP_DATE_WITH_SYSTEM_TIME = false
HEADER_MODE =
INDEXED_EXTRACTIONS = json
KV_MODE = none
LB_CHUNK_BREAKER_TRUNCATE = 2000000
LEARN_MODEL = true
LEARN_SOURCETYPE = true
LINE_BREAKER = ([\r\n]+)
LINE_BREAKER_LOOKBEHIND = 100
MATCH_LIMIT = 100000
MAX_DAYS_AGO = 2000
MAX_DAYS_HENCE = 2
MAX_DIFF_SECS_AGO = 3600
MAX_DIFF_SECS_HENCE = 604800
MAX_EVENTS = 512
MAX_TIMESTAMP_LOOKAHEAD = 128
MUST_BREAK_AFTER =
MUST_NOT_BREAK_AFTER =
MUST_NOT_BREAK_BEFORE =
NO_BINARY_CHECK = true
SEGMENTATION = indexing
SEGMENTATION-all = full
SEGMENTATION-inner = inner
SEGMENTATION-outer = outer
SEGMENTATION-raw = none
SEGMENTATION-standard = standard
SHOULD_LINEMERGE = True
TRANSFORMS =
TRUNCATE = 10000
category = Structured
description = JavaScript Object Notation format. For more information, visit http://json.org/
detect_trailing_nulls = false
disabled = false
maxDist = 100
priority =
pulldown_type = true
sourcetype =
termFrequencyWeightedDist = false
```

And here is the output for ibcapacity:

```ini
[ibcapacity]
ADD_EXTRA_TIME_FIELDS = True
ANNOTATE_PUNCT = True
AUTO_KV_JSON = true
BREAK_ONLY_BEFORE =
BREAK_ONLY_BEFORE_DATE = true
CHARSET = UTF-8
DATETIME_CONFIG =
DEPTH_LIMIT = 1000
DETERMINE_TIMESTAMP_DATE_WITH_SYSTEM_TIME = false
HEADER_MODE =
INDEXED_EXTRACTIONS = none
KV_MODE = none
LB_CHUNK_BREAKER_TRUNCATE = 2000000
LEARN_MODEL = true
LEARN_SOURCETYPE = true
LINE_BREAKER = ([\r\n]+)
LINE_BREAKER_LOOKBEHIND = 100
MATCH_LIMIT = 100000
MAX_DAYS_AGO = 2000
MAX_DAYS_HENCE = 2
MAX_DIFF_SECS_AGO = 3600
MAX_DIFF_SECS_HENCE = 604800
MAX_EVENTS = 512
MAX_TIMESTAMP_LOOKAHEAD = 128
MUST_BREAK_AFTER =
MUST_NOT_BREAK_AFTER =
MUST_NOT_BREAK_BEFORE =
NO_BINARY_CHECK = true
SEGMENTATION = indexing
SEGMENTATION-all = full
SEGMENTATION-inner = inner
SEGMENTATION-outer = outer
SEGMENTATION-raw = none
SEGMENTATION-standard = standard
SHOULD_LINEMERGE = true
TRANSFORMS =
TRUNCATE = 10000
category = Structured
description = Infoblox capacity report from host
detect_trailing_nulls = false
disabled = false
maxDist = 100
priority =
pulldown_type = 1
sourcetype =
termFrequencyWeightedDist = false
```

Even if I duplicate _json's exact settings for ibgrid, and assuming that works, the event itself still gets cut off at line 349. Is there a reason for this?

Thanks.


When you diff those definitions, you see a difference:

```
12c12
< INDEXED_EXTRACTIONS = none
---
> INDEXED_EXTRACTIONS = json
```

Just add the second line to your new definition, and then it will probably work.

r. Ismo
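A sketch of the one-line change suggested above, assuming the ibcapacity stanza lives in a local props.conf on the UF that monitors the file. Structured-data settings such as INDEXED_EXTRACTIONS take effect where the file is read, so setting this only on an indexer or search head would not change how the UF handles the file. KV_MODE = none, which both btool outputs already show, avoids extracting the same JSON fields a second time at search time.

```ini
[ibcapacity]
# Parse the file as JSON where it is read (the value _json uses; ibcapacity had "none").
INDEXED_EXTRACTIONS = json
# Fields are already extracted at index time, so skip search-time KV extraction.
KV_MODE = none
```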


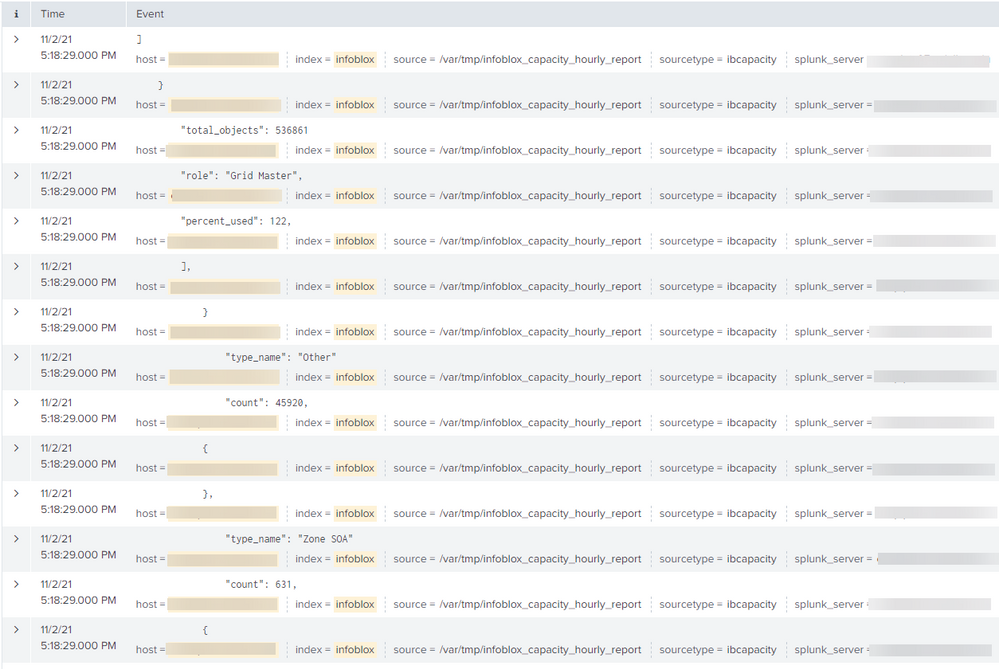

Thanks for the suggestion, I did update the settings on my custom sourcetype, ibcapacity, where the btool command was identical to that of _json's btool command, and it was doing exactly as before, linebreaking every line:

I'm sure there is a line break issue here, but I'm not sure how to implement it.
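If the goal is simply to keep each report together as one event, one alternative sketch drops indexed extractions and relies on line breaking plus search-time JSON extraction. This is a swapped-in technique, not what resolved this thread. It assumes the script writes one pretty-printed top-level JSON array per run, so that a "[" at the very start of a line only appears where a new report begins. Without INDEXED_EXTRACTIONS, line breaking and TRUNCATE happen on the indexer (or a heavy forwarder) rather than the UF, and KV_MODE applies on the search head, so the stanza would need to go there. The regex and the layout assumption are illustrative; test on a non-production index first.

```ini
[ibcapacity]
# Alternative sketch: assumes INDEXED_EXTRACTIONS is NOT set for ibcapacity
# anywhere in the btool output; JSON is parsed at search time instead.
KV_MODE = json
# Do not re-merge lines afterwards; rely on LINE_BREAKER alone.
SHOULD_LINEMERGE = false
# Break only on newlines followed by "[" in column 0 (assumed start of a new
# top-level array). The first capture group (the newlines) is discarded.
LINE_BREAKER = ([\r\n]+)\[
# Still needed: the whole report is over 10,000 bytes.
TRUNCATE = 15000
```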


@isoutamo wrote:

> This works ok if you have set sourcetype as _json?

Yes and no. It works to display the log file as an event, but it cuts the event off at line 349, while the file itself is 392 lines.