- Splunk Answers

- :

- Splunk Administration

- :

- Getting Data In

- :

- extract key value pairs and timestamp field from n...

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark Topic

- Subscribe to Topic

- Mute Topic

- Printer Friendly Page

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

extract key value pairs and timestamp field from nested json

Hi,

I need to read the below json file in python script and send each json to splunk.

[[[

with open('sampledata.json') as jsond:

jsondata = json.load(jsond)

for line in jsondata:

target.submit(event=str(line),sourcetype=sourcetype)

time.sleep(5)

]]]

How to split each key value pair in json to "search fields" in splunk and also extract the "timestamp" field from json to be used by splunk as event timestamp?

[

{

"id": 707860,

"name": "Hurzuf",

"country": "UA",

"timestamp":"2017-09-02 06:44:14",

"coord": {

"lon": 34.283333,

"lat": 44.549999

},

"ports":[

{

"port": 1,

"utilization": 140,

"error": {

"tx": 1000.00,

"rx": 500

}

},

{

"port": 2,

"utilization": 110,

][1] "error": {

"tx": 1002.00,

"rx": 420

}

}

]

},

{

"id": 519188,

"name": "Novinki",

"country": "RU",

"timestamp":"2017-09-03 06:50:14",

"coord": {

"lon": 37.666668,

"lat": 55.683334

},

"ports":[

{

"port": 1,

"utilization": 120,

"error": {

"tx": 1020.00,

"rx": 400

}

},

{

"port": 2,

"utilization": 120,

"error": {

"tx": 1002.00,

"rx": 400

}

}

]

}

]

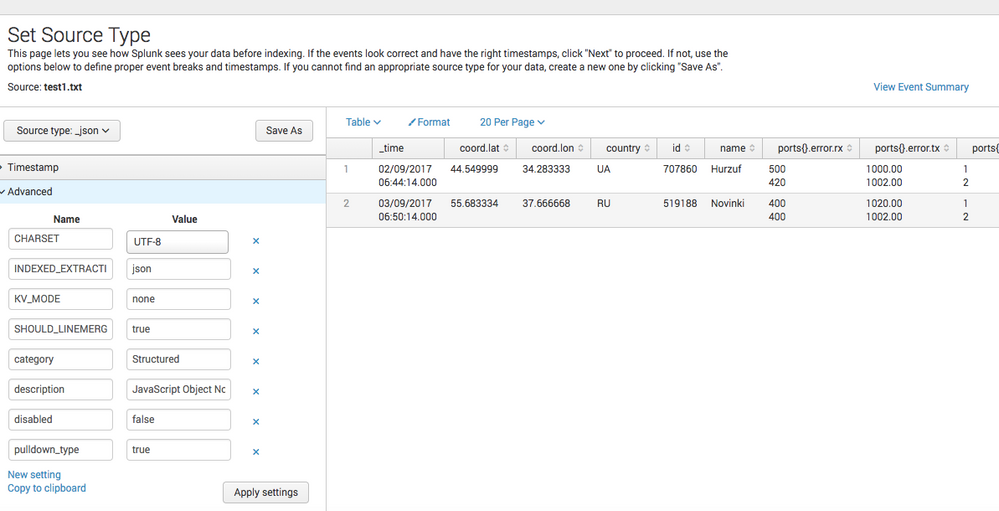

If I directly upload the json file the screen looks like the image attached.

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @sawgata12345,

Based on sample data I have uploaded below sample data in splunk with _json sourcetype and it is working fine and extracting fields properly.

Sample data:

[

{

"id": 707860,

"name": "Hurzuf",

"country": "UA",

"timestamp":"2017-09-02 06:44:14",

"coord": { "lon": 34.283333, "lat": 44.549999 },

"ports": [ { "port": 1, "utilization": 140, "error": { "tx": 1000.00, "rx": 500 } }, { "port": 2, "utilization": 110, "error": { "tx": 1002.00, "rx": 420 } } ]

},

{

"id": 519188,

"name": "Novinki",

"country": "RU",

"timestamp":"2017-09-03 06:50:14",

"coord": { "lon": 37.666668, "lat": 55.683334 },

"ports":[ { "port": 1, "utilization": 120, "error": {"tx": 1020.00, "rx": 400 } },

{ "port": 2, "utilization": 120, "error": { "tx": 1002.00, "rx": 400 } } ]

}

]

Screenshot:

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@harsmarvania57

see the ports{}:error,rx

it should come as port1:error:rx | port1:error:tx|port2:error:rx|port2:error:tx etc

and even this much also not coming when i upload the same data from python code as in my query above.

If sending from python code whole line is coming as event with current time as timestamp

timestamp | event

XXXXXX | { "id": 707860, "name": "Hurzuf", "country": "UA", "timestamp":"2017-09-02 06:44:14",

"coord": { "lon": 34.283333, "lat": 44.549999 }, "ports": [ { "port": 1, "utilization": 140, "error": { "tx": 1000.00, "rx": 500 } }, { "port": 2, "utilization": 110, "error": { "tx": 1002.00, "rx": 420 } } ] }

XXXXXX | { "id": 707860, "name": "Hurzuf", "country": "UA", "timestamp":"2017-09-02 06:44:14",

"coord": { "lon": 34.283333, "lat": 44.549999 }, "ports": [ { "port": 1, "utilization": 140, "error": { "tx": 1000.00, "rx": 500 } }, { "port": 2, "utilization": 110, "error": { "tx": 1002.00, "rx": 420 } } ] }

It dosen't even seperate the Id,name,country which are not nested as "ports".

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

When you are sending data from python which source type are you supplying?

Have you tried with _json sourcetype?

And ports{}.error.rx is coming because ports has nested json value so it will come as ports{}.error.rx not port:error:rx

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I have created python script called test.py and I have already installed splunk-python-sdk

test.py script

import json

import splunklib.client as client

HOST = "localhost"

PORT = 8089

USERNAME = "admin"

PASSWORD = "changeme"

# Create a Service instance and log in

service = client.connect(

host=HOST,

port=PORT,

username=USERNAME,

password=PASSWORD)

with open('sampledata.json') as jsond:

data=json.load(jsond)

for line in data:

target=service.indexes["main"]

data_json=json.dumps(line)

target.submit(event=data_json,sourcetype="json")

Sample data in sampledata.json file

[

{

"id": 707860,

"name": "Hurzuf",

"country": "UA",

"timestamp":"2017-09-02 06:44:14",

"coord": { "lon": 34.283333, "lat": 44.549999 },

"ports": [ { "port": 1, "utilization": 140, "error": { "tx": 1000.00, "rx": 500 } }, { "port": 2, "utilization": 110, "error": { "tx": 1002.00, "rx": 420 } } ]

},

{

"id": 519188,

"name": "Novinki",

"country": "RU",

"timestamp":"2017-09-03 06:50:14",

"coord": { "lon": 37.666668, "lat": 55.683334 },

"ports":[ { "port": 1, "utilization": 120, "error": {"tx": 1020.00, "rx": 400 } },

{ "port": 2, "utilization": 120, "error": { "tx": 1002.00, "rx": 400 } } ]

}

]

Which is giving me perfect result, please find screenshot here https://prnt.sc/hmu3s6

If you want to rename field names from ports{} to ports: you can use rename command like this

<yourBasesearch> | rename ports{}.error.rx AS ports:error:rx, ports{}.error.tx AS ports:error:tx, ports{}.port AS ports:port, ports{}.utilization AS ports:utilization

I hope this helps.

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thats the issue, the whole json is coming in single block and the timestamp is also not extracted.

In your upload the event time is showing as the time of upload for all json and not that which is mentioned inside each json.

And see(the image which I had uploaded with the Question) how the fields get seperated if we upload whole json file manually using System->AppData->upload.

_time | coord:lat | cooord:long|country | Id |name ........

(timeshould be from the "timestamp" field in json)

This extraction is not happening form python upload, and the events are not getting segregated using the "timestamp" field inside each json.

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

You have 2 json blocks in your sample data so it will generate 2 events in splunk, still I am not clear what you are trying to say that "it is coming in single block".

Based on comment which I have provided earlier in which I have changed sourcetype in test.py

script to target.submit(event=data_json,sourcetype="custom_json")

And I have created $SPLUNK_HOME/etc/system/local/props.conf with below content for custom_json sourcetype

[custom_json]

KV_MODE = json

TIME_PREFIX = timestamp

Which is extracting timestamp correctly, please refer screenshot https://prnt.sc/hn0h1l

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

The timestamp is working fine but uploading from python code the columns are not getting splitted as it is while we upload json file from UI.

Image snapshot for data uploaded directly from splunk UI (Add Data).

https://prnt.sc/hoy25e

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

When you index anything in Splunk it will display as events only (Refer screenshot which I have provided earlier), if you want to display those data in table format in that case you need to usetable Command as I have provided earlier.

Screenshot which you have provided is displaying data when you assigning sourcetype but after that when you index it, it will display as events only (Same as screenshot which I have provided earlier)

I hope this helps.

If this clears your query then please accept my answer and upvote it.

Thanks,

Harshil

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Try selecting json related sourcetypes. There are couple of sourcetype

Also Try adding

TIME_PREFIX = \"timestamp\":\"

In the screenshot

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@mayurr98

I have already tried TIME_PREFIX = \"timestamp\":\" also. it didn't work.

As above in my query the major issue is when I am sending the data from python to splunk using splunksdk.

[[[

with open('sampledata.json') as jsond:

jsondata = json.load(jsond)

for line in jsondata:

target.submit(event=str(line),sourcetype=sourcetype)

time.sleep(5)

]]]