Hi All,

I am ingesting a comma-separated file, "filename.out", from a database server into a Splunk indexer using a Splunk forwarder.

Data ingestion is working, but I am having issues with field extraction: I do not see the fields (key-value pairs) in the Interesting Fields panel on the left-hand side of the search head.

When I forward the same data from the database server with sourcetype=csv in inputs.conf, the data is ingested correctly and the fields are extracted (they show up in the left-hand panel).

In props.conf, I copied the csv stanza and renamed it to "MyCustomSourceType", restarted the indexer instance, and forwarded the data, but the fields are not extracted.

Please note that I have a single instance acting as both search head and indexer.

Also, when I upload the data on the search head through Splunk Web using the same "MyCustomSourceType", the field extractions do show up.

Can you help me understand why the field extractions do not show up when forwarding the data with the custom sourcetype, but do show up with a Splunk Web upload? Also, why does the standard csv sourcetype work, while a copy-pasted stanza under a new sourcetype name does not? (I cross-checked the csv stanza attributes in system/local and system/default on the indexer, and they are all part of my custom sourcetype.)

I created the custom sourcetype by copying the csv sourcetype stanza.

inputs.conf (on the forwarder installed on the DB host), in the two variants I tried:

```ini
# Variant 1: WORKS (fields are extracted)
[monitor:///xyz/abx/filename.out]
disabled=false
index=xyz
sourcetype=csv
crcSalt=<SOURCE>

# Variant 2: DOES NOT EXTRACT FIELDS
[monitor:///xyz/abx/filename.out]
disabled=false
index=xyz
sourcetype=MyCustomSourceType
crcSalt=<SOURCE>
```

props.conf (on the indexer):

```ini
[csv]
SHOULD_LINEMERGE = False
pulldown_type = true
INDEXED_EXTRACTIONS = csv
KV_MODE = none
category = Structured
description = Comma-separated value format. Set header and other settings in "Delimited Settings"
DATETIME_CONFIG =
NO_BINARY_CHECK = true
disabled = false

[MyCustomSourceType]
SHOULD_LINEMERGE = False
pulldown_type = true
INDEXED_EXTRACTIONS = csv
KV_MODE = none
category = Structured
description = Comma-separated value format. Set header and other settings in "Delimited Settings"
DATETIME_CONFIG =
NO_BINARY_CHECK = true
disabled = false
```

Sample data in filename.out:

```
ID , MEMID,MEMTYP,LOGINTIME ,MEM_LOCKOUT,RSN ,MEM_UPD,IP
1234 , 2222, 1,03-14-2017 02:16:34, 1,Password Expired ,abc ,108.0.0.0
1234 , 2222, 1,03-14-2017 02:16:34, 1,Password Expired ,abc ,108.0.0.1
```

INDEXED_EXTRACTIONS is "funny" in that it is applied at the forwarder. So if you are forwarding your data to Splunk, the csv sourcetype will work, but the mimicked sourcetype will not IF you haven't also added that sourcetype to props.conf ON THE FORWARDERS.
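A minimal sketch of what that means for this setup, assuming a universal forwarder and a props.conf that the forwarder actually reads (for example `$SPLUNK_HOME/etc/system/local/props.conf`, or an app deployed to the forwarder; the location here is an assumption). The forwarder only needs the structured-data part of the stanza; `KV_MODE = none` is a search-time setting and can stay on the search head.

```ini
# props.conf on the FORWARDER (in addition to the indexer).
# INDEXED_EXTRACTIONS is applied where the monitor input runs,
# so the forwarder itself must know this sourcetype.
[MyCustomSourceType]
INDEXED_EXTRACTIONS = csv
NO_BINARY_CHECK = true
```

Restart the forwarder after adding the stanza so the new sourcetype definition is picked up.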

Is this the case for you?

Thanks,

JKat54

Thank you, jkat54. I will try this out and post an update.

Hi jkat54,

You are right: after adding the custom sourcetype to props.conf on the forwarder, I can see the extracted fields in the left-hand panel. I will accept this answer.

However, in my case I do not want to maintain props.conf on the forwarders, so I tried the approach in the link shared by DalJeanis, and that approach works for me.

Do you know why my custom sourcetype works fine and shows the fields in the left-hand panel when I upload data through Splunk Web? You already answered why it does not work when forwarding.

When you upload through Splunk Web, the props.conf that applies is the one local to where the input is (the instance you are uploading to). When you send the data through a forwarder, the props must be on the forwarder, because that is where the input is.

Thanks, jkat54, I get it now :)

I found this link informative: http://docs.splunk.com/Documentation/Splunk/6.5.3/Data/Extractfieldsfromfileswithstructureddata

The section "Caveats to extracting fields from structured data files" in the link above explains why custom sourcetypes that use structured-data extraction do not work when defined only on the indexers, which gives more insight.

Thanks, Jkat54.

I did not add them on the forwarders. I can try that, but my admin team does not recommend it; I will try it first thing when I get to work.

I could use the csv sourcetype, but in my implementation we use the sourcetype to distinguish the data for each use case, and we usually never use an out-of-the-box sourcetype as is: we copy and rename it to make it custom. I was hoping to do the same in this case.

Do you know why the standard csv sourcetype does not need this (adding it to props.conf on the forwarder), and why it works through Splunk Web?

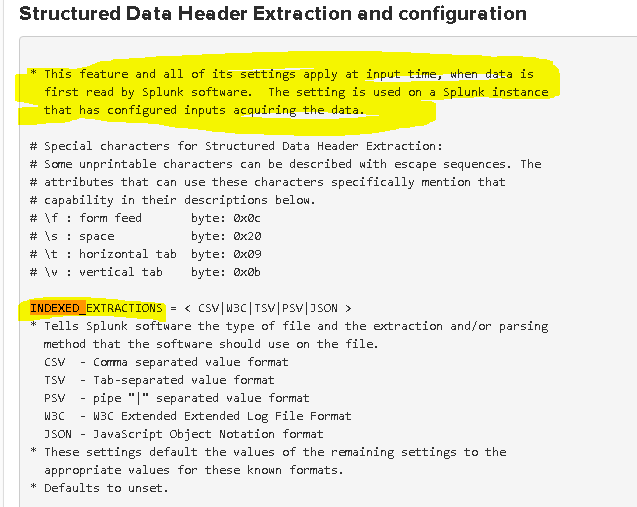

The standard csv sourcetype is already configured on all splunk instances. Your new / cloned sourcetype is only known wherever you've set it up at... and since the sourcetype uses indexed_extractions, it has to be configured at the source.

For proof that I am correct, see this document:

https://docs.splunk.com/Documentation/Splunk/latest/Admin/Propsconf#Structured_Data_Header_Extractio...

And this screenshot of the same:

Have you done this?

> Set header and other settings in "Delimited Settings"

Hi DalJeanis,

No, I did not do that. Do we need to? If it is not needed for the standard csv sourcetype, why would it be needed for a custom one? I am trying to understand.

To me, it looks like an instruction to the user. I think the "Delimited Settings" are already established for the [csv] type in various places.

Here's another thing to check first, nonstandard line breaks: https://answers.splunk.com/answers/227769/deploying-an-app-to-read-csv-files-why-is-the-univ.html

...and here's a rundown of setting one up from scratch:

https://www.splunk.com/blog/2013/03/11/quick-n-dirty-delimited-data-sourcetypes-and-you/
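A minimal sketch of the search-time delimited approach, assuming the general `REPORT-` plus `DELIMS`/`FIELDS` technique rather than the blog post's exact text. The transform name `mycustom_csv_fields` is hypothetical, and the field list is taken from the sample header. Because these are search-time settings, they live on the search head (here the single search head/indexer instance), and the forwarder's sourcetype carries no INDEXED_EXTRACTIONS, so nothing has to change on the forwarder.

```ini
# props.conf on the search head / single instance
[MyCustomSourceType]
SHOULD_LINEMERGE = false
# Apply the delimiter-based transform below at search time
REPORT-csv_fields = mycustom_csv_fields

# transforms.conf on the same instance
[mycustom_csv_fields]
# Split each event on commas and name the columns in order
DELIMS = ","
FIELDS = "ID", "MEMID", "MEMTYP", "LOGINTIME", "MEM_LOCKOUT", "RSN", "MEM_UPD", "IP"
```

The sample data has spaces around some commas, so it is worth checking whether the extracted values carry leading or trailing spaces before relying on exact matches.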

Thanks, DalJeanis, for the links. I will try these options out.

Hi DalJeanis,

Thanks for sharing the links. The approach in the "quick n dirty" post worked like a charm for me, and I will use it, since I do not want any props changes on the forwarder. Do you know how to keep the header line in the file from being indexed? I can filter it out in the search query, but I would rather not bring it in at all, since with the approach in the link we already name the fields.

If you post your previous reply as an answer, I would be happy to accept it.

I tried the PREAMBLE_REGEX attribute in props.conf on the indexer, but I guess my regex wasn't right: it still brought in the header. I will deal with it in SPL.
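A common alternative for dropping the header line before it is indexed is to route it to the nullQueue, sketched here under assumptions: the data arrives from a universal forwarder without INDEXED_EXTRACTIONS (as in the "quick n dirty" setup), so the indexer does the parsing; the transform name `drop_csv_header` is hypothetical; and the regex assumes the header starts with `ID`, optional spaces, a comma, then `MEMID`, as in the sample. As far as I understand the props.conf spec, PREAMBLE_REGEX belongs to the structured-data (INDEXED_EXTRACTIONS) settings applied at input time, which may be why it had no effect on the indexer here.

```ini
# props.conf on the indexer (where this data is parsed)
[MyCustomSourceType]
TRANSFORMS-drop_header = drop_csv_header

# transforms.conf on the indexer
[drop_csv_header]
# Matches the header line from the sample: "ID , MEMID,MEMTYP,..."
REGEX = ^ID\s*,\s*MEMID
# Send matching events to the null queue so they are discarded
DEST_KEY = queue
FORMAT = nullQueue
```

Data rows such as `1234 , 2222, ...` do not match the regex, so only the header line is discarded. This is an index-time change, so it needs an indexer restart and affects only newly indexed data.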