- Re: Why does my indexer stop responding to search ...

Hi,

We are noticing performance issues on an indexer. The server in question indexes network data, so the indexing rate is high.

Type: Physical

CPU - 32

Mem - 32 GB

Splunk Version - 6.4.1

Splunk Build - debde650d26e

Quite frequently this server stops responding to the search heads and also doesn't allow anyone to log in directly. Splunk Web goes down as well. On the search head, the following error is shown under peer status:

Error [00000080] Failed 12 out of 11 times.REST interface to peer is taking longer than 5 seconds to respond on https. Peer may be over subscribed or misconfigured. Check var/log/splunk/splunkd_access.log on the peer

Indexing never stops, however, and once the server recovers we can search the indexed data.

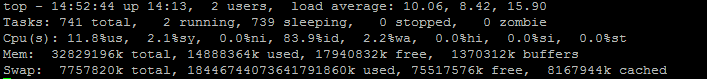

Attached is a screenshot of "top".

This shows the usage while the server is functioning normally with all connections established. During peak periods, memory usage climbs to 100%.

Has anyone experienced similar issues with indexers? And is there a way I could dive in deeper to see what is happening when the server stops responding?

Thanks,

~ Abhi

Hi Abhi, I haven't seen this in years but it was caused by runaway searches when I did see it. Your other questions imply that you have an indexer clustering environment. Is this problem only happening on a specific indexer in your cluster? If it's a standalone indexer, I'd probably focus on what kind of searches are running at the time that it breaks. The Distributed Management Console will give you a historical chart of Splunk memory usage and even what high-level component is responsible for the usage. If it turns out to be search, I would probably look in _internal for searches that were launched at the time that the memory usage started to climb.

If you want a solution for logging process memory usage that doesn't involve Splunk, I imagine everyone will have an opinion but if I needed a quick-and-dirty logging approach I'd probably do something like this:

Create a shell script that will simply write out the output of ps aux but prepend the current timestamp to each line. It will also filter the output so you'll only see processes using more than 0.1% of system memory (noise filtering):

#!/bin/bash

ps aux | gawk '{ print strftime("[%Y-%m-%d %H:%M:%S]"), $0 }' | gawk '$6 > 0.1' >> /tmp/ps.txt

Note that the timestamp prepending approach was grabbed from: http://unix.stackexchange.com/a/26729

Making the script executable and scheduling it to run every minute with cron is probably good enough. Now you have a log of process memory usage with one-minute resolution. If the system melts down again, maybe run this to see processes that were using more than 5% memory:

gawk '$6 > 5' /tmp/ps.txt

I imagine you'll see the memory usage of a particular process increase rapidly until swapping starts to grind the machine to a near-halt. It could also turn out that a lot of processes are spinning up that each use a relatively small amount of memory, so if this "search" comes up with nothing you might have to refer to the raw log.
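If scanning the raw log by eye gets tedious, a small summary along these lines might help. This is only a sketch: it assumes the log format produced by the script above (timestamp in the first two fields, PID in field 4, %MEM in field 6), and the function names `peak_mem_by_pid` and `procs_per_sample` are made up for illustration. Plain `awk` is used here since no gawk-specific functions are needed.

```shell
#!/bin/bash

# Print the highest %MEM seen for each PID, largest first,
# along with the timestamp of the sample where the peak occurred.
peak_mem_by_pid() {
    awk '{
        pid = $4; mem = $6 + 0
        if (!(pid in peak) || mem > peak[pid]) {
            peak[pid] = mem
            when[pid] = $1 " " $2
        }
    }
    END { for (pid in peak) print peak[pid], pid, when[pid] }' "$1" | sort -rn
}

# Count how many processes were logged in each sample, to spot the case
# where many small processes pile up instead of one large one.
procs_per_sample() {
    awk '{ count[$1 " " $2]++ }
         END { for (t in count) print t, count[t] }' "$1" | sort
}
```

For example, `peak_mem_by_pid /tmp/ps.txt | head` would list the heaviest processes, and `procs_per_sample /tmp/ps.txt` would show whether the process count jumps around the time of the meltdown.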

I know, very primitive and there are 1,000 better ways to do it, but it's a one-off that can be set up in 2 minutes (don't forget to delete the cron job when done!). Tested on RHEL5 and RHEL6. I'm curious about what process will end up causing the problem!
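The cron setup and cleanup mentioned above could look roughly like the following. Treat it as a sketch under assumptions: the script path `/usr/local/bin/ps-mem-log.sh` and the helper names are hypothetical, and the entry goes into the crontab of whichever user runs it.

```shell
#!/bin/bash

# Hypothetical location of the logging script; adjust to your environment.
SCRIPT="${SCRIPT:-/usr/local/bin/ps-mem-log.sh}"

# Build the crontab line that runs the script once per minute.
cron_entry() {
    printf '* * * * * %s\n' "$1"
}

# Append the entry to the current user's crontab, keeping existing entries.
install_entry() {
    ( crontab -l 2>/dev/null; cron_entry "$1" ) | crontab -
}

# Remove every crontab line mentioning the script once the investigation is over.
remove_entry() {
    crontab -l 2>/dev/null | grep -vF "$1" | crontab -
}

# Usage (nothing is installed automatically):
#   chmod +x "$SCRIPT" && install_entry "$SCRIPT"
#   remove_entry "$SCRIPT"
```

Keeping the removal step next to the install step makes it harder to forget the cron job and let `/tmp/ps.txt` grow indefinitely.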

This error can also result from clobbering an indexer's splunk.secret file. This can happen if you are trying to synchronize the file across instances so that you can push out encrypted passwords from a deployment server (DS).

Hi jtacy,

Thanks for the script. After collecting the data and sorting it by memory usage, here are the first few entries.

[2016-09-21 17:17:23] root 28579 86 92.3 38796764 30314280 Sl 16:00 66:35:00 [splunkd pid=45955] search =--id=scheduler__admin__dsa__RMD5303b1beb41b3b9a8_at_1474488000_6131

[2016-09-22 22:40:01] root 34035 66.8 89.4 38225372 29370204 Sl 21:00 66:50:00 [splunkd pid=21550] search =--id=scheduler__admin__dsa__RMD5303b1beb41b3b9a8_at_1474592400_173

[2016-09-22 22:38:51] root 34035 67.5 90.6 38208988 29762256 Sl 21:00 65:46:00 [splunkd pid=21550] search =--id=scheduler__admin__dsa__RMD5303b1beb41b3b9a8_at_1474592400_173

[2016-09-22 22:22:40] root 34035 73.3 89.5 37723612 29399444 Sl 21:00 60:37:00 [splunkd pid=21550] search =--id=scheduler__admin__dsa__RMD5303b1beb41b3b9a8_at_1474592400_173

[2016-09-21 08:15:01] root 17657 80.5 92.3 36834780 30302380 Sl 7:00 60:25:00 [splunkd pid=45955] search =--id=scheduler__admin__dsa__RMD5303b1beb41b3b9a8_at_1474455600_5657

[2016-09-23 05:15:46] root 44851 78.1 87.2 36810204 28654208 Sl 4:00 59:13:00 [splunkd pid=21550] search =--id=scheduler__admin__dsa__RMD5303b1beb41b3b9a8_at_1474617600_477

[2016-09-23 05:10:06] root 44851 81.2 87.4 36756956 28714840 Sl 4:00 56:54:00 [splunkd pid=21550] search =--id=scheduler__admin__dsa__RMD5303b1beb41b3b9a8_at_1474617600_477

Each time, the searches from the Dell SonicWALL app are the ones using the most memory. I am not sure if something is wrong with the app, or if it's just the heavy indexing load (578 KB/s as per DMC) on the server. I also have a case open with support and will update here once I get a response.
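To group log lines like the ones above by search rather than by PID, something like this sketch could work. It assumes the search ID layout seen in this output, `scheduler__<user>__<app>__<hash>_at_<epoch>_<n>`, with no underscores inside the user or app name; the function names `parse_sid` and `peak_mem_by_sid` are made up for illustration.

```shell
#!/bin/bash

# Split a scheduler search ID into "<app> <scheduled epoch>".
# Assumes scheduler__<user>__<app>__<hash>_at_<epoch>_<n>, as in the log above.
parse_sid() {
    echo "$1" | sed -E 's/^scheduler__([^_]+)__([^_]+)__.*_at_([0-9]+)_[0-9]+$/\2 \3/'
}

# Print the highest %MEM (field 6 of the timestamped log) per search ID,
# largest first. Lines without an --id= argument are ignored.
peak_mem_by_sid() {
    awk '{
        for (i = 1; i <= NF; i++) {
            if ($i ~ /--id=/) {
                sid = $i
                sub(/^.*--id=/, "", sid)
                mem = $6 + 0
                if (!(sid in peak) || mem > peak[sid]) peak[sid] = mem
                break
            }
        }
    }
    END { for (s in peak) print peak[s], s }' "$1" | sort -rn
}
```

For instance, `parse_sid scheduler__admin__dsa__RMD5303b1beb41b3b9a8_at_1474488000_6131` yields the app name `dsa` and the scheduled epoch, which on a system with GNU date can be made readable with `date -d @1474488000`.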

Thanks for the script.

I ended up disabling the two searches which were consuming the resources. Both were from the DSA app. Resource usage is under control now.

I guess "Why does my indexer stop responding to search heads but indexing continues?" is a good situation to be in.

Imagine the opposite ;-)

Scaling up the environment is never easy. The following speaks about it - Splunk Sizing and Performance: Doing More with More

Over the past couple of months we tried to scale up by adding indexers, adding CPU cores, and so on. We also took a closer look at what was using the resources and optimized it.