- Why does importing CSV files from a server directo...

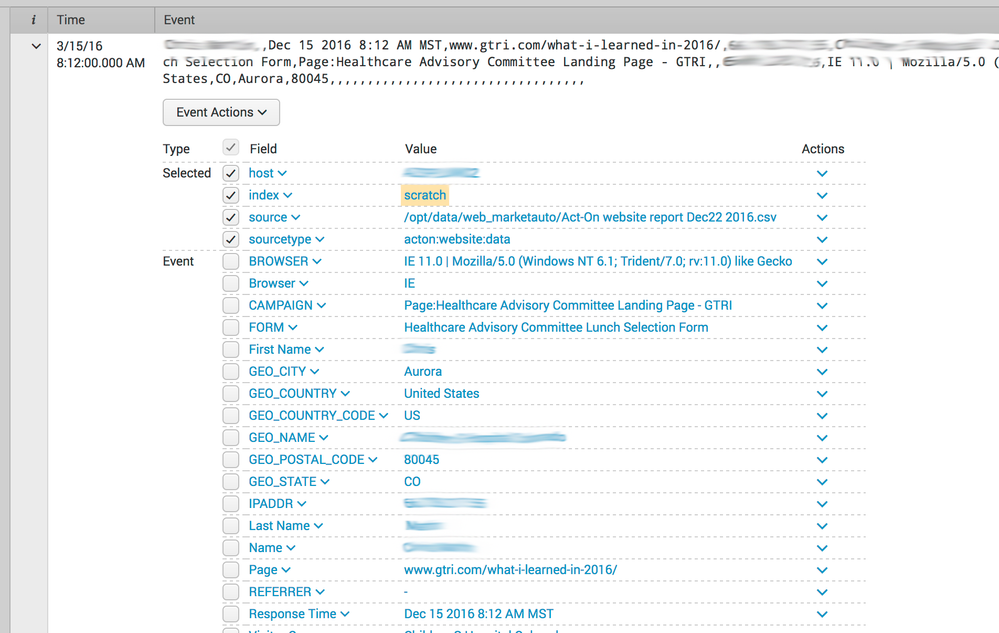

I am monitoring a directory on the search head server that contains a group of CSVs that are being imported into Splunk. I have set up an app for this import type with inputs, props and transforms (pasted below), and it works for 9,997 out of 10,012 line items in an example CSV. The CSV does contain header fields that I have extracted; however, the header row is not consistent from file to file. The time field "Response Time" is consistent, though, and it is the field I need the events timestamped on.

The issue I am running into is that a few events are not picking up the correct MONTH, even though I have defined which field to extract the event time from. I cannot find any pattern or reason for the few oddball events showing a different month than the "Response Time" field defined in transforms.conf.

I might be missing something or have things turned around, but I am at a loss as of now.

inputs.conf:

[monitor:///opt/data/web_marketauto]

index = scratch

sourcetype = acton:website:data

props.conf

[acton:website:data]

INDEXED_EXTRACTIONS = csv

NO_BINARY_CHECK = true

SHOULD_LINEMERGE = false

CHECK_FOR_HEADER = True

CATEGORY = Structured

DESCRIPTION = Website data from Act-On

PULLDOWN_TYPE = true

HEADER_FIELD_LINE_NUMBER = 1

TRANSFORMS-webfieldtransform = web_field_trans

transforms.conf

[web_field_trans]

TIME_FIELD = Response Time

TIME_FORMAT = %b %d &Y %H:%M %Z

DELIMS = ,

Screenshot of the indexed oddball event:


I reworked my .conf files and in doing so solved my issue (and a few others). Instead of letting Splunk define my fields and timestamp, I am using TIME_PREFIX, REGEX, FIELDS, TRANSFORMS and REPORT to explicitly tell Splunk what to do. Everything works great now, and it gives me more flexibility to define or rename fields via REPORT if the header names ever change or new ones are added in the future.

inputs.conf

[monitor:///opt/data/web_marketauto/...]

host = XX.XXX.XX.XXX

index = scratch

sourcetype = acton:website:data

- Monitoring a directory for all files

- Defining host since the host is not included in the source files

- Dumping to a test "scratch" index for testing

- Defining sourcetype

props.conf

[acton:website:data]

description = Website data from Act-On

KV_MODE = none

SHOULD_LINEMERGE = false

NO_BINARY_CHECK = true

TIME_PREFIX= ^[^\w]?^[^\d]+\,

MAX_TIMESTAMP_LOOKAHEAD = 25

TIME_FORMAT = %b %d %Y %I:%M %p %Z

TZ = MST

TRANSFORMS-t2 = delete_web_headers

REPORT-acton_website_fields = acton_website_fields

- Sourcetype as defined in inputs.conf

- Description of sourcetype

- "KV_MODE = none" stops Splunk from auto-generating fields that might override the fields I define in transforms.conf

- no line merging

- no binary check

- Defining where to find the timestamp that Splunk should use at index time

- How far ahead to look for the end of the timestamp from the end of my regex

- Defining the time stamp format in my source file

- What time zone my source file was written from

- Defining an index-time TRANSFORMS that will eliminate the header row from my source file

- Defining a search-time REPORT that will define the fields to use
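To make the TIME_PREFIX and MAX_TIMESTAMP_LOOKAHEAD settings above a bit more concrete, here is a minimal Python sketch of the idea: match the prefix regex, then look at the next 25 characters for the timestamp. It uses Python's `re` module as a stand-in for Splunk's regex engine, and the sample row and the `timestamp_window` helper are hypothetical, so treat it as an approximation rather than Splunk's actual behavior.

```python
import re

# Same pattern as TIME_PREFIX in props.conf above.
TIME_PREFIX = re.compile(r"^[^\w]?^[^\d]+\,")

def timestamp_window(line, lookahead=25):
    """Return the text Splunk would roughly scan for a timestamp, or None."""
    match = TIME_PREFIX.match(line)
    if match is None:
        return None
    # The window starts where the prefix match ends.
    return line[match.end():match.end() + lookahead]

# Hypothetical row: name, email, response time, visited page.
row = "Jane Doe,jane@example.com,Dec 15 2016 8:12 AM MST,/pricing"
print(timestamp_window(row))
```

Note that the prefix only consumes non-digit characters, so in this sketch a digit in the name or email column would make the match end at an earlier comma.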

transforms.conf

[delete_web_headers]

REGEX = ^(Name\s\,.*)

DEST_KEY = queue

FORMAT = nullQueue

[acton_website_fields]

DELIMS = ","

FIELDS = name,email,response_time,visited_page,ip_address,visitor_company,visitor_locations,referrer,search_engine,search_query,browser,event_name,event_start_date,user_type,first_name,last_name,email_address,registered,attended,duration,title,company,phone,address_1,address_2,city,state,post_code,country,company,job_title,business_phone,currently_use_splunk,company_splunk_use,upcoming_splunk_project,splunk_experience_level,topic,source_campaign,form,campaign,campaign_id,ip_address_2,browser_2,referrer_2,search,geo_company_name,geo_country_code,geo_country,geo_state,geo_city,geo_postal_code,page_url,email_3,description,lead_source,heard_about_gtri,email_4,middle_name,department,business_street,business_city,business_state,business_postal_code,business_country,business_fax,cell_phone,business_website,personal_website,account_name,full_name,mobile_phone,modified_on,ownder,parent_account,website,all_job_functions,linkedin_profile,twitter_profile,facebook_profile,gtri_splunk_services,additional_details,comments,address_id,splunk_experience,splunk_use_case

[delete_web_headers]

- REGEX that captures the first row in my source file

- DEST_KEY = queue tells Splunk to route the row matched by the REGEX to a queue

- FORMAT = nullQueue names that queue, so the matched row is discarded at index time

- This eliminates capturing the first row from my source file

[acton_website_fields]

- DELIMS tells Splunk what the delimiter will be when I define my fields

- FIELDS are the manually assigned fields that I defined for every row of my source file(s)

- This gives me a lot of options for normalizing the field names at search time
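As a rough illustration of what the two stanzas above do together, here is a small Python sketch: drop any row matching the header regex, then map the remaining comma-separated values onto the field names by position. The shortened field list, the sample rows, and the `extract_fields` helper are hypothetical, and a plain `split(",")` is a simplification of how Splunk applies DELIMS.

```python
import re

# Same pattern as the delete_web_headers stanza.
HEADER_RE = re.compile(r"^(Name\s\,.*)")

# Hypothetical shortened list; the real FIELDS setting names every column.
FIELDS = ["name", "email", "response_time", "visited_page"]

def extract_fields(line):
    """Return None for a header row, else a dict of field name -> value."""
    if HEADER_RE.match(line):
        return None  # analogous to sending the event to nullQueue
    values = line.split(",")
    # zip pairs names and values by position, like DELIMS + FIELDS.
    return dict(zip(FIELDS, values))

# Hypothetical rows; the regex expects whitespace between "Name" and the comma.
header = "Name ,Email,Response Time,Visited Page"
row = "Jane Doe,jane@example.com,Dec 15 2016 8:12 AM MST,/pricing"

print(extract_fields(header))
print(extract_fields(row))
```

Because the names are assigned by position, renaming a field only means editing the list, which is the flexibility described above.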

I have gone a bit further in indexing other sources and defined individual fields on a row by row basis based on certain attributes of data in that row using multiple REPORTS - helps when groups of rows contain different fields.

Hopefully this helps someone out, hate it when solutions are not posted 😉


From your data I can see that your timestamp looks like this:

Dec 15 2016 8:12 AM MST

so your time format should be:

TIME_FORMAT = %b %d %Y %I:%M %p %Z

Maybe this is what is causing you problems.
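The difference between the 24-hour and 12-hour directives can be checked outside Splunk. The sketch below uses Python's `datetime.strptime`, which shares the same directive names; the helper names are hypothetical, and the zone abbreviation is split off by hand because Python's `%Z` only accepts a few zone names. Python is strict about leftover text, so Splunk may not fail in the same way on a mismatched format.

```python
from datetime import datetime

def parses_with(value, fmt):
    """Return True if value matches fmt completely."""
    try:
        datetime.strptime(value, fmt)
        return True
    except ValueError:
        return False

def parse_response_time(value):
    """Parse e.g. 'Dec 15 2016 8:12 AM MST' into (datetime, zone)."""
    stamp, zone = value.rsplit(" ", 1)  # zone abbreviation kept as plain text
    return datetime.strptime(stamp, "%b %d %Y %I:%M %p"), zone

sample = "Dec 15 2016 8:12 AM"
print(parses_with(sample, "%b %d %Y %H:%M"))     # 24-hour format, no AM/PM
print(parses_with(sample, "%b %d %Y %I:%M %p"))  # 12-hour format with AM/PM
print(parse_response_time("Dec 15 2016 8:12 PM MST"))
```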


Thanks for the sanity check. I implemented the time format change (I had missed the 24-hour vs. 12-hour strftime formatting in my original TIME_FORMAT), but the problem still exists.


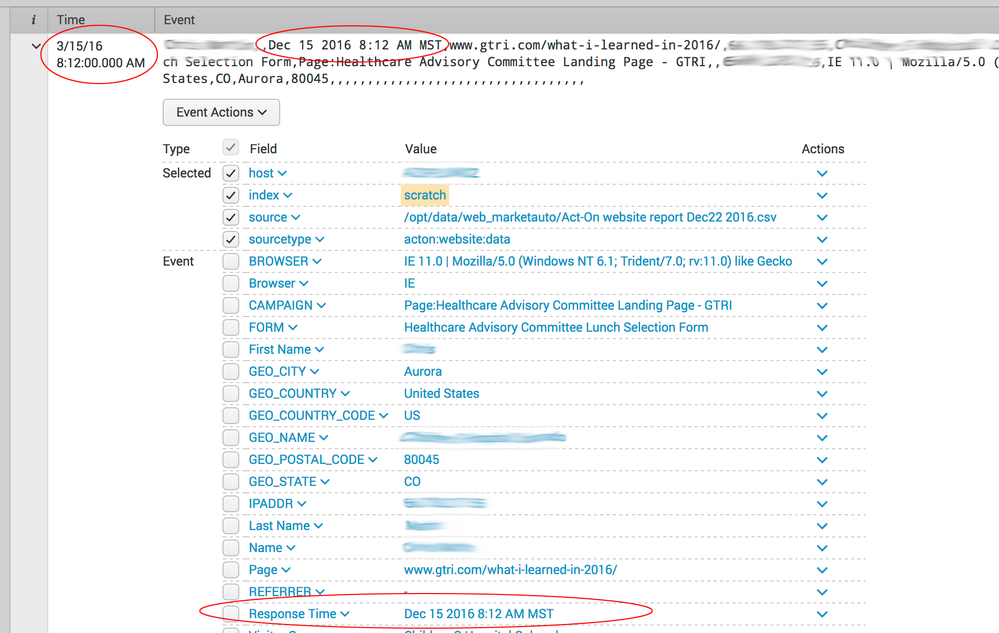

There's nothing incorrect I can see in that image.

It extracted Dec 15 2016 8:12 AM MST to Dec 15 2016 8:12 AM MST.

Is there a MONTH field, not shown, that is incorrect?

Or is there supposed to be a MONTH field that is missing?


If you look at the timestamp outside of the event, it shows 3/15/16 8:12.00 AM.

It is supposed to be 12/15/16 8:12.00 AM, as shown in the event itself.