- What kind of situation should I configure "SHOULD_...

My environment:

UF ver 7.2.3 on Windows

Indexer ver 7.2.3 on Linux

My UF monitors a log that contains a second header line in the middle of the file, as shown below.

* I don't know why, but this is how a certain product writes its log.

* This log is not CSV, nor any other kind of structured data.

time message

2019/02/05 10:00:00 this is test

2019/02/05 11:00:00 this is test

2019/02/05 12:00:00 this is test

time message

2019/02/05 13:00:00 this is test

2019/02/05 14:00:00 this is test

I intended to skip the first header line with the settings below, and to ignore the second header line.

Indexer's props.conf

[test]

DATETIME_CONFIG =

SHOULD_LINEMERGE = false

TIME_FORMAT = %Y/%m/%d %H:%M:%S

TZ = Asia/Tokyo

category = Custom

disabled = false

pulldown_type = true

LINE_BREAKER = ([\r\n]+)

UF's props.conf

[test]

CHARSET = UTF-8

NO_BINARY_CHECK = true

disabled = false

HEADER_FIELD_LINE_NUMBER = 2

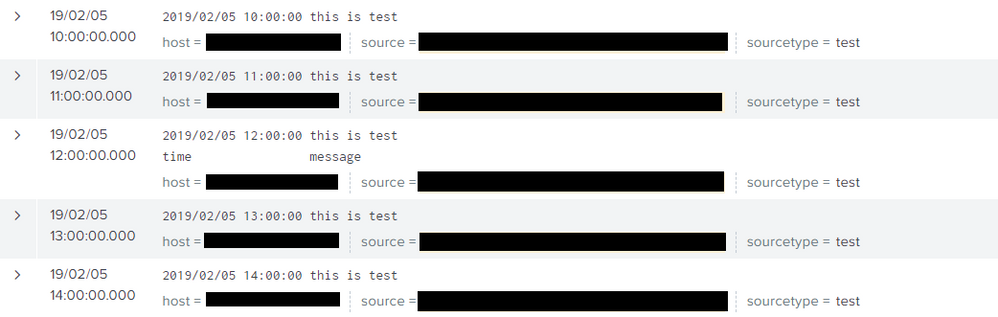

But one event above second header line was merged with header line like below capture.

Anyway, I added SHOULD_LINEMERGE = false to UF's props.conf, then it was solved.

But I can't understand how it works!

I think that I have to configure SHOULD_LINEMERGE = false to props.conf of Indexer or HF, but is it wrong?

Is there some situation that I have to configure it to props.conf of UF?

Please someone tell me about it.

Hi @yutaka1005

Set SHOULD_LINEMERGE = false. This should be set on your indexers (or on a heavy forwarder, if the data goes through one).

Also, on the indexers you should use props/transforms to send the header lines to "nullQueue". You can do this by following these instructions: https://docs.splunk.com/Documentation/Splunk/7.2.3/Forwarding/Routeandfilterdatad#Discard_specific_e...

Here is the props.conf that you need:

[test]

DATETIME_CONFIG =

SHOULD_LINEMERGE = false

TIME_FORMAT = %Y/%m/%d %H:%M:%S

TZ = Asia/Tokyo

category = Custom

disabled = false

pulldown_type = true

LINE_BREAKER = ([\r\n]+)

TRANSFORMS-null = setnull

And here is the transforms.conf:

[setnull]

REGEX = ^time\s

DEST_KEY = queue

FORMAT = nullQueue

Hope this helps you

Thank you for the answer!

I solved the problem by configuring nullQueue on the indexer and deleting HEADER_FIELD_LINE_NUMBER from the Universal Forwarder!

Apparently, setting HEADER_FIELD_LINE_NUMBER causes the indexer-side parsing to be skipped, as happens when INDEXED_EXTRACTIONS is set.

I think this explains why the indexer's SHOULD_LINEMERGE = false was ignored, and why setting it on the UF side stopped the lines from being merged.

I wonder if this is described in the manual...
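For reference, here is a minimal sketch of the Universal Forwarder stanza after the change described in this reply, assuming nothing else in the original UF stanza was altered. Only the removal of HEADER_FIELD_LINE_NUMBER comes from this thread; the comments are explanatory notes, and the indexer is assumed to keep the props.conf and transforms.conf given in the answer.

```ini
# UF's props.conf (sketch of the stanza after the fix)
[test]
CHARSET = UTF-8
NO_BINARY_CHECK = true
disabled = false
# HEADER_FIELD_LINE_NUMBER = 2 has been deleted from this stanza.
# As reported in this thread, once it is gone the indexer-side settings
# (LINE_BREAKER, SHOULD_LINEMERGE = false, TRANSFORMS-null) take effect again.
```

Comments are kept on their own lines because Splunk .conf files do not treat a trailing `#` on a setting line as a comment.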