Heavy Forwarder-based Transforms: splitting events to send to different sourcetypes without losing data?

Hey everyone!

I have what I would consider a complex problem, and I was hoping to get some guidance on the best way to handle it.

We are attempting to log events from an OpenShift (Kubernetes) environment. So far, I've successfully gotten raw logs flowing from Splunk Connect for Kubernetes (SCK) into our heavy forwarder via HEC, and then into our indexer.

At this step, the ingested data picks up a bunch of metadata about the pod name, container name, etc. The problem is what to do with it from there.

In this configuration, the individual component logs are combined into a single stream, with a few metadata fields prepended to each event. After this metadata, the event exactly matches what I'd consider a "standard" event, i.e. something Splunk is more used to processing.

For example:

`tomcat-access;console;test;nothing;127.0.0.1 - - [08/Dec/2021:13:25:21 -0600] "GET /idp/status HTTP/1.1" 200 3984`

This is first semicolon-delimited, and then space-delimited, as follows:

- "tomcat-access" is the name of the container component that generated the log.

- "console" indicates the source (console or file name).

- "test" indicates the environment.

- "nothing" indicates the user token.

- Everything after the fourth semicolon is the actual log line. In this example, it is Tomcat access log data.
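To make the layout concrete, here is a minimal Python sketch (run outside Splunk, purely to illustrate the structure) that splits the sample line into its five segments. It splits only on the first four semicolons, on the assumption that the real log line may itself contain semicolons; the field names are hypothetical labels, not names SCK actually assigns.

```python
# Hypothetical labels for the four OpenShift/SCK prefix segments.
PREFIX_FIELDS = ("component", "internal_source", "environment", "user_token")


def split_event(raw: str) -> dict:
    """Split an event into its four prefix fields plus the untouched log line."""
    # maxsplit=4 stops after the fourth semicolon, so any semicolons
    # inside the real log line stay in the payload.
    parts = raw.split(";", 4)
    if len(parts) != 5:
        raise ValueError(f"expected 4 prefix fields, found {len(parts) - 1}")
    fields = dict(zip(PREFIX_FIELDS, parts[:4]))
    fields["payload"] = parts[4]
    return fields


sample = ('tomcat-access;console;test;nothing;127.0.0.1 - - '
          '[08/Dec/2021:13:25:21 -0600] "GET /idp/status HTTP/1.1" 200 3984')
for key, value in split_event(sample).items():
    print(f"{key}: {value}")
```

The payload comes out byte-for-byte identical to a plain Tomcat access line, which is what makes handing it to an existing Tomcat sourcetype attractive.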

Compare this to another line in the same log:

`shib-idp;idp-process.log;test;nothing;2021-12-08 13:11:21,335 - 10.103.10.30 - INFO [Shibboleth-Audit.SSO:283] - 10.103.10.30|2021-12-08T19:10:57.659584Z|2021-12-08T19:11:21.335145Z|sttreic`

This breaks down as follows:

- shib-idp is the name of the container component that generated the log

- idp-process.log is the source file in that component

- test is the environment

- nothing is the user token

- Everything after the last semicolon is the Shibboleth process log. Notably, this part uses pipes as delimiters.
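The same four-field prefix applies to the Shibboleth line; only the payload differs. A minimal Python sketch, assuming the pipe-delimited audit record always starts after the first `] - ` (the end of the `[Shibboleth-Audit.SSO:283]` logger tag). The sample is cut off in this post, so its last field is incomplete.

```python
from typing import List, Tuple


def split_shib_event(raw: str) -> Tuple[List[str], List[str]]:
    """Return (prefix fields, pipe-delimited audit fields) for an idp-process.log line."""
    parts = raw.split(";", 4)
    if len(parts) != 5:
        raise ValueError("expected 4 semicolon-delimited prefix fields")
    prefix, payload = parts[:4], parts[4]
    # Assumption: the audit record follows the first "] - " (end of the logger tag).
    _, _, audit = payload.partition("] - ")
    return prefix, audit.split("|")


sample = ("shib-idp;idp-process.log;test;nothing;2021-12-08 13:11:21,335 - 10.103.10.30 - "
          "INFO [Shibboleth-Audit.SSO:283] - 10.103.10.30|2021-12-08T19:10:57.659584Z|"
          "2021-12-08T19:11:21.335145Z|sttreic")
prefix, audit = split_shib_event(sample)
print(prefix)
print(audit)
```

So this source needs a second, pipe-based extraction step on top of the semicolon split, which is why a single generic extraction will not cover every source.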

The SCK components, as I have them configured now, ship all these sources to "ocp:container:shibboleth" (or something like that). When they are shipped over, metadata is added for the container_name, pod_name, and other CRI-based log data.

What I am aiming to do

I would like to use the semicolon-delimited parts of the event to tell the heavy forwarder which sourcetype to assign. Ideally, I'd like to avoid making my own sourcetypes and regexes, but I can do that if I must.

So for the tomcat-access example above, I'd want:

- All the SCK/OpenShift-related fields to stay with the event.

- The event to be split into 5 segments.

- The event type to be identified from the first 2 fields (some values repeat in the first field, so the second field is the most important).

- The first 4 segments to be added as fields (like "identifier" or "internal_source").

- The 5th segment to be handed off to another sourcetype for further processing (in this case, "tomcat_localhost_access" from "Splunk_TA_tomcat").

- All the other fields to stay attached while Splunk_TA_tomcat does its field extractions.
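The wish list above boils down to one lookup keyed on the first two fields, rather than eight near-identical transforms. Here is that routing logic as a Python sketch: a model of the idea, not Splunk configuration. `tomcat_localhost_access` and `ocp:container:ilstu-shib-test` come from this thread, while `shibboleth:idp-process` and the prefix field names are hypothetical.

```python
PREFIX_FIELDS = ("component", "internal_source", "environment", "user_token")
DEFAULT_SOURCETYPE = "ocp:container:ilstu-shib-test"

# One table instead of eight transforms: (component, internal_source) -> sourcetype.
ROUTES = {
    ("tomcat-access", "console"): "tomcat_localhost_access",
    ("shib-idp", "idp-process.log"): "shibboleth:idp-process",  # hypothetical name
}


def route(raw: str) -> dict:
    """Keep the prefix as fields, pass the payload on, and choose a sourcetype."""
    parts = raw.split(";", 4)
    if len(parts) != 5:
        # Not in the expected layout: leave it under the original sourcetype.
        return {"sourcetype": DEFAULT_SOURCETYPE, "payload": raw}
    event = dict(zip(PREFIX_FIELDS, parts[:4]))
    event["payload"] = parts[4]
    # Unknown (component, source) pairs also fall back to the original sourcetype.
    event["sourcetype"] = ROUTES.get((parts[0], parts[1]), DEFAULT_SOURCETYPE)
    return event


sample = ('tomcat-access;console;test;nothing;127.0.0.1 - - '
          '[08/Dec/2021:13:25:21 -0600] "GET /idp/status HTTP/1.1" 200 3984')
print(route(sample)["sourcetype"])
```

With this shape, supporting another source is one more table row instead of another copy of the transform.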

If this isn't possible, I could write a separate sourcetype transform for each event type, since the source program has 8 potential sources. But that would involve quite a bit of duplication.

Even as I type this out, I'm getting the sinking feeling that I'll need to just bite the bullet and make 8 different transforms. But one can hope, right?

Any help would be appreciated. I've completed the sysadmin and data admin training, but nothing more advanced than that. I suspect I'll need this pattern for other OpenShift logs of ours in the future, but I don't know yet.

I just wanted to let people know that I did figure this out, and it was a bit of a wild trip. 🙂

Turns out, I was mixing up index-time field extraction and search-time field extraction.

What I'm doing now (and I can post code examples) is having SCK send in the data, which the heavy forwarder then assigns to a different sourcetype. From there, an app on my indexer does the field extraction. I do need a unique field extraction regex for each sourcetype, but that's not terribly difficult. I'm also adding a bunch of human-readable and CIM-compliant field aliases, so I think I'm getting some pretty rich data.
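For illustration, the "one regex per sourcetype, plus aliases" approach can be modeled in Python like this. It assumes the OpenShift prefix is still present in the raw event, so the regex captures the four prefix fields too. The alias targets (`src`, `http_method`, `uri_path`) are my assumption of the matching CIM Web data model names and should be checked against the CIM documentation; the prefix field names are hypothetical.

```python
import re

# One extraction regex per sourcetype; named groups become field names.
# Assumes the OpenShift prefix is still at the front of the raw event.
EXTRACTIONS = {
    "shibboleth:tomcat-access": re.compile(
        r'^(?P<component>[^;]*);(?P<internal_source>[^;]*);'
        r'(?P<environment>[^;]*);(?P<user_token>[^;]*);'
        r'(?P<ip>\S+) - (?P<user>\S+) \[(?P<timestamp>[^\]]+)\] '
        r'"(?P<method>[A-Z]+) (?P<request_uri>\S+) (?P<protocol>[^"]+)" '
        r'(?P<status>\d{3}) (?P<bytes_sent>\d+|-)$'
    ),
}

# Hypothetical alias table: original field -> assumed CIM Web field name.
ALIASES = {
    "shibboleth:tomcat-access": {
        "ip": "src",
        "method": "http_method",
        "request_uri": "uri_path",
    },
}


def extract(sourcetype: str, raw: str) -> dict:
    """Extract fields for a sourcetype, then add aliases alongside the originals."""
    pattern = EXTRACTIONS.get(sourcetype)
    match = pattern.match(raw) if pattern else None
    if match is None:
        return {}
    fields = match.groupdict()
    for original, alias in ALIASES.get(sourcetype, {}).items():
        if original in fields:
            fields[alias] = fields[original]  # keep the original field too
    return fields


sample = ('tomcat-access;console;test;nothing;127.0.0.1 - - '
          '[08/Dec/2021:13:25:21 -0600] "GET /idp/status HTTP/1.1" 200 3984')
print(extract("shibboleth:tomcat-access", sample))
```

In Splunk itself, the alias step corresponds to `FIELDALIAS-` settings in props.conf, which likewise keep the original field and add the alias.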

The approach of having the transform assign a known sourcetype and then letting another app pick it up seems to have failed. I'm not sure why, but I'm also not questioning it. 🙂

After some digging, I'm finding evidence that I can't feed one sourcetype into another. I've had some success stripping out the OpenShift-related prefix fields at the front of each event, but it seems I can't then pass the result into another sourcetype; or, if I do, it doesn't get processed properly. I've also tried cloning the sourcetype, but I think I'm missing a step there, as it doesn't appear to be picked up by the tomcat_local_access sourcetype (if that even exists).

I also saw some documentation suggesting that you can run two transforms in a row, but I don't know how to make sure both get applied to the same line. Instead, it looks as if each transform matches on its own criteria.
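Assuming that transforms listed together in one `TRANSFORMS-` setting run in order on each event, each checking its own REGEX against its own source key (by default `_raw`), a later transform sees whatever an earlier one wrote. This Python sketch models that assumption; it is an emulation, not Splunk's code. The keys `_raw` and `sourcetype` mirror Splunk's, while `ocp_prefix` is a hypothetical field name.

```python
import re

# Each transform: (source key, regex, destination key, function building the new value).
TRANSFORMS = [
    # 1. Copy the four OpenShift prefix fields aside before anything rewrites _raw.
    ("_raw", re.compile(r"^((?:[^;]*;){4})"), "ocp_prefix", lambda m: m.group(1)),
    # 2. Pick the sourcetype from the first two fields.
    ("_raw", re.compile(r"^tomcat-access;console;"), "sourcetype",
     lambda m: "shibboleth:tomcat-access"),
    # 3. Strip the prefix so _raw holds only the Tomcat access line.
    ("_raw", re.compile(r"^(?:[^;]*;){4}(.*)$"), "_raw", lambda m: m.group(1)),
]


def apply_transforms(event: dict, transforms: list) -> dict:
    """Run transforms in order; each sees the event as left by the previous ones."""
    event = dict(event)  # work on a copy
    for source_key, regex, dest_key, build in transforms:
        match = regex.search(event.get(source_key, ""))
        if match:  # a transform whose regex does not match changes nothing
            event[dest_key] = build(match)
    return event


sample = ('tomcat-access;console;test;nothing;127.0.0.1 - - '
          '[08/Dec/2021:13:25:21 -0600] "GET /idp/status HTTP/1.1" 200 3984')
event = {"_raw": sample, "sourcetype": "ocp:container:ilstu-shib-test"}

in_order = apply_transforms(event, TRANSFORMS)
reversed_order = apply_transforms(event, TRANSFORMS[::-1])
print(in_order)
print(reversed_order)
```

With the order reversed, the prefix is stripped first, so the sourcetype regex no longer matches and the prefix values are gone: ordering is what decides whether the chain "loses data".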

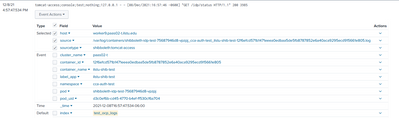

Edit: It's the end of the day and I need to step away for now. Here's my latest attempt at getting the heavy forwarder to generate search-time field extractions. However, no new fields are showing up in my search results, so I can't tell whether it's doing what it should.

props.conf

#Intake from Shib containers (OCP specific!)

[ocp:container:ilstu-shib-test]

TRANSFORMS-shibboleth-tomcat-access = shibboleth:tomcat-access-sourcetype

[shibboleth:tomcat-access]

REPORT-tomcat_access_fields = shibboleth:tomcat-access-fields

transforms.conf

[shibboleth:tomcat-access-sourcetype]

REGEX = tomcat-access;console;.*?;.*?;(.*)

DEST_KEY = MetaData:Sourcetype

FORMAT = sourcetype::shibboleth:tomcat-access

[shibboleth:tomcat-access-fields]

REGEX = tomcat-access;console;.*?;.*?;(?P<ip>(?:(?:25[0-5]|2[0-4][0-9]|[01]?[0-9][0-9]?)\.){3}(?:25[0-5]|2[0-4][0-9]|[01]?[0-9][0-9]?)|(?:[A-F0-9]{1,4}:){7}[A-F0-9]{1,4})\s-\s(?P<user>(-|\w+))\s\[\d+\/\w+\/\d{4}:\d{2}:\d{2}:\d{2}\s[\+\-]\d{4}\]\s"(?P<method>[A-Z]{3,7})\s(?P<request_uri>[\S]+)\s(?P<protocol>[\w\/\.]+)"\s(?P<status>\d{3})\s(?P<bytes_sent>(?:\d+|-))$

FORMAT = ip::$1 user::$2 method::$4 request_uri::$5 protocol::$6 status::$7 bytes_sent::$8

WRITE_META = true
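One way to sanity-check the REGEX/FORMAT pair outside Splunk is Python's `re`, which supports the same `(?P<name>...)` named-group syntax used here (Splunk uses PCRE; the constructs in this particular regex behave the same in both). The `resolve_format` helper is hypothetical: it only emulates `field::$N` substitution for this check and is not how Splunk implements FORMAT.

```python
import re

# REGEX from [shibboleth:tomcat-access-fields], split across lines but otherwise verbatim.
REGEX = (
    r'tomcat-access;console;.*?;.*?;'
    r'(?P<ip>(?:(?:25[0-5]|2[0-4][0-9]|[01]?[0-9][0-9]?)\.){3}'
    r'(?:25[0-5]|2[0-4][0-9]|[01]?[0-9][0-9]?)|(?:[A-F0-9]{1,4}:){7}[A-F0-9]{1,4})'
    r'\s-\s(?P<user>(-|\w+))'
    r'\s\[\d+\/\w+\/\d{4}:\d{2}:\d{2}:\d{2}\s[\+\-]\d{4}\]'
    r'\s"(?P<method>[A-Z]{3,7})\s(?P<request_uri>[\S]+)\s(?P<protocol>[\w\/\.]+)"'
    r'\s(?P<status>\d{3})\s(?P<bytes_sent>(?:\d+|-))$'
)
FORMAT = "ip::$1 user::$2 method::$4 request_uri::$5 protocol::$6 status::$7 bytes_sent::$8"

SAMPLE = ('tomcat-access;console;test;nothing;127.0.0.1 - - '
          '[08/Dec/2021:13:25:21 -0600] "GET /idp/status HTTP/1.1" 200 3984')


def resolve_format(fmt: str, match: "re.Match") -> dict:
    """Hypothetical helper: turn 'name::$N' pairs into {name: group N}."""
    fields = {}
    for pair in fmt.split():
        name, _, ref = pair.partition("::")
        fields[name] = match.group(int(ref.lstrip("$")))
    return fields


m = re.search(REGEX, SAMPLE)  # unanchored search, like a transform REGEX
fields = resolve_format(FORMAT, m)
print(fields)
# $3 is the unnamed inner (-|\w+) group inside <user>, which is why FORMAT skips it.
print(m.group(3))
```

For the sample line, the numbered FORMAT references resolve to the same values as the named groups, so if no fields appear, the regex itself is probably not the culprit for this line.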