- Splunk Answers

- :

- Using Splunk

- :

- Splunk Dev

- :

- Re: How do I remove a Splunk event via the Splunk ...

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark Topic

- Subscribe to Topic

- Mute Topic

- Printer Friendly Page

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I apologize in advance for the long question...I want to make sure to describe the issue thoroughly.

The basic goal:

I am trying to get a table from one database (let's call this the source table) to be replicated and stay replicated exactly within our Splunk database. I do not want to have historical data in the Splunk database but rather a direct copy of the source table in the Splunk database. This means that when new data is updated in the source table, I would like to have the data in the Splunk database updated as well (not inserted as a new row in the Splunk table). We would like to create charts, alerts, etc off of the data within Splunk.

Paths already taken:

I have used the DB Connect app to push data from the source database. Item 1 and 2 shows the results of that effort:

Path 1. The DB Inputs' "Batch Input" type would be an ideal for what I am trying to accomplish. My understanding is that "Batch Input" drops the existing Splunk database and recreates the Splunk table anew from the source table every time. The problem with this solution is that the source table is huge. Dropping the existing Splunk table and regenerating it from scratch every hour or even every day would be an enormous use of data.

Path 2. The DB Inputs' "Rising Column" type looked promising but seems to violate my goal of not inserting the data from my source table into the Splunk table.

Path 3. I have started using the Splunk C# SDK to program a solution to this issue. My thought is that if use a scheduled C# service to:

a. get the data from the source table;

b. perform a search and purge the targeted existing data in the Splunk table (via a "search query | delete" SearchOneShotAsync method call) and;

c. and then insert (via a SendAsync method call) that I can "update" the data in the Splunk database.

Thus keeping a replica table in Splunk without the disadvantaged discussed above.

Here's the code (finally right? 🙂

public class SplunkFacade

{

private readonly ILogger _log;

private readonly IConfig _config;

private string _indexName;

public SplunkFacade(ILogger log, IConfig config)

{

_log = log;

_config = config;

}

public void UpdateEventData(string indexName)

{

_log.Verbose("UpdateEventData");

_indexName = indexName;

using (var service = new Service(Scheme.Https, _config.SplunkUri, _config.SplunkPort))

{

_log.Verbose("Connecting to {0}:{1} ", service.Context.Host, service.Context.Port);

try

{

var fakeKeyToUpdate = "5";

var fakeValueToUpdate = "Jim Jones";

LogOnAsync(service).Wait();

PurgePreviousValuesAsync(service, fakeKeyToUpdate).Wait();

InsertIntoSplunkAsync(service, fakeKeyToUpdate, fakeValueToUpdate).Wait();

}

catch (Exception ex)

{

_log.Fatal(ex, "Error inserting");

throw;

}

finally

{

LogOffAsync(service).Wait();

}

}

}

private async Task LogOnAsync(Service service)

{

await service.LogOnAsync(_config.SplunkUserName, _config.SplunkPassword);

}

private async Task LogOffAsync(Service service)

{

await service.LogOffAsync();

}

private async Task InsertIntoSplunkAsync(Service service, string key, string value)

{

ITransmitter transmitter = service.Transmitter;

await transmitter.SendAsync(String.Format("my_id={0} my_name={1}", key, value), _indexName);

}

private async Task PurgePreviousValuesAsync(Service service, string key)

{

var searchResults = await service.SearchOneShotAsync(GetPurgeSplunkSql(key));

foreach (var result in searchResults)

{

//write out the raw event

_log.Information("Result from Query -> {0}", result.ToString());

}

}

private string GetPurgeSplunkSql(string key)

{

var result = String.Format(@"search index=""{0}"" my_id=""{1}"" | delete", _indexName, key);

//var result = "search index=test-index my_id=5 | delete";

return result;

}

}

The question:

First off, please feel free to tell me if there's a more effective mechanism to achieve this goal. I am open to other ways to solve this issue.

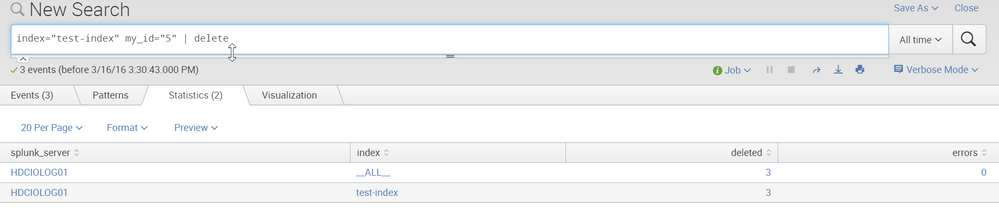

The code above runs and the InsertIntoSplunkAsync method inserts test data into the Splunk database nicely. The PurgePreviousValuesAsync method does not, however, delete the previous values even though the searchResults object shows no errors. It does not work, even when the hard-coded version without quotes is used, which works when directly run against the web server with or without quotes (see image below showing it working on the web server).

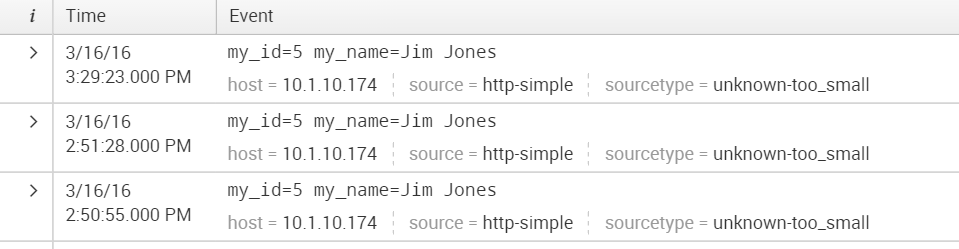

When I run this code three times in the Visual Studio debugger, I expect to see only one instance of "my_id=5" but I see instead three. See the second image below showing three events. Perhaps the SearchOneShotAsync is not capable of deleting, but only retrieving...if so, is there a way for me to remove the values in searchResults in the foreach loop via a RemoveAsync method call? Or perhaps there another SDK method capable of the "... | delete" query?

So the question, how do I achieve the goal above? Either through fixing the existing code or using a better path.

I recognize that this is an unusual task to do with Splunk, but I'd appreciate any suggestions.

Thank you for reading and for any help that you can give me.

Best regards,

Michael

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Also remember that only a user with the "can-delete" role (or equivalent capabilities) can delete events. I am sure that this restriction applies to authentication via the SDK, the same way as it does in the GUI etc.

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Also remember that only a user with the "can-delete" role (or equivalent capabilities) can delete events. I am sure that this restriction applies to authentication via the SDK, the same way as it does in the GUI etc.

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thanks. It is a good check. I checked the permissions this morning because the C# SDK service that I've created is using a Splunk service account. The service account does, in fact, have permissions to delete.

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

You are correct. I just checked the permissions again. It turns out that the "admin" role doesn't have "can_delete" and I had assumed it did. After giving the service account "can_delete" I can delete using:

var searchResults = await service.SearchOneShotAsync("search index=test-index my_id=5 | delete");

What is also interesting is that the SearchResult object's Response field shows a StatusCode of 200/OK, regardless of whether the user is permitted to delete or not.

Thanks for the help.

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I'm not saying one way or the other necessarily, but trying to take a step back and figure out in as ideal of a situation as you can probably get, what's going to happen? Also, a couple of options that perhaps you hadn't thought of yet and some just thinking out loud a bit.

Let's take a quick look at some numbers. Adjust as necessary.

Path 1 - "Dropping the existing Splunk table and regenerating it from scratch every hour or even every day would be an enormous use of data." For the sake of argument, let's call this 1 TB.

Path 2 - "The DB Inputs' "Rising Column" type looked promising but seems to violate my goal of not inserting the data from my source table into the Splunk table." Again for the sake of argument, let's call our daily update data size 10 GB. I also think your goal isn't specifically to not insert data, but rather one of the two very similar but not quite the same goals of either a) not filling up my disk or license or b) not make searches overly complex or slow to compensate for having outdated historical data in addition to the current set of data. Do those sound reasonable too?

They way you are going at this, I'm close to but not quite 100% positive that any REST or SDK way of doing a |delete would not actually delete data from disk, just like |delete itself doesn't. It WILL make it not show up in searches, though, so it acts like it's been deleted from that viewpoint. I'm also not aware of any method to "overwrite" data once indexed (though I didn't look hard), so you'll take the 10 GB/day hit regardless, both in disk space and in license. You will avoid the 1TB hit this way, though (beyond initial load).

But if you are going to take the 10 GB/day hit either way, I'm not sure if the argument against doing it the simple "built-in" way of a rising column is still entirely valid. In the example I describe, I don't think you would be able to control buckets going to frozen well, so I think you'd have to set the index up to hold, say, 30 days of data (1TB+10GB*30=4+TB, which isn't really very hard or expensive) and then wipe the index, batchload a new fresh copy and continue, taking that once-per-month license hit (which is allowed) in order to not take have to increase disk beyond measure. To the other point about search complexity, you may - MIGHT - complicate a few searches up a bit more than you would in a batch-upload (e.g. only one copy of any row) method, but it really shouldn't be too bad and should be able to be made pretty efficient. Frankly, you could handle that (possibly, anyway) by doing a carefully crafted |delete after the new insert is finished (via regular scheduled searches and whatnot) - again it wouldn't save disk but it could save search efficiency.

As an alternative you could export the change data from your DBMS on a schedule, then write a scripted input that inserts it with a delete .... although I don't have a clue how that would be done (you in fact might hit the same issue you have pointed about above).

On an entirely different note, do you really need all that data in Splunk? Can you create a summary of said data that may provide all the answers you actually need? If you could summarize to 1/60th of that data the ballgame changes entirely. That gets you to 16 GB initial load and 160 MB/day. Even at 1/10th, you are still looking at a 1 GB/day change rate. You may lose some drill-down, but sometimes that's an acceptable trade off if you can still drill down to detail in the original DB. (As an interesting aside; I recall someone wanting to have timechart always show all data points with their example being that in their use case sometimes there would be more than 10,000. I believe the question was asked - well, what monitor resolution are you running at that you'd be able to see 10,000 dots?)

Or, as suggested by the fez-wearing SplunkTrust member martin_mueller, who knows a lot about this stuff, have you investigated a lookup table?

Or, ignore me as I'm babbling and entirely and completely wrong anyway. 😞

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Rich:

I'm impressed with your insight. Regarding Path 2 point a and b, you are right on the mark. "Inserting" really doesn't matter to me as long as all other constraints are met.

Here's the detail:

a) license usage data is the main concern (the physical disk usage is less of a concern, although using TBs of space to represent a table with tens of GBs is obviously wasteful for the reports that we're doing). This is why the Splunk DB Connect v2.0 "drop and batch input" technique is not ideal.

b) complexity of search is the other main concern. If you know of any mechanisms that Splunk has to simplify queries, perhaps that would alleviate this issue. For example, perhaps this could be resolved through other means like tags. I'm still very new to Splunk, so I apologize that I'm not familiar with all the techniques.

Additionally c) Correctness of data is a concern. We don't want to have duplicate data in reports due to the inserts, I think that this comes down to simplifying the complexity of search so that the average user knows how to query for basic data without getting multiple inserted data.

Additionally d) Freshness of data is a concern. We would like to have the data that we're using to be as "real-time" as possible. This is the reason that having a C# service running and "updating" the data every 5 minutes is considered ideal. The Splunk DB Connect v2.0 "Rising column" technique running every 5 or 10 minutes is also very useful but it violates constraint b above. Perhaps simplifying the queries is the answer.

Regarding the statement that "|delete would not actually delete data from disk", this is an interesting point. I imagine that by this you mean that "|delete" is actually moving data from the main bucket to the frozen bucket? I didn't realize that this is how it works, so that's very interesting. The issue that I'm seeing with the C# SDK code that I have included is that the following code does not have any affect on removing data from Splunk searches. I was assuming that the SearchOneShotAsync with a "|delete" would remove the "my_id=5" data from Splunk searches. Perhaps SearchOneShotAsync is only capable of read-only searches? (note: the same code without the "|delete" returns the expected data)

var searchResults = await service.SearchOneShotAsync("search index=test-index my_id=5 | delete");

Regarding your question of "do you really need all that data", this is a very good question. We are trying to preserve as much of the original table as possible as we're not sure how the charting team will use it. To your point, I will be looking at removing some of the data that is unlikely to ever be used. The amount of transactional data on an hour by hour basis is very small, in the MB range, the issue is really that reproducing a table that is tens of GBs every couple of hours would be expensive.

Thanks again for your detailed response and any further detail you can give me would be appreciated.

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

| delete simply removes the results from displaying in a search but doesn't actually delete them from disk. Notice how carefully they phrase the first sentence under Delete events from subsequent searches? A bit of googling and searching will turn up more. Anyway, how well this really aligns with your needs I'm not sure about yet, but the behavior is something to keep in mind.

Still, MBs per hour is not an overly large amount to deal with.

I'm working down a potential easy-to-implement and easy-to-use path for this, but it'll take a bit of thinking and playing around to test. But basically, I'm investigating using a carefully crafted eventtype that would do the "filtering" of old data for you, so searches would just be against

eventtype=currentEvents | blah blah

There'd be some working getting your eventtype definition right, but from then on it'd be easy.

There's probably other answers, I have a few folks who may be thinking about this now.

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thanks again Rich, I hadn't noticed the careful phrasing until now.

I will try out the eventtype technique as well.

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Have you considered storing the copy of the database table in a lookup file? Those can be easily overwritten.

Trying to implement an SQL UPDATE for indexed events in Splunk is bad mojo.

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Martin:

Thank you for your quick response! Could you please expand on the lookup file idea? I'm familiar with the basics of using cvs lookup (I'm still very new to Splunk).

Does the lookup technique count toward the License Usage Data?

Thank you for giving me an angle that I hadn't considered.

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Lookups don't count towards licensing, it's not technically indexing anything.

You'd have a scheduled search something like this:

| dbquery "connection" "select foo from bar where baz" | maybe some postprocessing | outputlookup your_lookup

Whether this is the best approach for your situation or not is hard to tell from over here. For example, I wouldn't want to copy a 100GB table into a csv file.

Here's another alternative to consider: Make search-time queries into the live database rather than storing a copy in Splunk. This could be through explicit dbquery commands, or through configured database lookups. This would best address freshness, would cost no license, would consume no Splunk disk space, would be as correct as the underlying database, but would add load to said database and require constantly available connectivity.

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thanks Martin. This is really good detail.

A couple of weeks ago I looked at a blog that suggested a similar thing with the dbquery command and it then referred me to dbxquery command as the upgraded version of dbquery. After reading up on dbxquery, it seemed to be only available for searching in the DB Connect v2 app. Meaning that it would not be available in the main "Search & Reporting" app. After reading this, I assumed that creating a chart in the "Search & Reporting" app would not be possible. Perhaps I made a bad assumption?

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

IIRC, dbquery is v1 while dbxquery is v2. By default, both are only shared within the DBConnect app, but - at least for v1 - you can easily change the sharing to global and give those that should run the command permissions to view the app and command. Didn't actually check for v2, but I see little reason why this shouldn't work.

If it doesn't, you can still create a scheduled search within the DBConnect app that writes to outputlookup with a lookup definition defined and shared from elsewhere.

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thanks again Martin. I will read up on the outputlookup as well.