Hot/Warm/Cold bucket sizing - How do I set up my indexes.conf with as few per-index settings as possible?

After trying to get my head around the settings in indexes.conf for data retention, and trying numerous different approaches, I've decided to ask for guidance here.

Context: I have a Splunk indexer with approximately 50 indexes. I want to set up an indexes.conf with as few per-index settings as reasonably possible, to keep the file small and manageable.

- For hot/warm storage I save buckets on the SSD backed storage of the server itself. ~8TB available

- Cold storage is moved off to a NAS on the network - ~100TB available

- No frozen storage - i.e. data should be deleted after 1 year.

I would like to set up indexes.conf to:

- If any individual index has more than 100GB of hot/warm data, roll to cold. (I would actually prefer to do this based on age, say 60 days, rather than size, but it seems this is not standard functionality in Splunk.)

- If any data in any individual index is older than 1 year - permanently delete it.

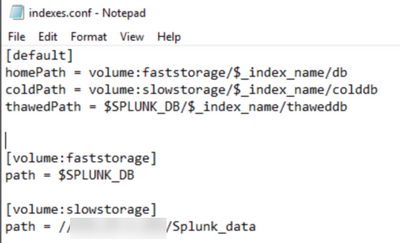

The definition of the hot/warm/cold storage is set up as follows and I think this is correct.

Any suggestions on how I can achieve the ask above would be appreciated. Preferably by using the [default] stanza as much as possible, or wildcard stanzas if that is possible. I would hate to have to define the same settings for 50 indexes, but if there's no other way, then so be it.
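The goals listed above can be translated into the units that indexes.conf expects. This is only a sketch of the arithmetic, assuming 1 GB = 1024 MB and a 365-day year; the variable names are illustrative, and it says nothing about which stanza the values belong in.

```python
# Convert the stated goals into the units indexes.conf expects.
# Assumptions: 1 GB = 1024 MB, 1 TB = 1024 GB, 1 year = 365 days.

MB_PER_GB = 1024
GB_PER_TB = 1024
SECONDS_PER_DAY = 24 * 60 * 60

hot_warm_limit_gb = 100   # per-index hot/warm limit from the question
retention_days = 365      # delete data older than one year
index_count = 50          # approximate number of indexes
fast_storage_tb = 8       # local SSD capacity

home_path_max_mb = hot_warm_limit_gb * MB_PER_GB
frozen_time_period_secs = retention_days * SECONDS_PER_DAY

# Worst case: every index fills its hot/warm allowance at the same time.
worst_case_hot_warm_gb = hot_warm_limit_gb * index_count
fast_storage_gb = fast_storage_tb * GB_PER_TB

print(f"homePath.maxDataSizeMB = {home_path_max_mb}")
print(f"frozenTimePeriodInSecs = {frozen_time_period_secs}")
print(f"worst-case hot/warm usage: {worst_case_hot_warm_gb} GB of {fast_storage_gb} GB")
```

With these assumptions the worst case for hot/warm data is 5000 GB, which fits inside the 8TB of local storage but leaves less room than it might first appear once summaries and filesystem overhead are counted.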

I have to admit that I may have bitten off more than I can chew here - This became way more complex than I thought very quickly. I really appreciate the responses provided here!

I am trying to capture what I've gleaned from this in an indexes.conf file. I would appreciate if you can see the comments and questions and perform a sanity check to let me know if I'm missing the plot somewhere

[default]

# should these paths be defined in each index stanza, or is it ok in default only?

homePath = volume:faststorage/$_index_name/db

coldPath = volume:slowstorage/$_index_name/colddb

thawedPath = volume:faststorage/$_index_name/thaweddb

# Any path required for the Splunk Summaries volume?

maxWarmDBCount = 300 # Is this required? If so, is it in the correct place?

maxDataSize = auto_high_volume # Is this required? If so, is it in the correct place?

frozenTimePeriodInSecs = 64800000 # 2 years

[volume:faststorage]

path = D:/Splunk/var/lib/splunk # Forward slashes on windows?

maxVolumeDataSizeMB = 5242880 # 5TB (no less than amount of 100GB indexes multiplied by 50 non-internal indexes)

[volume:slowstorage]

path = //MYNAS/Splunk_data

maxVolumeDataSizeMB = 10485760 # 10TB # Data is deleted when the volume reaches maximum size, or when frozenTimePeriodInSecs is met - whichever happens first?

[volume:_splunk_summaries]

path = D:/Splunk/var/lib/splunk # Forward slashes on windows?

maxVolumeDataSizeMB = 1048576 # 1 TB

[myindex1]

homePath.maxDataSizeMB = 102400 #100GB per index

[myindex2]

homePath.maxDataSizeMB = 102400 #100GB per index

[myindex3]

homePath.maxDataSizeMB = 102400 #100GB per index

#...

[myindex50]

homePath.maxDataSizeMB = 102400 #100GB per index

#Can a wildcard stanza be used such as [myindex*] to keep things simpler?
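As a quick sanity check on the numbers in the draft above, the values can be decoded back into human units. This is a minimal sketch, assuming 1 TB = 1024 * 1024 MB and 365-day years; it only checks arithmetic, not whether the settings are placed correctly.

```python
# Decode the numeric values from the draft back into human units.
# Assumptions: 1 TB = 1024 * 1024 MB, 1 year = 365 days.

SECONDS_PER_DAY = 24 * 60 * 60
MB_PER_TB = 1024 * 1024

draft_frozen_secs = 64800000
draft_volume_mb = {
    "faststorage": 5242880,
    "slowstorage": 10485760,
    "_splunk_summaries": 1048576,
}

frozen_days = draft_frozen_secs / SECONDS_PER_DAY
two_years_secs = 2 * 365 * SECONDS_PER_DAY

print(f"{draft_frozen_secs} s = {frozen_days:.0f} days")
print(f"2 x 365 days = {two_years_secs} s")
for name, size_mb in draft_volume_mb.items():
    print(f"volume:{name} = {size_mb / MB_PER_TB:.0f} TB")
```

The volume sizes match their comments, but 64800000 seconds is 750 days, slightly more than two 365-day years and roughly double the one-year retention stated in the original question.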

1) No, you can't use wildcards. Each index must be explicitly configured.

2) See my other answer. You can have default settings, with one caveat: even if you override the shipped [default] settings with your own local settings, the shipped per-index settings (settings for particular indexes defined in, for example, system/default/indexes.conf) still take precedence over anything defined in the [default] stanza.

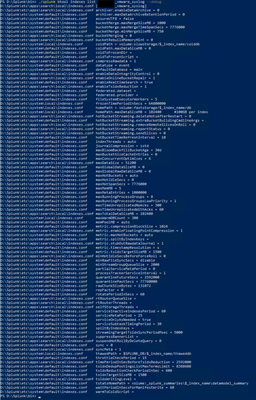

This is what I have in indexes.conf at the moment

[default]

homePath = volume:faststorage/$_index_name/db

coldPath = volume:slowstorage/$_index_name/colddb

thawedPath = $SPLUNK_DB/$_index_name/thaweddb

maxWarmDBCount = 300

frozenTimePeriodInSecs = 64800000

maxDataSize = auto_high_volume

homePath.maxDataSizeMB = 102400 #100GB per index

enableTsidxReduction = true

timePeriodInSecBeforeTsidxReduction = 15780000 # 6 months

[volume:faststorage]

path = $SPLUNK_DB

[volume:slowstorage]

path = //MYNAS/Splunk_data

I have not been able to see any evidence that it is actually doing anything.

This is what I had before, and it worked, but for some reason not automatically: it would only start rolling when the Splunk service was restarted, then mysteriously stop a while later, and continue again after another restart. Once a month I'd have to go in and, over the course of a few days, restart the Splunk indexer service several times to get it to free up enough space on the local drive.

[default]

homePath = volume:faststorage/$_index_name/db

coldPath = volume:slowstorage/$_index_name/colddb

thawedPath = $SPLUNK_DB/$_index_name/thaweddb

homePath.maxDataSizeMB = 100000 #100GB per index

coldPath.maxDataSizeMB = 200000 #200GB per index

maxWarmDBCount = 10

frozenTimePeriodInSecs = 64800000

maxDataSize = auto_high_volume

[volume:faststorage]

path = $SPLUNK_DB

[volume:slowstorage]

path = //MYNAS/Splunk_data

Of course I restarted splunk after making changes to indexes.conf.

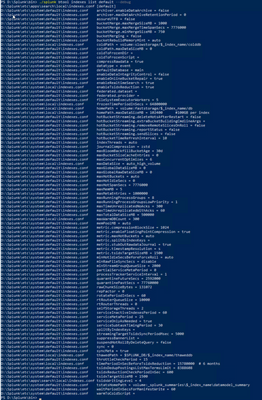

@PickleRick See attached screenshots of the btool and rest outputs

Can anyone propose improvements to my indexes.conf that follow all the aforementioned best practices and perform the bucket rotation/retention?

Thanks again, appreciate all the responses

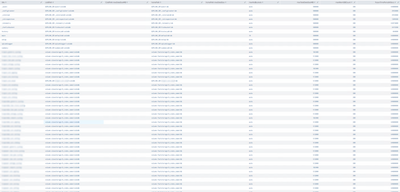

OK. So you have some indexes using volume definition and some which do not use it which makes it inconsistent and it might not work as you'd expect. You should redefine your default indexes to use volume definition as we discussed with @isoutamo (but be wary of the actual directory name since they don't map 1:1 from index name to directory name!).

That's one. And because of it volume-based housekeeping might not work properly.

Another thing: check your buckets, especially the time range of the events they contain, with dbinfo and manually verify whether they should be frozen or not. Remember that, as I wrote before, a bucket is rolled to frozen only when the _latest_ event in that bucket is older than the threshold.

Anyway, you have 8TB (I suppose, minus filesystem structures, minus some other system stuff) for 50 indexes for which you want to have 100GB threshold. Remember that unless you explicitly defined them somewhere else, you have additional stuff that is stored with the index, like summaries.

And I didn't notice that before - you have your volumes defined but do you have volume limits set?

Are you absolutely sure that you want tsidx reduction?

@PickleRick wrote:

> OK. So you have some indexes using volume definition and some which do not use it which makes it inconsistent and it might not work as you'd expect. You should redefine your default indexes to use volume definition as we discussed with @isoutamo (but be wary of the actual directory name since they don't map 1:1 from index name to directory name!).

Ha, I think you are overestimating my knowledge of Splunk 🙂 I'm gonna need some guidance on how to do this please

> Another thing is check your buckets - especially the timerange of events contained there - with dbinfo and manually verify if they should be frozen or not. Remember that - as I wrote before - index is rolled to frozen if _latest_ event in the index is older than threshold.

I tried googling where to find dbinfo and can only find references stating it should be a dashboard. Do you mean dbinspect?

> Anyway, you have 8TB (I suppose, minus filesystem structures, minus some other system stuff) for 50 indexes for which you want to have 100GB threshold. Remember that unless you explicitly defined them somewhere else, you have additional stuff that is stored with the index, like summaries.
>
> And I didn't notice that before - you have your volumes defined but do you have volume limits set?

I do not have volume limits. Is this done with maxTotalDataSizeMB? Does this mean that some data may be rolled to frozen even before the frozenTimePeriodInSecs age is reached if the index exceeds the volume limit?

> Are you absolutely sure that you want tsidx reduction?

No I'm not 🙂 I've taken that off

I think I need a fresh start with indexes.conf file, I've done a few attempts, but I'm cautious of doing something that will make me lose my data. Can you give me suggestions of what to change in which stanzas please?

dbinspect, of course. My bad. It was late 😉

OK. About the redefinition of default indexes: you need to get the stanzas for the default indexes (see that search I gave you, the one listing indexes) and redefine the path settings so that they are based on a volume definition rather than on the $SPLUNK_DB variable. They still need to point to effectively the same directory on disk so that you don't lose any data. As always, if in doubt, engage PS or your favourite local Splunk partner, since that's a potentially risky move.

Volumes can (and should) be size restricted with the maxVolumeDataSizeMB parameter (in each volume definition stanza separately, of course).

I see in https://docs.splunk.com/Documentation/Splunk/9.0.4/admin/Indexesconf#GLOBAL_SETTINGS that the homePath.maxDataSizeMB setting is a per index setting, and not a global setting.

Does this mean I have to create separate stanzas in indexes.conf for each index and cannot use this setting in the default stanza? If I can use it in the default stanza, does it still apply per index?

It works like that:

1) Some settings simply do not make sense at index level, so they are truly "global". lastChanceIndex is one example.

2) Per-index settings can be specified as default values. So if you create a default stanza with maxTotalDataSizeMB setting, any index that doesn't have this setting defined in its stanza will inherit this parameter.

3) Per-index setting defined explicitly at index level takes precedence over default values. So you can have default settings of - for example - 10 years retention period but override it on a specific index and set it for 2 years.

Also keep in mind that before all this takes place, Splunk merges all its configuration files according to the precedence rules.
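The three rules and the precedence note can be pictured with a small model. This is a simplified sketch, not Splunk's actual implementation: it assumes only two layers (system/default and a local file), and the stanza contents are illustrative values chosen for the example.

```python
# Simplified model of how one index's effective settings are resolved.
# Step 1: merge files by precedence (later layers override earlier ones).
# Step 2: per index, a value in the index's own stanza beats [default].

def merge_layers(layers):
    """Merge config layers; later layers override earlier ones per stanza and key."""
    merged = {}
    for layer in layers:
        for stanza, settings in layer.items():
            merged.setdefault(stanza, {}).update(settings)
    return merged

def effective_settings(merged, index_name):
    """Start from the merged [default] stanza, then overlay the index's own stanza."""
    result = dict(merged.get("default", {}))
    result.update(merged.get(index_name, {}))
    return result

# Hypothetical layers, lowest precedence first (illustrative values only).
system_default = {
    "default": {"frozenTimePeriodInSecs": "188697600"},
    "main": {"homePath": "$SPLUNK_DB/defaultdb/db"},
}
local = {
    "default": {
        "homePath": "volume:faststorage/$_index_name/db",
        "frozenTimePeriodInSecs": "31536000",
    },
    "myindex1": {"frozenTimePeriodInSecs": "7776000"},  # explicit per-index override
    "myindex2": {},                                     # inherits everything
}

merged = merge_layers([system_default, local])
for name in ("main", "myindex1", "myindex2"):
    print(name, effective_settings(merged, name))
```

In this model, main keeps its own homePath even though the local [default] stanza defines a volume-based one, because an index-specific value always wins over [default], regardless of which file the [default] value came from.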

I agree with @PickleRick: when you use volumes, you must use them on every index, not only on some of them. And once you have defined volumes, you must also define their sizes; otherwise there is no point in using them!

Don't allocate all available storage to a volume, as some free space is still needed on the disk. Maybe @gjanders can share his study about it?

r. Ismo

So repeating what @isoutamo and @PickleRick have mentioned, don't mix volumes and non-volumes on a path, that will not end well.

Additionally, when you size your volume with maxVolumeDataSizeMB, keep in mind that this limit covers the buckets only. The DMA (data model acceleration) objects do *not count* towards this size, so if you use DMA you also need to size volume:_splunk_summaries, or you may fill that disk with summaries.

Another tip: if you use dbinspect or similar to measure size, in previous versions (7.x or prior) I saw a difference of around 25% between what Splunk reported and what was actually on the disk of a Linux server.

Finally, this game changes completely for SmartStore: in that case you just configure cache padding instead (and/or you can use the per-index maxGlobalRawDataSizeMB, which applies to the whole index rather than per indexer). Since you are not using SmartStore, maxTotalDataSizeMB is per index (and you could set a default for it in [default]), but also *per* indexer, so you need to do the maths carefully.
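The sizing advice above can be turned into a rough calculation. This is a sketch under stated assumptions: the 25% reserve is an arbitrary safety margin chosen for illustration (not a Splunk recommendation), the 1 TB of other usage stands in for summaries kept on the same disk, and `volume_limit_mb` is a hypothetical helper.

```python
# Rough candidate values for maxVolumeDataSizeMB that leave headroom on disk.
# Assumptions: 1 TB = 1024 * 1024 MB; reserve and other usage are illustrative.

MB_PER_TB = 1024 * 1024

def volume_limit_mb(disk_tb, reserve_fraction, other_usage_tb=0.0):
    """Return a candidate volume limit after reserving free space and other usage."""
    if not 0 <= reserve_fraction < 1:
        raise ValueError("reserve_fraction must be in [0, 1)")
    usable_tb = disk_tb * (1 - reserve_fraction) - other_usage_tb
    if usable_tb <= 0:
        raise ValueError("nothing left for buckets after reserve and other usage")
    return int(usable_tb * MB_PER_TB)

# Local SSD: 8 TB, keep 25% free, set aside 1 TB for summaries on the same disk.
fast_limit_mb = volume_limit_mb(disk_tb=8, reserve_fraction=0.25, other_usage_tb=1)

# NAS: 100 TB, keep 25% free.
slow_limit_mb = volume_limit_mb(disk_tb=100, reserve_fraction=0.25)

print(f"faststorage maxVolumeDataSizeMB candidate: {fast_limit_mb}")
print(f"slowstorage maxVolumeDataSizeMB candidate: {slow_limit_mb}")
```

The point of the helper is only that the volume limit should be derived from the disk size minus headroom and minus anything else stored there, rather than set to the raw capacity.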

Did you read https://docs.splunk.com/Documentation/Splunk/latest/admin/Indexesconf ?

There is a [default] stanza, and there are settings for data retention. You can limit hot/warm size per index as well as cold size per index. You can set overall time-based retention. So where's the problem?

Oh, and remember that these settings work at the indexer level: you can't set a single overall limit across all indexers as a whole.

Of course I read that 🙂

I have tried numerous times but I cannot seem to get it the way I would like per the previously mentioned parameters

I currently have the following under default stanza.

maxWarmDBCount = 300

frozenTimePeriodinSecs = 31536000

maxDataSize = auto_high_volume

homePath.maxDataSizeMB = 102400

But the above does not do what I need.

I only have one indexer, so no concerns there.

Could you give me an example of how I should either fix the above, or the indexes.conf file as a whole?

Ok. First things first.

1) Make sure what your effective settings are

splunk btool indexes list default --debug

splunk btool indexes list my_index --debug

Verify that some settings aren't overwritten somewhere

Also verify the running settings (you did restart indexer after editing indexes.conf?) by

| rest /services/data/indexes | table title coldPath coldPath.maxDataSizeMB homePath homePath.maxDataSizeMB maxDataSize maxHotBuckets maxTotalDataSizeMB maxWarmDBCount frozenTimePeriodInSecs

2) I suppose I don't have to tell you this, but bucket management is a bit more complicated than just "remove everything as soon as it exceeds the limits". If a size limit is reached, the oldest bucket (in terms of the most recent event in the bucket!) is rolled to the next stage (warm->cold, cold->frozen). If the most recent event in a bucket gets older than frozenTimePeriodInSecs, the bucket is rolled from cold to frozen. (By the way, check whether there is a typo in your config as well: Splunk setting names are case-sensitive. Run "splunk btool check" often.)

3) The size limit I mentioned earlier can be per "path", a total per index, or the general volume limit, so a bucket might get rolled to frozen (i.e. deleted) by one of these limits even though the others have not been reached and you wouldn't expect it.

4) What do you mean by "does not do what I need"?

Your set of settings looks pretty consistent with what you want - 100GB for hot/warm and 365 days of retention period. So if your data gets rotated to cold or to frozen quicker than that, maybe you're hitting volume-based limits.
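The two rolling rules described in point 2 can be modelled in a few lines. This is a simplified sketch, not Splunk's actual housekeeping logic: the `Bucket` class, the function names, and the sample buckets are all hypothetical.

```python
# Simplified model of age-based freezing and size-based rolling.
from dataclasses import dataclass

@dataclass
class Bucket:
    name: str
    latest_event_epoch: int  # timestamp of the most recent event in the bucket
    size_mb: int

def buckets_to_freeze_by_age(buckets, now_epoch, frozen_time_period_secs):
    """A bucket qualifies only when its latest event is older than the threshold."""
    cutoff = now_epoch - frozen_time_period_secs
    return [b for b in buckets if b.latest_event_epoch < cutoff]

def buckets_to_roll_by_size(buckets, max_size_mb):
    """Roll buckets with the oldest latest-event time first until under the limit."""
    remaining = sorted(buckets, key=lambda b: b.latest_event_epoch)
    total = sum(b.size_mb for b in remaining)
    rolled = []
    while remaining and total > max_size_mb:
        oldest = remaining.pop(0)
        total -= oldest.size_mb
        rolled.append(oldest)
    return rolled

DAY = 86400
now = 1_700_000_000
buckets = [
    Bucket("a", now - 400 * DAY, 500),
    Bucket("b", now - 300 * DAY, 500),  # may hold older events, but its latest is recent
    Bucket("c", now - 10 * DAY, 500),
]

aged_out = buckets_to_freeze_by_age(buckets, now, 365 * DAY)
rolled = buckets_to_roll_by_size(buckets, max_size_mb=1000)
print([b.name for b in aged_out])
print([b.name for b in rolled])
```

Bucket "b" illustrates why data can outlive the retention period: the whole bucket stays until its newest event crosses the threshold, however old its earliest events are.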

You should also remember that some of Splunk's internal indexes have names and paths that don't match (e.g. main vs. defaultdb). For that reason you shouldn't just replace their paths with the $_index_name variable.

I also suggest setting a volume size on every volume in use, to avoid "lack of space" situations, which stop indexing.

splunk btool is your friend for solving this kind of issue. And as @PickleRick said, remember that btool shows what you have configured on disk, not what the running instance is using.
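Alongside btool, a small script can flag setting names that differ from a known name only by letter case, which is the kind of typo mentioned elsewhere in this thread. This is a hypothetical helper, not a Splunk tool; `KNOWN_SETTINGS` is a hand-picked subset of the setting names used in this thread, not the full indexes.conf list.

```python
# Flag setting names that match a known name only when case is ignored.
# KNOWN_SETTINGS is an illustrative subset, not the complete indexes.conf spec.

KNOWN_SETTINGS = {
    "homePath", "coldPath", "thawedPath", "maxWarmDBCount", "maxDataSize",
    "frozenTimePeriodInSecs", "homePath.maxDataSizeMB", "coldPath.maxDataSizeMB",
    "maxTotalDataSizeMB", "maxVolumeDataSizeMB", "path",
}

def find_case_mismatches(conf_text):
    """Return (line_number, found_name, expected_name) for case-only mismatches."""
    by_lower = {name.lower(): name for name in KNOWN_SETTINGS}
    problems = []
    for line_no, line in enumerate(conf_text.splitlines(), start=1):
        stripped = line.strip()
        # Skip blank lines, comments, and stanza headers.
        if not stripped or stripped.startswith("#") or stripped.startswith("["):
            continue
        if "=" not in stripped:
            continue
        key = stripped.split("=", 1)[0].strip()
        expected = by_lower.get(key.lower())
        if expected is not None and expected != key:
            problems.append((line_no, key, expected))
    return problems

sample = """[default]
maxWarmDBCount = 300
frozenTimePeriodinSecs = 31536000
maxDataSize = auto_high_volume
homePath.maxDataSizeMB = 102400
"""

print(find_case_mismatches(sample))
```

Run against the sample, the script reports line 3, where the name is spelled with a lowercase "in" instead of "In".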

Luckily, the default indexes (all the _whatever internal indexes, the main index) come with specific stanzas which have precedence over [default]. And those are defined in system/default so unless someone is really bent on breaking the working Splunk environment and fiddles with files in that directory, they should be there.

When/if you want to use volumes on all indexes, you must update those as well 😞

True. You could leave them "as is", but it's not good practice. If you define volumes, make all definitions volume-based. In that case, though, you wouldn't touch system/default/indexes.conf; instead, create your own app with the index definitions, or create a system/local/indexes.conf that overrides the default settings. Still, the same logic applies: the [default] stanza is applied only to those settings for which there are no index-specific values (which, of course, are determined using the standard Splunk config file precedence).