- Splunk Answers

- :

- Using Splunk

- :

- Splunk Search

- :

- Re: Winsorized Average Calculation

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark Topic

- Subscribe to Topic

- Mute Topic

- Printer Friendly Page

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

host = Mayhem

sourcetype="phutans:servo" host=R00878

| eval headers=split(_raw," ")

| eval plant_length=mvindex(headers,10) | sort 0 plant_length

(I am trying to calculate mean plant_lenght here but in a different way)

Let me put it as example :

say we get 10 values of plant_length. 1, 2, 2, 3, 4, 4, 4, 5, 20

Ideally to get mean, we would do (1+2+2+3+4+4+4++5+20)/10

But I don't want it this way. What I am looking is to identify 10% of data in each tail.

And then to replace them with the next available data.

e.g. 1, 2, 2, 3, 4, 4, 4, 5, 20

The 10 % on each side is, 1 and 20.

So the updated data set to become 2, 2, 2, 3, 4, 4, 4, 5, 5. (I just replaced the each extreme 10 % with the nearest value).

And the new average should be calculated like (2+2+2+3+4+4+4++5+5)/10

Could you please guide how to achieve this. Thank you.

I could use some help in implementing the above scenario.

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @zacksoft

Yes this works..to avoid index confusion I have now hard coded the values using makeresults.

I have tested for date_minute =1-10 and 1-20. Remember we have to change this in 3 places while testing, main query, first append and second append.

Try this :

| makeresults

| eval date_minute="1 2 3 4 5 6 7 8 9 10" | eval date_minute=split(date_minute," ")

| mvexpand date_minute

| table date_minute,_time

| where isnotnull(date_minute) | rename date_minute as x

| eventstats count(x) as maxcount

| eval perc=0.1*maxcount | streamstats count as row | eval z=maxcount-row

| eval diff=row-perc

| eval diff1=z-perc | where diff <=perc

| where diff >0

| append

[ | makeresults

| eval date_minute="1 2 3 4 5 6 7 8 9 10" | eval date_minute=split(date_minute," ")

| mvexpand date_minute

| table date_minute,_time

| where isnotnull(date_minute) | rename date_minute as x

| eventstats count(x) as maxcount

| eval perc=0.1*maxcount | streamstats count as row | where row>perc | eval z=maxcount-row | where z>=perc]

| append

[ | makeresults

| eval date_minute="1 2 3 4 5 6 7 8 9 10" | eval date_minute=split(date_minute," ")

| mvexpand date_minute

| table date_minute,_time

| where isnotnull(date_minute) | rename date_minute as x

| eventstats count(x) as maxcount

| eval perc=0.1*maxcount | streamstats count as row | eval z=maxcount-row

| eval diff=row-perc

| eval diff1=z-perc

| where diff1<perc

| where diff1>=0]

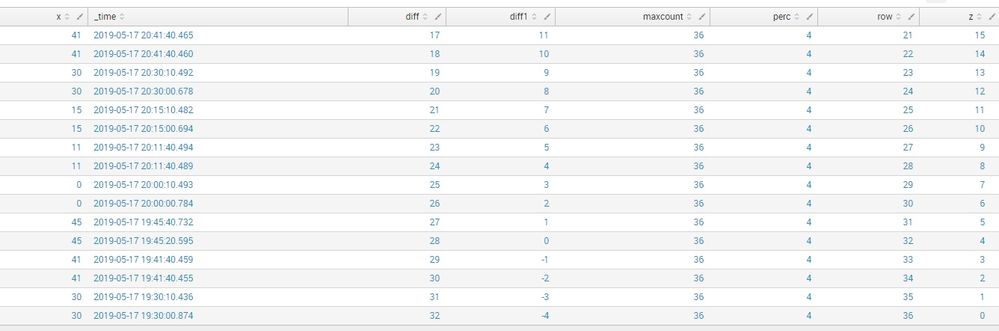

Here is the screen shot of the output..

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @zacksoft

Yes this works..to avoid index confusion I have now hard coded the values using makeresults.

I have tested for date_minute =1-10 and 1-20. Remember we have to change this in 3 places while testing, main query, first append and second append.

Try this :

| makeresults

| eval date_minute="1 2 3 4 5 6 7 8 9 10" | eval date_minute=split(date_minute," ")

| mvexpand date_minute

| table date_minute,_time

| where isnotnull(date_minute) | rename date_minute as x

| eventstats count(x) as maxcount

| eval perc=0.1*maxcount | streamstats count as row | eval z=maxcount-row

| eval diff=row-perc

| eval diff1=z-perc | where diff <=perc

| where diff >0

| append

[ | makeresults

| eval date_minute="1 2 3 4 5 6 7 8 9 10" | eval date_minute=split(date_minute," ")

| mvexpand date_minute

| table date_minute,_time

| where isnotnull(date_minute) | rename date_minute as x

| eventstats count(x) as maxcount

| eval perc=0.1*maxcount | streamstats count as row | where row>perc | eval z=maxcount-row | where z>=perc]

| append

[ | makeresults

| eval date_minute="1 2 3 4 5 6 7 8 9 10" | eval date_minute=split(date_minute," ")

| mvexpand date_minute

| table date_minute,_time

| where isnotnull(date_minute) | rename date_minute as x

| eventstats count(x) as maxcount

| eval perc=0.1*maxcount | streamstats count as row | eval z=maxcount-row

| eval diff=row-perc

| eval diff1=z-perc

| where diff1<perc

| where diff1>=0]

Here is the screen shot of the output..

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

you can test for values 1-20, replace 1-10 with 1-20 (remember the space between each number 🙂 ) and to make the change in 3 places.

The code works, but it is most probably an issue with your index data the parts before where I start doing the real stuff...but I am sure this will give you a good idea of what to do

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thanks @Sukisen1981 for your time. The solution helps.

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @zacksoft

I have modified the query above. Here is what I understood from your requirements. Firstly, I will try to explain what I have done with the bottom(tail) values for x.

this screen shot is an as is run on the _audit index without any trims being done

This has total 36 events (ref. - column maxcount), trimming 10% of this at the tail means trimming 3.6 , ~ 4 tail rows.

So, my understanding is here we need to trim the rows with values for x=41,41,30.30 AND replace them with the immediate 4 rows above. So the last 4 rows should become x=0,0,45,45 AND this will be the same as the 4 rows above that. In essence we will have 8 rows , with sets of values repeating for 4 rows. That means that the last 8 rows become, x=0,0,45,45,0,0,45,45.

Is this correct?

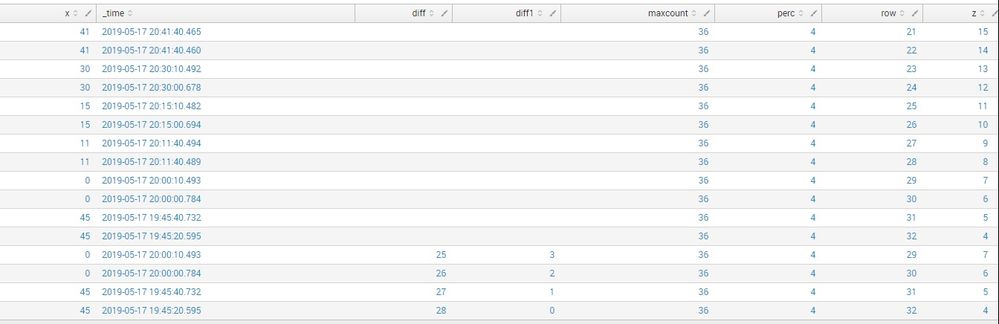

If it is then refer to this screen shot.

see how the last 8 rows now repeat in sets of 4, is this correct? The same logic has been applied to the first 4(or 😎 rows.

Now, on the query -

The query actually consists of 3 queries, the first main one followed by the 2 appends.

a) The first append or the second query is just trimming off the top and bottom 10%(4 rows) in this example.

b) the main search implements the logic for removing the head(top) 4 rows and replacing them by the first 4 rows in the second(first append) query.

c) I suggest you test it like this - use this query first.

index="_audit" | table date_minute,_time

| where isnotnull(date_minute) | rename date_minute as x

| eventstats count(x) as maxcount

| eval perc=0.1*maxcount | streamstats count as row | eval z=maxcount-row

| eval diff=row-perc

| eval diff1=z-perc

Here, i merely removed the where conditions from the main search (the top/head) query part. You can then manually see what applying the 2 where conditions ( | where diff <=perc | where diff >0 ) would do to the overall result.

d) exact same goes for the bottom/tail (second append) query.

Do not get confused by the query. Look at it as 3 parts, the middle part does the trim of the top/bottom 10% and the first and third queries append the values from the middle query, based on the number of rows as per the 10% rule.

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@Sukisen1981 - Thanks for the response.

After sorting the values , the 10% isn't ONLY to be removed from the tail end. 10% of the head end also to be removed.

like , 1 2 3 4 5 6 7 8 9 10

Lets say I have above 10 values)for simplicity I am considering 1 through 10). So when we say trimming the 10 % on each end it means, the value 1 from left and 10 from right will be removed. .

Lets move to the part where we understand what values to be replaced in place of "1" and "10".

After trimming 10% on both end , the nearest value of the trimmed number is to be replaced with.

Meaning the new set will be , 2 2 3 4 5 6 7 8 9 9

The average now will be calculated as (2+2+3+4+5+6+7+8+9+9)/10

I hope I didn't confuse you.

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @zacksoft

I am back again 🙂

Try this -

index="_audit" | table date_minute,_time

| where isnotnull(date_minute) | rename date_minute as x

| eventstats count(x) as maxcount

| eval perc=0.1*maxcount | streamstats count as row | eval z=maxcount-row

| eval diff=row-perc

| eval diff1=z-perc | where diff <=perc

| where diff >0

| append

[search index="_audit" | table date_minute,_time

| where isnotnull(date_minute) | rename date_minute as x

| eventstats count(x) as maxcount

| eval perc=0.1*maxcount | streamstats count as row | where row>perc | eval z=maxcount-row | where z>=perc]

| append

[search

index="_audit" | table date_minute,_time

| where isnotnull(date_minute) | rename date_minute as x

| eventstats count(x) as maxcount

| eval perc=0.1*maxcount | streamstats count as row | eval z=maxcount-row

| eval diff=row-perc

| eval diff1=z-perc

| where diff1<perc

| where diff1>=0]

Once again, run this on the _aidt index before implementing on your actual data.

NOTE - I have used the date_minute field to simulate number values.

It would be good if you can run this on a fixed time range , something like yesterday 2-3 PM or something like that, so that the data becomes easier to verify. Remember the first field x is the only one we are interested in, the rest of the fields are added for verification purposes only.

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

hi @zacksoft - Were you able to test this out?

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Does the value of 'x' contain the nearest value after dropping 10 % of extreme values on both end ? Which line in the query is confirming that ?

Also , I added | sort 0 x after | rename command as the values are only to be trimmed after they are sorted. Could you please clarify

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Not yet . I will test it today and will let you know by tomorrow.