- Splunk Answers

- :

- Splunk Administration

- :

- Monitoring Splunk

- :

- Re: Trend analysis with joined input lookups

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark Topic

- Subscribe to Topic

- Mute Topic

- Printer Friendly Page

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I have a joined input lookup as per the below

| union

[ inputlookup int_issue

| search reporter=test1 status!=closed status!=approved status!=cancelled labels!="*test2*" labels!="test3*" issuekey=zz* issuetype=test4 created > "2019-01-01 00:00:00"

| eval createddate=substr(created,1,10)

| where createddate>"2019-07-01 00:00:00.000"

| stats count as createticket by createddate]

[ inputlookup int_case

| search issue_url__c!=null if_parent_case__c="0" type!="Data Migration" type!="Implementation" io_system_area_1__c!="test2" io_system_area_1__c!="test2*" closeddate!=null

| eval dateclosed=substr(closeddate,1,10)

| where dateclosed>"2019-07-01 00:00:00.000"

| stats count as closed by dateclosed

| rename dateclosed as createddate]

| stats sum(createissue) sum(closed) by createddate

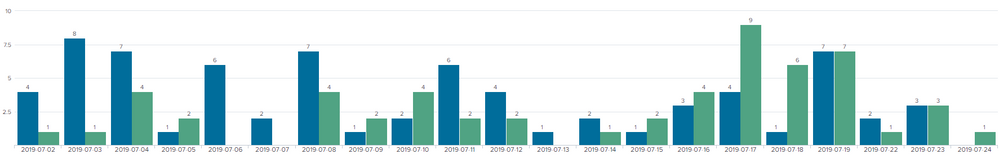

it pulls through like the below:

What i want to do is calculate the difference each day

Ive tried makeresults with no luck and even tried to add a simple | eval difference = createissue - closed

This doesnt work wither. I feel that the inputlookup does not work well with Splunks functions.

What i would like to see is a line overlay that shows the difference between each day and its only a simple calculation of 1 field minus the second field.

Any help appreciated

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Try this:

| inputlookup int_issue

| search reporter=test1 status!=closed status!=approved status!=cancelled labels!="*test2*" labels!="test3*" issuekey=zz* issuetype=test4 created > "2019-01-01 00:00:00"

| eval createddate=substr(created,1,10)

| where createddate>"2019-07-01 00:00:00.000"

| eval which="int_issue"

| inputlookup append=true int_case

| search which="int_issue" OR (issue_url__c!=null if_parent_case__c="0" type!="Data Migration" type!="Implementation" io_system_area_1__c!="test2" io_system_area_1__c!="test2*" closeddate!=null)

| eval which=coalesce(which, "int_case")

| eval dateclosed=if(which="int_case", substr(closeddate,1,10), null())

| where which=="int_issue" OR dateclosed>"2019-07-01 00:00:00.000"

| eval createddate=if(which=="int_issue", createddate, dateclosed)

| stats count(eval(which="int_issue")) AS created count(eval(which="int_case")) AS closed BY createddate

| rename COMMENT AS "Everything above is an optimized version of what you already have; everything below is the new stuff"

| reverse

| streamstats current=f last(closed) AS prev_closed last(created) AS prev_created

| reverse

| foreach prev_* [ eval <<MATCHSTR>> = <<MATCHSTR>> - <<FIELD>> | fields - <<FIELD>> ]

Here is a run-anywhere proof of concept:

index=_*

| bin _time span=1h

| rename _time AS createddate

| stats count(eval(match(sourcetype, "splunkd"))) AS closed count AS created BY createddate

| reverse

| streamstats current=f last(closed) AS prev_closed last(created) AS prev_created

| reverse

| foreach prev_* [ eval <<MATCHSTR>> = <<MATCHSTR>> - <<FIELD>> | fields - <<FIELD>> ]

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Try this:

| inputlookup int_issue

| search reporter=test1 status!=closed status!=approved status!=cancelled labels!="*test2*" labels!="test3*" issuekey=zz* issuetype=test4 created > "2019-01-01 00:00:00"

| eval createddate=substr(created,1,10)

| where createddate>"2019-07-01 00:00:00.000"

| eval which="int_issue"

| inputlookup append=true int_case

| search which="int_issue" OR (issue_url__c!=null if_parent_case__c="0" type!="Data Migration" type!="Implementation" io_system_area_1__c!="test2" io_system_area_1__c!="test2*" closeddate!=null)

| eval which=coalesce(which, "int_case")

| eval dateclosed=if(which="int_case", substr(closeddate,1,10), null())

| where which=="int_issue" OR dateclosed>"2019-07-01 00:00:00.000"

| eval createddate=if(which=="int_issue", createddate, dateclosed)

| stats count(eval(which="int_issue")) AS created count(eval(which="int_case")) AS closed BY createddate

| rename COMMENT AS "Everything above is an optimized version of what you already have; everything below is the new stuff"

| reverse

| streamstats current=f last(closed) AS prev_closed last(created) AS prev_created

| reverse

| foreach prev_* [ eval <<MATCHSTR>> = <<MATCHSTR>> - <<FIELD>> | fields - <<FIELD>> ]

Here is a run-anywhere proof of concept:

index=_*

| bin _time span=1h

| rename _time AS createddate

| stats count(eval(match(sourcetype, "splunkd"))) AS closed count AS created BY createddate

| reverse

| streamstats current=f last(closed) AS prev_closed last(created) AS prev_created

| reverse

| foreach prev_* [ eval <<MATCHSTR>> = <<MATCHSTR>> - <<FIELD>> | fields - <<FIELD>> ]

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thanks @woodcock

This is getting close but its not bringing back any created data. I might have got the last line wrong which is

| foreach prev_* [eval difference = prev_created - prev_closed | fields - difference]

createddate created closed prev_closed prev_created

1 2019-07-02 0 1 1 0

2 2019-07-03 0 1 4 0

3 2019-07-04 0 4 2 0

4 2019-07-05 0 2 4 0

5 2019-07-08 0 4 2 0

6 2019-07-09 0 2 4 0

7 2019-07-10 0 4 2 0

8 2019-07-11 0 2 2 0

9 2019-07-12 0 2 1 0

10 2019-07-14 0 1 2 0

11 2019-07-15 0 2 4 0

12 2019-07-16 0 4 9 0

13 2019-07-17 0 9 6 0

14 2019-07-18 0 6 7 0

15 2019-07-19 0 7 1 0

16 2019-07-22 0 1 3 0

17 2019-07-23 0 3 1 0

18 2019-07-24 0 1 1 0

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

No, no, no. The foreach command is special and when I write <<MATCSTR>> and <<FIELD>> I literally mean to use those EXACT strings (do not try to substitute in your field names). Give it a try EXACTLY as I typed it.

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thanks @woodcock

I see what you mean now as i have never used that method before.

Its now bringing back figures for those dates but im trying to understand how it correlates with my original query i put above that gave me the sum of total open and closed. Im getting the below results from your query which do not seem to calculate correctly. Are you able to advise?

Your awesome trend query

Column createddate created closed

2 2019-08-02 2 3

3 2019-08-01 2 0

4 2019-07-31 -2 -1

but on the graph i have which shows created and closed its as the below:

my original query

column createddate sum(create) sum(closed)

2 2019-08-02 2 5

3 2019-08-01 3 5

4 2019-07-31 1 4

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

You are going to have to take a small subset of data and figure out which of us is right by doing the work by hand. If I am wrong, then post your small subset of data here so that I can figure out where the problem is and I will double-check. Keep in mind that I have tested my work on fake data and it gives the correct answer for me.

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Sorry @woodcock

I was looking at this incorrectly inline with my dat and it does work and lovely stuff. I have another question in regards to trend date but ill raise a separate question for that