Splunk Answers

How to display percentage values in the Y-axis?


Hi,

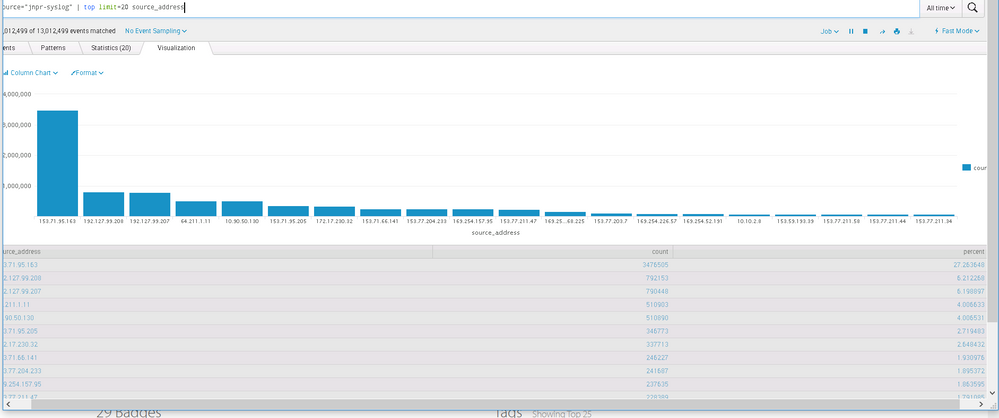

As the image above shows, the visualization chart displays the top values of the Source Address field.

The X-axis shows the source address and the Y-axis shows the count. I also need to include in the chart the percent value that appears in the table (grayed out in the image). How can I do that? The main aim is to show source address against percentage usage.

Kindly help me with this quickly.

Thanks & Regards,

Sushma.


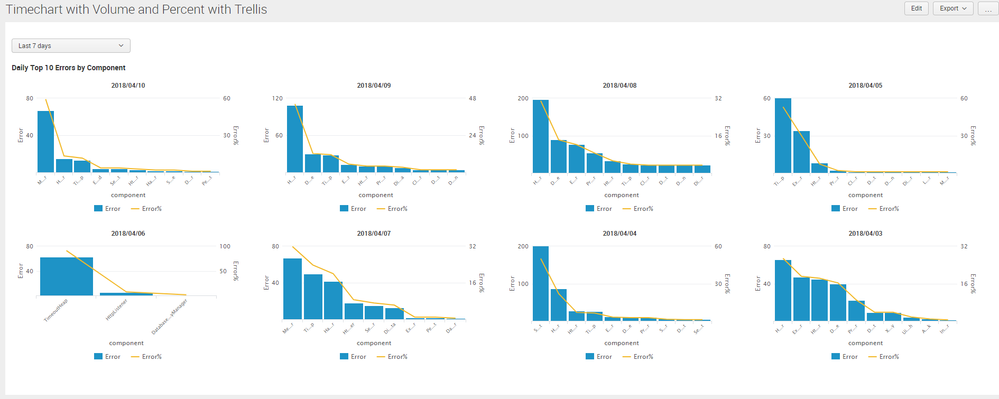

@muralisushma7, please try the following search. If you are on Splunk 6.6 or higher, you can use the Trellis layout to split the chart by Time.

source="jnpr-syslog" policy_name=Internet_Lab_Policy_Gateway_Logging source

| bin _time span=1d

| stats count as Count by source_address _time

| eventstats sum(Count) as Total by _time

| eval "Count%"=round((Count/Total)*100,2)

| fields - Total

| sort - _time Count

| streamstats count as sno by _time

| search sno<=20

| fields - sno

| eval _time=strftime(_time,"%Y/%m/%d")

| rename _time as Time

| stats last(Count) as Count last("Count%") as "Count%" by Time source_address

| sort - Count
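To make the arithmetic in the search above concrete, here is a rough Python model of the same steps (bin, stats, eventstats, eval, then the sort/streamstats/search top-N filter). It is only a sketch: the event tuples, the function name `daily_top_n`, and the UTC day boundary are assumptions for illustration; Splunk's bin aligns days to the search's time zone.

```python
from collections import defaultdict

DAY = 86400  # seconds; day boundaries assumed to be UTC in this sketch


def daily_top_n(events, n=20, span=DAY):
    """events: iterable of (epoch_seconds, source_address).

    Returns {bucket_start: [(source_address, count, percent), ...]},
    keeping the n busiest addresses per bucket, highest count first.
    """
    # bin _time span=... | stats count as Count by source_address _time
    counts = defaultdict(int)
    for ts, ip in events:
        counts[(ts - ts % span, ip)] += 1

    # eventstats sum(Count) as Total by _time
    totals = defaultdict(int)
    for (bucket, _), c in counts.items():
        totals[bucket] += c

    # eval "Count%"=round((Count/Total)*100,2), collected per bucket
    per_bucket = defaultdict(list)
    for (bucket, ip), c in counts.items():
        per_bucket[bucket].append((ip, c, round(c / totals[bucket] * 100, 2)))

    # sort - Count within each bucket | streamstats count as sno by _time | search sno<=n
    return {
        bucket: sorted(rows, key=lambda r: -r[1])[:n]
        for bucket, rows in per_bucket.items()
    }
```

For example, with events `[(0, "10.0.0.1"), (10, "10.0.0.1"), (20, "10.0.0.2"), (DAY + 5, "10.0.0.2")]` and `n=1`, the first day keeps `("10.0.0.1", 2, 66.67)` and the second day keeps `("10.0.0.2", 1, 100.0)`. Passing `span=60` models the per-minute variant discussed later in the thread.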

The following is a run-anywhere dashboard example based on Splunk's _internal index.

Here is the Simple XML dashboard code for the example:

<form>

<label>Timechart with Volume and Percent with Trellis</label>

<fieldset submitButton="false"></fieldset>

<row>

<panel>

<input type="time" token="tokTime" searchWhenChanged="true">

<label></label>

<default>

<earliest>-7d@h</earliest>

<latest>now</latest>

</default>

</input>

<chart>

<title>Daily Top 10 Errors by Component</title>

<search>

<query>index=_internal sourcetype=splunkd log_level!=INFO

| bin _time span=1d

| stats count as Error by component _time

| eventstats sum(Error) as Total by _time

| eval "Error%"=round((Error/Total)*100,2)

| fields - Total

| sort - _time Error

| streamstats count as sno by _time

| search sno<=10

| fields - sno

| eval _time=strftime(_time,"%Y/%m/%d")

| rename _time as Time

| stats last(Error) as Error last("Error%") as "Error%" by Time component

| sort - Error</query>

<earliest>$tokTime.earliest$</earliest>

<latest>$tokTime.latest$</latest>

<sampleRatio>1</sampleRatio>

</search>

<option name="charting.axisLabelsX.majorLabelStyle.overflowMode">ellipsisMiddle</option>

<option name="charting.axisLabelsX.majorLabelStyle.rotation">-45</option>

<option name="charting.axisTitleX.visibility">visible</option>

<option name="charting.axisTitleY.visibility">visible</option>

<option name="charting.axisTitleY2.visibility">visible</option>

<option name="charting.axisX.abbreviation">none</option>

<option name="charting.axisX.scale">linear</option>

<option name="charting.axisY.abbreviation">none</option>

<option name="charting.axisY.scale">linear</option>

<option name="charting.axisY2.abbreviation">none</option>

<option name="charting.axisY2.enabled">1</option>

<option name="charting.axisY2.scale">inherit</option>

<option name="charting.chart">column</option>

<option name="charting.chart.bubbleMaximumSize">50</option>

<option name="charting.chart.bubbleMinimumSize">10</option>

<option name="charting.chart.bubbleSizeBy">area</option>

<option name="charting.chart.nullValueMode">gaps</option>

<option name="charting.chart.overlayFields">Error%</option>

<option name="charting.chart.showDataLabels">none</option>

<option name="charting.chart.sliceCollapsingThreshold">0.01</option>

<option name="charting.chart.stackMode">default</option>

<option name="charting.chart.style">shiny</option>

<option name="charting.drilldown">none</option>

<option name="charting.layout.splitSeries">0</option>

<option name="charting.layout.splitSeries.allowIndependentYRanges">0</option>

<option name="charting.legend.labelStyle.overflowMode">ellipsisMiddle</option>

<option name="charting.legend.mode">standard</option>

<option name="charting.legend.placement">bottom</option>

<option name="charting.lineWidth">2</option>

<option name="height">600</option>

<option name="refresh.display">progressbar</option>

<option name="trellis.enabled">1</option>

<option name="trellis.scales.shared">0</option>

<option name="trellis.size">medium</option>

<option name="trellis.splitBy">Time</option>

</chart>

</panel>

</row>

</form>

| makeresults | eval message= "Happy Splunking!!!"


Hi,

Thanks for the query. I ran it, and I can see the graph as I wanted. Just to confirm: this query gives the 20 source addresses with the highest percentage usage, right? Also, if I save this as a dashboard, will the graph always be dynamic? That is, will the top 20 source addresses and their values keep updating, or do we need to configure some setting?
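Regarding the first question, a rough Python model of the ranking steps suggests the answer is "yes, per day". Within a day every address is divided by the same daily total, so sorting by count and sorting by percentage give the same order, and sort - _time Count | streamstats count as sno by _time | search sno<=20 keeps the top 20 for each day rather than over the whole range. The function names and sample rows below are hypothetical; this models the logic, not Splunk itself.

```python
def top_n_per_bucket(rows, n):
    """rows: list of (bucket, ip, score). Keeps the n highest-scoring ips per
    bucket, mirroring: sort - _time score | streamstats count as sno by _time
    | search sno<=n"""
    ranked = {}
    for bucket, ip, score in sorted(rows, key=lambda r: (-r[0], -r[2])):
        ranked.setdefault(bucket, [])
        if len(ranked[bucket]) < n:  # sno<=n
            ranked[bucket].append(ip)
    return ranked


def as_percentages(rows):
    """Replace each count with its share of the bucket total (eventstats + eval)."""
    totals = {}
    for bucket, _, count in rows:
        totals[bucket] = totals.get(bucket, 0) + count
    return [(b, ip, c / totals[b] * 100) for b, ip, c in rows]
```

With rows `[(0, "a", 5), (0, "b", 3), (0, "c", 2), (1, "a", 1), (1, "c", 4)]` and `n=2`, both `top_n_per_bucket(rows, 2)` and `top_n_per_bucket(as_percentages(rows), 2)` keep `a, b` for bucket 0 and `c, a` for bucket 1.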


@muralisushma7, please try the following:

<YourBaseSearch>

| top 20 source_address

| chart sum(count) as Total last(percent) as percent by source_address

| sort - Total

Optional Suggestions 🙂

1. You could also consider creating a chart overlay of the percent field over Total.

2. Another option is to use your current query as the base search for post-processing, and use the percent field in the post-process search to plot a pie chart alongside your existing column chart.

| makeresults | eval message= "Happy Splunking!!!"
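For comparison with the per-day pipeline earlier in the thread, top ranks values over the whole search time range and reports each value's share of the matching events, so it never returns more than N rows. The following is a rough Python approximation; `top_values` is a hypothetical stand-in, not a Splunk API, and it assumes percent is taken over all events passed in.

```python
from collections import Counter


def top_values(values, limit=20):
    """Approximate `top limit=N field`: (value, count, percent) for the
    `limit` most frequent values, percent taken over all events seen."""
    counts = Counter(values)
    total = sum(counts.values())
    return [(v, c, c / total * 100) for v, c in counts.most_common(limit)]
```

For example, `top_values(["a", "a", "b", "a", "c", "b"], limit=2)` keeps `a` (3 events, 50%) and `b` (2 events, about 33.3%), and drops `c`.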


Hi Niketnilay,

Regarding the query below, which you helped me with:

source="jnpr-syslog" policy_name=Internet_Lab_Policy_Gateway_Logging source

| bin _time span=1d

| stats count as New_Connections by source_address _time

| eventstats sum(New_Connections) as Total by _time

| eval "%New_Connections"=round((New_Connections/Total)*100,2)

| fields - Total

| sort - _time New_Connections

| streamstats count as sno by _time

| search sno<=20

| fields - sno

| eval _time=strftime(_time,"%Y/%m/%d")

| rename _time as Time

| stats last(New_Connections) as New_Connections last("%New_Connections") as "%New_Connections" by source_address

| sort - New_Connections

This query doesn't return any output when run in Fast mode, but it does in Verbose mode. A dashboard has been built on this query, and by default it runs in Fast mode. The dashboard also has a time input. As a result, when I select real-time data (i.e., a 1-minute window), the query produces no output because it runs in Fast mode; when I manually switch to Verbose mode, it does. How can I make the dashboard always run in Verbose mode, or else how can I change the query so that it returns output in Fast mode too?

Let me know if you need any more information.

Regards,

Sushma.
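One detail in this version of the query: the final stats groups by source_address only, without Time. Splunk documents last() as returning the last value seen in the input order, and after sort - _time that is the oldest day's row. Every address that made the top 20 on any day also gets its own row, so the chart can show more than 20 addresses when the range spans several buckets. A hypothetical Python model of that step (function name and sample rows are illustrative only):

```python
def collapse_by_address(rows):
    """rows: (day, ip, count) tuples already cut to each day's top n and sorted
    newest day first, as after `sort - _time ...` and `search sno<=n`.

    Models `stats last(count) as count by source_address`: rows are read in
    order and the last one seen for each ip is kept, i.e. its oldest day.
    """
    result = {}
    for _day, ip, count in rows:
        result[ip] = count  # later (older) rows overwrite earlier (newer) ones
    return result
```

With rows `[(2, "a", 9), (2, "b", 7), (1, "c", 8), (1, "a", 4)]`, each day's top 2 has two addresses, but the collapsed result has three rows, and address `a` shows its day-1 value (4) rather than its day-2 value (9).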


Hi,

Yes, we do have real-time events. Previously we were not concerned about them, but now we need to consider them too.

As suggested, I removed the line | bin _time span=1d and ran the query, and it does produce output regardless of the search mode. However, for a 1-minute window in Verbose mode, the output with that line removed differs from the output with it included. Why is there a difference? Why don't the two outputs match, and which one should I consider correct?

Please guide.

Thanks & Regards,

Sushma.
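One likely reason for the difference: without the bin line, _time keeps each event's own timestamp, so stats ... by source_address _time, the eventstats total and the streamstats ranking are all computed per distinct timestamp instead of per day. The Python sketch below (hypothetical function and data) shows how the same events yield different percentages in the two cases.

```python
from collections import defaultdict


def percentages(events, span=None):
    """events: iterable of (epoch_seconds, ip).

    span=86400 models `bin _time span=1d`; span=None models removing the bin
    line, so each distinct raw timestamp becomes its own _time group.
    Returns {(group, ip): percent of that group's events}.
    """
    counts = defaultdict(int)
    for ts, ip in events:
        group = ts - ts % span if span else ts
        counts[(group, ip)] += 1  # stats count ... by source_address _time
    totals = defaultdict(int)
    for (group, _), c in counts.items():
        totals[group] += c  # eventstats sum(...) as Total by _time
    return {key: round(c / totals[key[0]] * 100, 2) for key, c in counts.items()}
```

For events `[(100, "a"), (100, "b"), (130, "a"), (150, "a")]`, the daily version gives `a` 75% and `b` 25%, while the unbinned version gives three separate groups (`a` at 50%, 100% and 100%). The final stats last(...) by source_address then keeps only one of those per-timestamp values, which would explain why the two outputs disagree.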


Sorry, I just wanted you to test whether events are coming in. The reason we had span=1d initially was your earlier requirement to include Time in the Top stats.

Now, since you want a real-time 1-minute window, you need to make the following changes:

1) | bin _time span=1m

2) | eval _time=strftime(_time,"%H:%M")

Test with the following run-anywhere search based on Splunk's _internal index:

index=_internal sourcetype=splunkd log_level=INFO

| bin _time span=1m

| stats count as Error by component _time

| eventstats sum(Error) as Total by _time

| eval "Error%"=round((Error/Total)*100,2)

| fields - Total

| sort - _time Error

| streamstats count as sno by _time

| search sno<=10

| fields - sno

| eval _time=strftime(_time,"%H:%M")

| rename _time as Time

| stats last(Error) as Error last("Error%") as "Error%" by Time component

| sort - Error

The following is the corresponding Simple XML code for the real-time 1-minute window; the Trellis configuration remains the same:

<form>

<label>Timechart with Volume and Percent with Trellis</label>

<fieldset submitButton="false"></fieldset>

<row>

<panel>

<input type="time" token="tokTime" searchWhenChanged="true">

<label></label>

<default>

<earliest>rt-1m</earliest>

<latest>rt</latest>

</default>

</input>

<chart>

<title>Top 10 Errors by Component per Minute</title>

<search>

<query>index=_internal sourcetype=splunkd log_level=INFO

| bin _time span=1m

| stats count as Error by component _time

| eventstats sum(Error) as Total by _time

| eval "Error%"=round((Error/Total)*100,2)

| fields - Total

| sort - _time Error

| streamstats count as sno by _time

| search sno<=10

| fields - sno

| eval _time=strftime(_time,"%H:%M")

| rename _time as Time

| stats last(Error) as Error last("Error%") as "Error%" by Time component

| sort - Error</query>

<earliest>$tokTime.earliest$</earliest>

<latest>$tokTime.latest$</latest>

<sampleRatio>1</sampleRatio>

</search>

<option name="charting.axisLabelsX.majorLabelStyle.overflowMode">ellipsisMiddle</option>

<option name="charting.axisLabelsX.majorLabelStyle.rotation">-45</option>

<option name="charting.axisTitleX.visibility">visible</option>

<option name="charting.axisTitleY.visibility">visible</option>

<option name="charting.axisTitleY2.visibility">visible</option>

<option name="charting.axisX.abbreviation">none</option>

<option name="charting.axisX.scale">linear</option>

<option name="charting.axisY.abbreviation">none</option>

<option name="charting.axisY.scale">linear</option>

<option name="charting.axisY2.abbreviation">none</option>

<option name="charting.axisY2.enabled">1</option>

<option name="charting.axisY2.scale">inherit</option>

<option name="charting.chart">column</option>

<option name="charting.chart.bubbleMaximumSize">50</option>

<option name="charting.chart.bubbleMinimumSize">10</option>

<option name="charting.chart.bubbleSizeBy">area</option>

<option name="charting.chart.nullValueMode">gaps</option>

<option name="charting.chart.overlayFields">Error%</option>

<option name="charting.chart.showDataLabels">none</option>

<option name="charting.chart.sliceCollapsingThreshold">0.01</option>

<option name="charting.chart.stackMode">default</option>

<option name="charting.chart.style">shiny</option>

<option name="charting.drilldown">none</option>

<option name="charting.layout.splitSeries">0</option>

<option name="charting.layout.splitSeries.allowIndependentYRanges">0</option>

<option name="charting.legend.labelStyle.overflowMode">ellipsisMiddle</option>

<option name="charting.legend.mode">standard</option>

<option name="charting.legend.placement">bottom</option>

<option name="charting.lineWidth">2</option>

<option name="height">250</option>

<option name="refresh.display">progressbar</option>

<option name="trellis.enabled">1</option>

<option name="trellis.scales.shared">0</option>

<option name="trellis.size">medium</option>

<option name="trellis.splitBy">Time</option>

</chart>

</panel>

</row>

</form>

| makeresults | eval message= "Happy Splunking!!!"


Let me check on this and get back to you.

Thanks & Regards,

Sushma.


Hi,

I validated the code above; please find my questions below:

Old query:

1) | bin _time span=1d

2) | eval _time=strftime(_time,"%Y/%m/%d")

This worked perfectly, displaying the top 20 IP addresses on the X-axis for the selected time range, except for real-time events.

New query:

1) | bin _time span=1m

2) | eval _time=strftime(_time,"%H:%M")

Using either | eval _time=strftime(_time,"%H:%M") or | eval _time=strftime(_time,"%Y/%m/%d") produces the same output for span=1m. Is there a need to change this?

Moreover, when I change to span=1m, output is produced even for real-time events as required, but almost all the IP addresses are listed on the X-axis, which makes the dashboard look cluttered. I want only the top 20 IP addresses listed, for both real-time and relative time ranges.

Is that possible?

Thanks & Regards,

Sushma.
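On the strftime question: after bin _time span=1m, both formats turn each minute bucket into a text label, but %Y/%m/%d gives every minute of the same day the same label, so the later stats ... by Time would merge those minutes. A 1-minute real-time window usually covers only one or two minute buckets, which may be why the outputs looked identical. A sketch using Python's `time.strftime` with the same format codes (UTC assumed; `minute_labels` is a hypothetical helper):

```python
import time


def minute_labels(timestamps, fmt):
    """Floor each epoch timestamp to its minute (like bin _time span=1m) and
    format it like eval _time=strftime(_time, fmt). Returns distinct labels."""
    return sorted({time.strftime(fmt, time.gmtime(ts - ts % 60)) for ts in timestamps})
```

For timestamps within one minute (`[0, 15, 59]`) both formats yield a single label, but across three minutes (`[0, 60, 125]`) `%H:%M` yields three labels while `%Y/%m/%d` still yields one.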


@muralisushma7, it helps to understand the difference between a real-time rolling window and a historical time window (which can be near-real-time).

With span=1d over the last 7 or 30 days of data, you get a daily breakdown of the top 20 IPs. This works well.

With a real-time 1-minute window, the search only ever looks at the last minute of data; historical data does not come into it. So you will see 20 IPs in the 1-minute real-time version of the query I provided only if at least 20 IPs push data to Splunk every minute.

So you have two options:

1) Use a relative time range in your search, as proposed earlier, and auto-refresh the panel every minute to get the latest stats. Only today's stats will change from minute to minute, depending on how much traffic you are getting from each IP. Note that previous days' data will not change and adds overhead every time the dashboard refreshes. (If performance becomes an issue, you can look into summary indexing to collect summarized data every minute for the past minute.)

2) Only if you have plenty of traffic from 20+ IPs every minute, use a real-time 1-minute window in a separate panel with the new query to break down data every minute. For previous days, keep using the first query with the daily breakdown.

| makeresults | eval message= "Happy Splunking!!!"
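To illustrate the point about traffic volume: per-minute ranking keeps at most N addresses per minute, so a minute in which fewer addresses send data yields fewer bars. A hypothetical Python model (function name and sample data are illustrative only):

```python
from collections import Counter


def bars_per_minute(events, n=20):
    """events: iterable of (epoch_seconds, ip).

    Returns {minute_start: bars}, where bars = min(n, distinct ips that sent
    data in that minute), i.e. how many columns a per-minute top-n panel gets.
    """
    per_minute = {}
    for ts, ip in events:
        per_minute.setdefault(ts - ts % 60, Counter())[ip] += 1
    return {minute: len(counter.most_common(n)) for minute, counter in per_minute.items()}
```

With three addresses active in the first minute and one in the second, a top-20 panel would show 3 bars and then 1 bar; only with 20 or more active addresses per minute would every panel show 20.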


Hi,

I don't think I fully understood what you said. My concern is that with span=1d the dashboard displays the top 20 IP addresses on the X-axis, but when I change to span=1m, real-time events are displayed but the IP addresses are not restricted to the top 20. More than 20 appear, and I don't need all of them. I am only concerned with the top 20 IP addresses, whether the time range is real-time or relative.

Thanks & Regards,

Sushma.


Also, I can add a separate panel for real-time events using the new query; however, what I want is the number of IP addresses on the X-axis restricted to the top 20. This problem doesn't appear for relative time ranges: they always show 20 IP addresses or fewer, never more.

If you can help me restrict this, I don't think a separate panel for real-time events is actually required.

Thanks & Regards,

Sushma.


Hi,

Did you get a chance to look into it?

Thanks & Regards,

Sushma.


@muralisushma7, I understand your problem now. I think somewhere along the way I missed the Top 20 part. I will have to look into the query again, maybe starting from scratch.

| makeresults | eval message= "Happy Splunking!!!"
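The top-20 issue can be reproduced outside Splunk: ranking the top N inside every minute and then putting all minutes on one chart can show many more than N distinct addresses, whereas ranking once over the whole window caps the chart at N. The following is a rough Python sketch with hypothetical data and function names; the second function corresponds roughly to counting by source_address alone (as top 20 source_address does), which is a suggestion to test rather than a confirmed fix.

```python
from collections import Counter, defaultdict


def union_of_per_minute_top_n(events, n):
    """Distinct ips that reach the top n in at least one minute bucket."""
    per_minute = defaultdict(Counter)
    for ts, ip in events:
        per_minute[ts - ts % 60][ip] += 1
    return {ip for counter in per_minute.values() for ip, _ in counter.most_common(n)}


def overall_top_n(events, n):
    """Top n ips over the whole window, ranked once."""
    return {ip for ip, _ in Counter(ip for _, ip in events).most_common(n)}
```

With a different leader in each of three minutes and `n=1`, the per-minute approach puts three addresses on the X-axis, while the overall ranking keeps only the single busiest one.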


Ok.

Thanks & Regards,

Sushma.


Hi,

I verified the code above in my environment, and here are my questions:

Old Query:

1) | bin _time span=1d

2) | eval _time=strftime(_time,"%Y/%m/%d")

This worked perfectly, displaying the top 20 IP addresses for the selected time range, except that it did not work for real-time events.

New query:

1) | bin _time span=1m

2) | eval _time=strftime(_time,"%Y/%m/%d")

Here, | eval _time=strftime(_time,"%Y/%m/%d") and | eval _time=strftime(_time,"%H:%M") produced the same result for span=1m; I did not see any difference.

This query produces the real-time events as required, but it shows too many IP addresses on the X-axis instead of just 20. As a result, the dashboard looks cluttered and the data is not clear from an end-user perspective. I want only the top 20 IP addresses displayed on the X-axis, whether for real-time or relative time ranges.

Is that possible?

Thanks & Regards,

Sushma.


Sorry for the duplicate comment; I posted it twice by mistake.

Thanks & Regards,

Sushma.


Are you getting data every minute?

Can you remove the second line, | bin _time span=1d, when you run in real-time mode, and test?

Also, if your stats use span=1d, why do you need a real-time 1-minute window?

Instead of a real-time 1-minute window, can you try refreshing the panel every minute?

| makeresults | eval message= "Happy Splunking!!!"

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi,

Yes, we are getting data every minute and real-time events exist. Previously we were not concerned about them and the query was good enough, but now we also want to see a graph of the real-time events. As you suggested, I tried removing | bin _time span=1d, but the results then differ from those with the span included. To be precise: with the span included and a 1-minute time window selected, the output is different from the output with the span removed and the same 1-minute window. Why is there a difference, and what can be done?

As for refreshing the panel every minute, I am not sure how to achieve this.

Kindly respond.

Thanks & Regards,

Sushma.