- ES incident_review_lookup

Dear All,

In Splunk ES, is it possible to create a real-time alert for any update to the incident_review KV store? The search (| inputlookup append=T incident_review_lookup) always lists the entire contents of the incident_review KV store. I want to use the KV store's time field as the reference time for the Splunk search. Any help is really appreciated.

Thank you,

You could run a search every five minutes like this:

|`incident_review` | where _time >= relative_time(now(), "-6m@m") AND _time < relative_time(now(), "-m@m")

That'll look at each new entry exactly once. You rarely really need actual realtime searches to fulfil requirements - if quick reaction is a thing, you could schedule this every minute and reduce the "indexing delay" shift:

|`incident_review` | where _time >= relative_time(now(), "-75s") AND _time < relative_time(now(), "-15s")

Anyone reacting to that alert is hardly going to be quick enough for that 75s maximum delay to matter.
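To see why the five-minute schedule looks at each entry exactly once, here is a minimal Python sketch of the window arithmetic. It is not Splunk code: it assumes the search fires every five minutes with a few seconds of scheduler lag, that `@m` snaps down to the whole minute, and the function names are hypothetical.

```python
from datetime import datetime, timedelta, timezone


def snap_to_minute(ts):
    """Mimic the '@m' snap: drop seconds and microseconds."""
    return ts.replace(second=0, microsecond=0)


def window(run_time):
    """Half-open window [now-6m@m, now-1m@m) of the five-minute search."""
    earliest = snap_to_minute(run_time - timedelta(minutes=6))
    latest = snap_to_minute(run_time - timedelta(minutes=1))
    return earliest, latest


def runs_matching(entry_time, run_times):
    """Return every scheduled run whose window contains entry_time."""
    hits = []
    for run_time in run_times:
        earliest, latest = window(run_time)
        if earliest <= entry_time < latest:
            hits.append(run_time)
    return hits


# Hypothetical schedule: four runs, five minutes apart, each 7 seconds late.
first_run = datetime(2024, 1, 1, 12, 0, 7, tzinfo=timezone.utc)
run_times = [first_run + timedelta(minutes=5 * i) for i in range(4)]

# Hypothetical incident review entry written at 12:03:30.
entry = datetime(2024, 1, 1, 12, 3, 30, tzinfo=timezone.utc)
matches = runs_matching(entry, run_times)
print(len(matches))
```

Because each window's upper bound equals the next window's lower bound and the comparison is half-open, consecutive runs tile the timeline with no gap and no overlap, as long as no scheduled run is skipped.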

If you have sufficient permissions you could try a different approach: Updates to the incident review lookup should leave a trail in _audit. Building a real-time or scheduled search with the regular timerange controls should be quite simple.

Does this work?

|`incident_review` | convert ctime(time) | eval _time=time

OR

|`incident_review` | convert ctime(time) ctime(_time) | eval _time=time

I've had to do this so many times, but I can't remember exactly how. I would usually use strftime or strptime, but I can't make it work today. You might try one of those in place of my convert and evals:

|`incident_review` | eval _time=strftime(time,"%Y-%m-%d %H:%M:%S.%3N")
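The two functions are easy to mix up: in SPL, strftime turns an epoch number into a formatted string, while strptime parses a formatted string into an epoch number, and _time is expected to hold an epoch number. Python's datetime methods of the same names work in the same directions, so the following sketch may help keep them apart. The format string is an assumption (no subseconds), and the helper names are hypothetical.

```python
from datetime import datetime, timezone

FMT = "%Y-%m-%d %H:%M:%S"  # assumed format; the real lookup field may differ


def epoch_to_text(epoch):
    """Same direction as SPL strftime(): epoch number in, string out."""
    return datetime.fromtimestamp(epoch, tz=timezone.utc).strftime(FMT)


def text_to_epoch(text):
    """Same direction as SPL strptime(): string in, epoch number out."""
    parsed = datetime.strptime(text, FMT)
    return parsed.replace(tzinfo=timezone.utc).timestamp()


epoch = 1700000000
text = epoch_to_text(epoch)
roundtrip = text_to_epoch(text)
print(text, roundtrip)
```

If the lookup's time field already holds an epoch number, it can presumably be assigned to _time without either conversion; if it holds a formatted string, the strptime direction is the one that yields a usable _time.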

I have tested all three search queries. No luck 😞. Each one dumped the entire contents.

How about this using lookup instead:

|lookup incident_review_lookup OUTPUT time AS _time rule_id AS rule ....

To use the lookup command instead, we need a lookup field, which we don't have.

Error : Error in 'lookup' command: Must specify one or more lookup fields.

Very true... Interesting problem, which has piqued my interest ;-D.

So could you write the inputlookup results to a summary index and then query that summary index directly, with some time = _time trickery?

That "summary index" already exists - it's called _audit.

I guess you lose the real time then. So it's like you need to copy inputlookup.py to inputlookupRT.py and then dive into Python so that it yields one result at a time versus all results.

I am interested in helping with this custom Splunk command if you like.

You might get better and quicker results opening a ticket with Splunk though.

Thank you all for the response.

@jkat54,

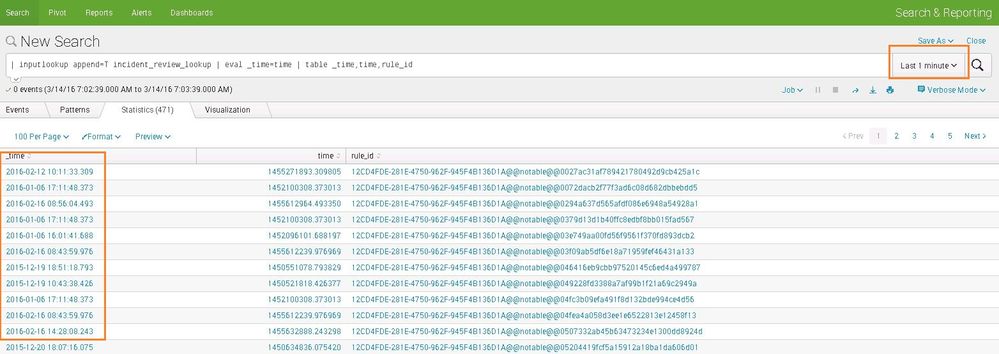

The time field in the lookup and the _time field shown in the results are the same; only the format is different. Please see the attached screenshot.

I searched over the last 1 minute, and it still lists the entire contents of the lookup table.

@martin_mueller

The solution you suggested was my workaround. I need a real-time search rather than a scheduled search, because I want to run a script for every search result (using script argument $8). I think the action can be triggered for every search result (trigger condition "Per result") only for real-time search alerts.

Thanks

Thanks for the detail (I don't have a Splunk ES license, so I needed to see it). I will write the search ASAP. On mobile right now.

Re your comment under the question: You can run per-result alert actions regardless of realtime or not.
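For the script that picks up results through argument $8, a minimal Python sketch could look like the following. It assumes $8 is the path to a gzipped CSV of the search results, as with legacy scripted alerts, and that the results carry rule_id and time fields; the function names and sample rows are hypothetical, and the temporary file only stands in for what Splunk would hand over.

```python
import csv
import gzip
import os
import tempfile


def read_results(path):
    """Return the alert results as a list of dicts, one per result row."""
    with gzip.open(path, mode="rt", newline="", encoding="utf-8") as handle:
        return list(csv.DictReader(handle))


def handle_result(row):
    """Hypothetical per-result action; replace with the real work."""
    return "rule_id={} time={}".format(row.get("rule_id", ""), row.get("time", ""))


def process(argv):
    """Run the per-result action for every row in the file named by argv[8]."""
    if len(argv) < 9:
        # Assumption: the results file path arrives as the ninth argv entry ($8).
        return []
    return [handle_result(row) for row in read_results(argv[8])]


# Stand-in for a real invocation: write a small gzipped results file.
with tempfile.TemporaryDirectory() as tmp_dir:
    results_path = os.path.join(tmp_dir, "results.csv.gz")
    with gzip.open(results_path, mode="wt", newline="", encoding="utf-8") as out:
        writer = csv.writer(out)
        writer.writerow(["rule_id", "time"])
        writer.writerow(["rule-1", "1700000000"])
        writer.writerow(["rule-2", "1700000060"])
    fake_argv = ["script.py"] + [""] * 7 + [results_path]
    lines = process(fake_argv)

print(lines)
```

In an actual alert script, `process(sys.argv)` would replace the stand-in section. Looping over the rows inside the script also gives per-result handling even when the alert itself triggers once per scheduled run.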

Sorry, my bad. Yes, we can run it for every result.

This is my workaround if I am not able to create a real-time alert.

Thanks,

Just go with the "workaround" - it's extremely rare that someone actually needs a real-time alert as opposed to a frequently running scheduled alert.

Additionally, running a real-time search on a lookup feels really wrong. The real-time facility is there to intercept matching events during indexing, and that doesn't happen with lookups.

Lookups are not the same as the KV store. I think you've confused the terms. Lookups are CSV files on the filesystem. The KV store is a MongoDB instance.

It sounds like you want to use the time field in the lookup in conjunction with your time picker. If you give us an example of the time field name and the value returned from the lookup, we can tell you how to use |eval _time=convert(lookupTimeField) or similar so that the time picker applies to the timestamp in the lookup.