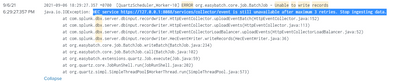

Why is DB Connect 3 sometimes unable to write records (read timed out, unable_to_write_batch)?

dailv1808

Path Finder

08-26-2021

12:59 AM

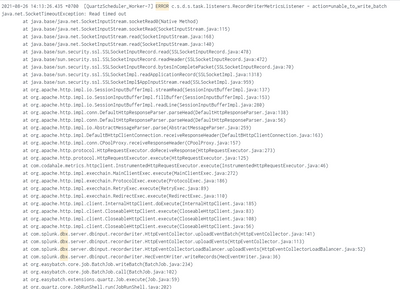

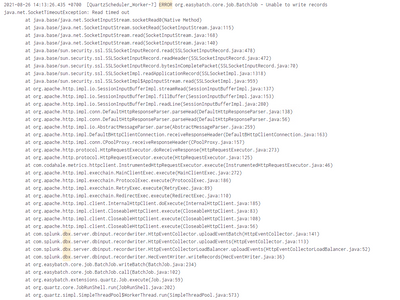

I have DB Connect 3.1.5 running on a Splunk 8.1 instance.

Sometimes I get the errors below, and it stops ingesting data.

codebuilder

Influencer

08-26-2021

07:06 AM

That generally means your query is running too long or returning too many results, and you've exhausted the allocated memory or hit a config limit.

----

An upvote would be appreciated and Accept Solution if it helps!

dailv1808

Path Finder

08-31-2021

06:44 AM

Thanks for your response. In my case, I think it is returning too many results. Is there any way to fix it?

codebuilder

Influencer

08-31-2021

08:37 AM

You can limit results by adding "maxrows" to your dbxquery.

For example, maxrows=1000. You can test different values until you find one that doesn't cause the issue.
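As a minimal sketch of that suggestion, the search below caps the result set at 1000 rows. The connection name `my_connection` and the table `my_table` are hypothetical placeholders, not values from this thread, so substitute your own.

```
| dbxquery connection="my_connection" query="SELECT * FROM my_table" maxrows=1000
```

Note that `maxrows` only limits what an ad hoc `dbxquery` search returns. If the errors come from a scheduled DB input, the equivalent limits would be set on the input itself.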

----

An upvote would be appreciated and Accept Solution if it helps!

dailv1808

Path Finder

09-07-2021

12:44 AM

The error occurs on the HF (where DBC3 is installed).

dailv1808

Path Finder

09-06-2021

02:49 AM

I tried many different "maxrows" values, from 1000 to unlimited, and fetch size values from 300 to 1000, 3000, 30000, and so on, but I still get the errors above.

isoutamo

SplunkTrust

08-31-2021

01:54 PM

Another thing you could try is extending the timeout.

R. Ismo

dailv1808

Path Finder

09-06-2021

04:36 AM

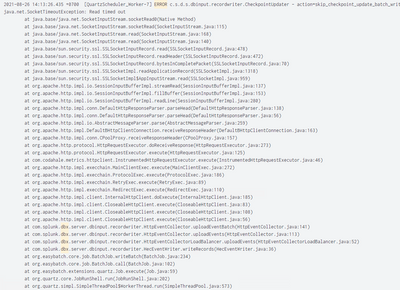

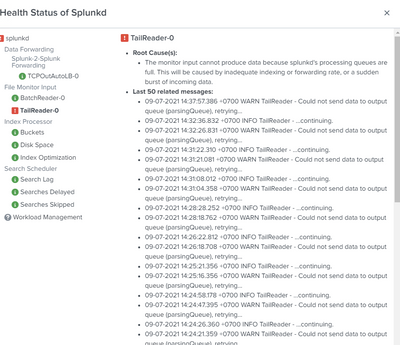

Sometimes I get an HEC service error.

dailv1808

Path Finder

09-06-2021

02:50 AM

I changed the timeout to 300 seconds, but I still get the errors.
