Splunk Add-on for Amazon Web Services Bug: cloudwa...

I believe the Splunk Add-on for Amazon Web Services has a new bug.

All inputs that gather CloudWatch log events now have a trailing ">". This is not great. I believe this started after AWS's recent update to CloudWatch.

This is the second complete blocker I have run into. Not only can I not define a custom sourcetype to use when pulling data from S3, but now this bug exists as well.

See here:

https://answers.splunk.com/answers/326400/splunk-appadd-on-for-aws-how-to-modify-awss3-sourc.html

Come on Splunk! Let's get this add-on working and useful, please!

The answer here is that this is a bug that Splunk was able to reproduce, and hopefully we will hear from @rmeynov_splunk about a fix date.

Hi,

I can't reproduce this or your other problem. My suggestion is to review http://docs.splunk.com/Documentation/AddOns/released/AWS/Troubleshooting and open a support ticket if that doesn't help uncover it. Software isn't perfect, but you seem to be running into issues that are unique.

I downvoted this post because it was a bug, and it was fixed and released by Splunk.

Thanks for the quick reply. I am always willing to admit this could be user error.

I will edit my question with more detail regarding version, data, and configuration.

Which version are you on? What does your data look like?

I appreciate the sentiment, but this add-on is working fine for many customers, so this must be an edge case. Our add-ons go through comprehensive testing, but we cannot catch everything.

We do love our customers, so we are here to help.

Reproduced the problem and filed a bug with dev. It's going to take a while, but we will address this. I will update this thread with any news.

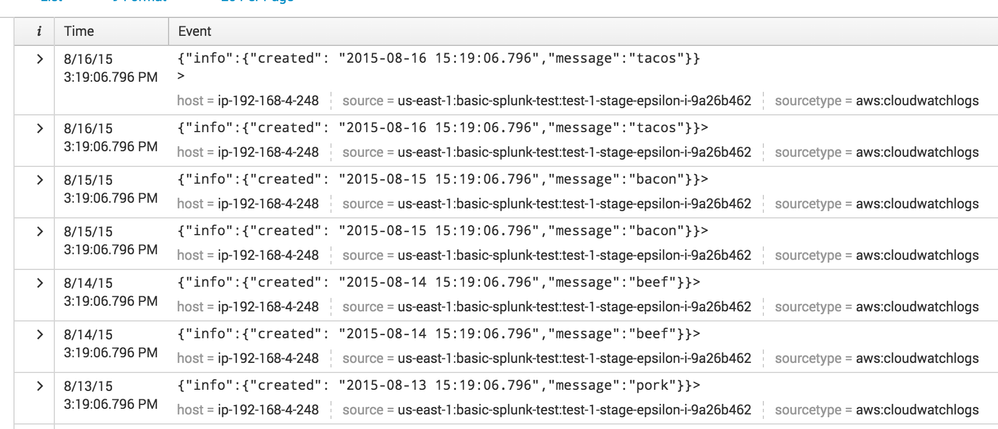

More evidence something is not right. It could still be me.

Per @rmeynov's comment above:

I created a new index.

I created a new AWS input of type "CloudWatch Logs".

I configured it to pull from the log group containing the test JSON data shown in the picture in my previous answer.

I configured it to use sourcetype = "aws:cloudwatchlogs", as the instructions indicate.

Result:

Events once indexed STILL have a trailing ">"
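
A quick way to confirm that the extra character is the only thing breaking the JSON is to paste a few indexed events into a local check. The Python sketch below is an illustration only: `diagnose_event` is a hypothetical helper, not part of the add-on or of Splunk, and it assumes each event is a single JSON object on one line.

```python
import json


def diagnose_event(raw: str) -> str:
    """Classify a raw event string (hypothetical diagnostic helper).

    Returns "ok" if the text parses as JSON, "trailing_gt" if it parses
    only after removing one trailing ">", and "invalid" otherwise.
    """
    try:
        json.loads(raw)
        return "ok"
    except json.JSONDecodeError:
        pass
    stripped = raw.rstrip()
    if stripped.endswith(">"):
        try:
            json.loads(stripped[:-1])  # drop exactly one trailing ">"
            return "trailing_gt"
        except json.JSONDecodeError:
            pass
    return "invalid"


if __name__ == "__main__":
    good = '{"info":{"created": "2015-08-12 15:19:06.796","message":"chicken"}}'
    print(diagnose_event(good))        # ok
    print(diagnose_event(good + ">"))  # trailing_gt
    print(diagnose_event("not json"))  # invalid
```

If every event copied from the search results comes back as "trailing_gt", the data itself is intact and only the appended character differs from what CloudWatch stores.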

@mreynov and @jcoates

I was unable to edit my original question, so I am adding more detail here.

Splunk version is 6.4.2

Splunk Add-on for AWS version 4.0.0

I have included two screenshots.

One is of my events in CloudWatch. Notice they are well-formed JSON.

The second is of my events in Splunk. Notice the trailing ">"; they are not well-formed JSON.

As a test, I used the command line to add an index and oneshot this same fake log file into it with the same sourcetype.

I can't attach a third picture (only two are allowed) of that indexed data, so you will have to trust me that it worked and that it is well-formed JSON when I search that index.

Reference

Oneshot CLI commands executed, and the test log file:

```
root@ip-192-168-4-248:/opt/splunk/bin# ./splunk add index tshjsonuifromcl
Index "tshjsonuifromcl" added.
root@ip-192-168-4-248:/opt/splunk/bin# ./splunk add oneshot /opt/splunk/data/
fake-log.json test.json
root@ip-192-168-4-248:/opt/splunk/bin# ./splunk add oneshot /opt/splunk/data/fake-log.json -sourcetype tshjsonui -index tshjsonuifromcl
Oneshot '/opt/splunk/data/fake-log.json' added
root@ip-192-168-4-248:/opt/splunk/bin# cat /opt/splunk/data/fake-log.json
{"info":{"created": "2015-08-12 15:19:06.796","message":"chicken"}}
{"info":{"created": "2015-08-13 15:19:06.796","message":"pork"}}
{"info":{"created": "2015-08-14 15:19:06.796","message":"beef"}}
{"info":{"created": "2015-08-15 15:19:06.796","message":"bacon"}}
{"info":{"created": "2015-08-16 15:19:06.796","message":"tacos"}}
```
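
To back up the statement that every line of fake-log.json is well-formed JSON, a small local check like the one below could be run before and after ingestion. It is a minimal Python sketch: `invalid_json_lines` is a hypothetical helper, and the sample lines are copied from the `cat` output above.

```python
import json
from typing import Iterable, List, Tuple

# Lines copied verbatim from the cat output of fake-log.json above.
SAMPLE = """\
{"info":{"created": "2015-08-12 15:19:06.796","message":"chicken"}}
{"info":{"created": "2015-08-13 15:19:06.796","message":"pork"}}
{"info":{"created": "2015-08-14 15:19:06.796","message":"beef"}}
{"info":{"created": "2015-08-15 15:19:06.796","message":"bacon"}}
{"info":{"created": "2015-08-16 15:19:06.796","message":"tacos"}}
"""


def invalid_json_lines(lines: Iterable[str]) -> List[Tuple[int, str]]:
    """Return (line_number, text) for every non-blank line that is not valid JSON."""
    bad = []
    for lineno, line in enumerate(lines, start=1):
        text = line.strip()
        if not text:
            continue  # skip blank lines
        try:
            json.loads(text)
        except json.JSONDecodeError:
            bad.append((lineno, text))
    return bad


if __name__ == "__main__":
    print(invalid_json_lines(SAMPLE.splitlines()))  # []
    # An event exported from Splunk with a trailing ">" would be reported:
    print(invalid_json_lines(['{"a": 1}>']))  # [(1, '{"a": 1}>')]
```

To check the real file instead of the embedded sample, pass an open file handle, for example `with open("/opt/splunk/data/fake-log.json") as f: print(invalid_json_lines(f))`.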

Settings

These are my settings for my input and sourcetype (apps/Splunk_TA_aws/local) conf files:

```ini
[basic-splunk-test]
account = splunk
delay = 1800
groups = basic-splunk-test
index = chicken4
interval = 600
only_after = 1970-01-01T00:00:00
region = us-east-1
sourcetype = tshjsonui
stream_matcher = .*

[tshjsonui]
DATETIME_CONFIG =
INDEXED_EXTRACTIONS = json
NO_BINARY_CHECK = true
SHOULD_LINEMERGE = false
TIMESTAMP_FIELDS = info.created
TIME_FORMAT = %Y-%d-%m %H:%M:%S.%3Q
category = Custom
description = added via ui
pulldown_type = 1
```
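
Separate from the trailing ">", the TIME_FORMAT above (%Y-%d-%m) puts the day before the month, while the info.created values in fake-log.json look like year-month-day: the 08 stays fixed and the last field runs from 12 to 16. The sketch below uses Python's `strptime` as a stand-in for Splunk's timestamp parser. Treating Splunk's %3Q as Python's %f is an assumption, and Splunk's own fallback behavior when a format does not match may differ; this only shows the format mismatch.

```python
from datetime import datetime
from typing import Optional

# info.created values copied from fake-log.json above.
CREATED = [
    "2015-08-12 15:19:06.796",
    "2015-08-13 15:19:06.796",
    "2015-08-14 15:19:06.796",
    "2015-08-15 15:19:06.796",
    "2015-08-16 15:19:06.796",
]

# Python equivalents of the Splunk TIME_FORMAT values (assumption: %f ~ %3Q).
AS_CONFIGURED = "%Y-%d-%m %H:%M:%S.%f"   # day before month, as in the stanza
YEAR_MONTH_DAY = "%Y-%m-%d %H:%M:%S.%f"  # month before day


def try_parse(value: str, fmt: str) -> Optional[datetime]:
    """Return the parsed datetime, or None if value does not match fmt."""
    try:
        return datetime.strptime(value, fmt)
    except ValueError:
        return None


if __name__ == "__main__":
    for value in CREATED:
        print(value, try_parse(value, AS_CONFIGURED), try_parse(value, YEAR_MONTH_DAY))
```

With the configured order, only the first value parses, and it comes out as 8 December 2015; the others fail because 13 through 16 are not valid months. If that reading of the data is right, TIME_FORMAT = %Y-%m-%d %H:%M:%S.%3Q would appear to match the sample.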

Screenshots

- You are not using the sourcetype the add-on expects; the link you provided recommends extending the existing sourcetype.

- You are using INDEXED_EXTRACTIONS, which is an index-time setting, so once your data is indexed there is nothing you can do to fix it; you would need to re-ingest it. Before you do that, try setting your sourcetype to aws:cloudwatch.

@rmeynov I am aware the data must be re-indexed. For each of these tests, I am creating new inputs and indices.

Two inputs can be configured in this AWS app: "CloudWatch" and "CloudWatch Logs".

The CloudWatch input defaults to a template with sourcetype = "aws:cloudwatch". It is for pulling metrics from CloudWatch, not log data. Try it in the app's UI and you will see what I mean.

The CloudWatch Logs input defaults to a template with sourcetype = "aws:cloudwatchlogs:vpcflow".

I change this to "aws:cloudwatchlogs" per the instructions (see the reference link below), and I still get a trailing ">" after each event.

Please try it yourself. I am being told that it works, but I can post evidence of it not working. I will do so in another "Answer", since I can't add a picture here.

Reference Instructions Here:

http://docs.splunk.com/Documentation/AddOns/released/AWS/CloudWatchLogs

To test this, I need a sample of the file you are using, not a paste. Email mreynov [at] splunk.com