- Apps and Add-ons

- :

- All Apps and Add-ons

- :

- Re: Sending logs over scp to heavy forwarder, why ...

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark Topic

- Subscribe to Topic

- Mute Topic

- Printer Friendly Page

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I'm using heavy forwarder to take logs in from a cloud ESA appliance. The logs sending over every 5 minutes via scp (deleting old files every 2 hours after modtime stops) work fine, line by line and time stamps all good. For some reason splunk is randomly ingesting some events by grabbing from a random place in the file, giving it a time stamp, and calling it an event.

For example, I most commonly get 1 - 3 characters, like ile, or id. Sometimes I get the middle to the end of an event. I don't understand why it's doing this, there is no line breaking (it's disabled) and I have enabled crcsalt by source.

I'm using splunk 6.4.6 on the indexers, and 6.6.1 on the heavy forwarder (started with 6.4.6). If I upload the file to my dev box, it's fine; for some reason the monitor feature of splunk is having issues. I also ingest WSA (but with universal forwarder) over scp, and I don't have these issues.

I have put the ESA app on the heavy forwader and search heads. Also tried just indexers and search heads, only input on heavy forwarder. None this changed anything.

This is all virtualized Linux, Ubuntu 64bit LTS servers.

props.conf:

CHARSET = utf-8

MAX_TIMESTAMP_LOOKAHEAD = 24

NO_BINARY_CHECK = true

SHOULD_LINEMERGE = false

TRUNCATE = 250000

TIME_PREFIX = \w{3}\s

TRANSFORMS-drop=esa_header_drop

transforms.conf

[esa_header_drop]

REGEX=^.*Info:\s(Begin\sLogfile|Logfile\srolled\sover|End\sLogfile|Version\:\s|Time\soffset\sfrom\sUTC\:).*

DEST_KEY=queue

FORMAT=nullQueue

I log amp, mail, gui, auth, http, via scp. They all have the props above, individually configured.

Sample event (good and bad) from one, full auth file:

le

Tue Jun 6 15:12:22 2017 Info: A publickey authentication attempt by the user ***** from 0.0.0.0 failed using an SSH connection.

Tue Jun 6 15:10:40 2017 Info: logout:10.0.0.1 user:- session:blahgfregre

Tue Jun 6 15:09:28 2017 Info: logout:10.0.0.25 user:- blaggj4iogjio3

Tue Jun 6 15:09:20 2017 Info: A publickey authentication attempt by the user ***** from 0.0.0.0 failed using an SSH connection.

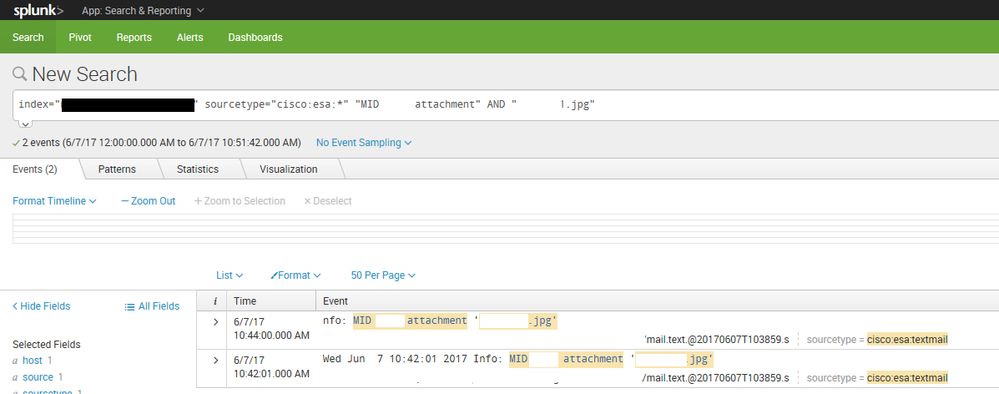

Below is a screenshot of search finding one event, that gets mangled and duplicated (using the suggestion break before regex). Checking the file, there is only one event on one line, no idea why splunk is doing this.

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Upgrading to 6.6.2 and it now works fine (testing for 48 hours anyway).

EDIT: To clarify, I only updated the heavy forwarder to 6.6.2, and this appears to have fixed it.

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thanks, I didn't think of hpn patches for splunk, but I've used them before for other large transfers. I'll look at giving that, and the configuration suggestions you mentioned a shot for sure.

Yeah, it's a cloud appliance, so no dice sending that stuff in the clear. I use TLS for syslog-ng receiver when I can, but, Cisco doesn't support it for sending from their appliances. It's pretty well wrapped up as an appliance, otherwise I'd say TLS netcat would be worth a go to.

I understand the ssh performance side, but again, elasticsearch beats handling it without issue really points me back to splunk as being the problem. However, with the WSA, that is on prem (also doing scp - because of syslog message length limitations) and it doesn't have these issues. So, it's possible splunk just doesn't like how long it takes to fully copy the file via scp.

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

i'm not sure this is a valid comparison at all. You have already stated that other sources have no issue. Please do follow up with support (i have a similar ticket open) but logging live files heavily depends on the process writing the logs. I know we love to call all unknowns "bugs" round here, but lets see if we can get some better proof.

also, are u saying that elastic is monitoring this exact same file??

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Sure thing. It's happening on both indexers, and the index time is always a few seconds after the original, full event. So, to splunk, it gets queued up as an event without a timestamp. This shows up in splunkd.log, so it gives it a later timestamp based off of the last event because it couldn't find a timestamp. Hopefully that answers your question.

I agree, I'll work on a script to send these over to another folder after modtime hits a certain point. Sounds like a reasonable temporary workaround until a solution is found.

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

One thing I can think of is that the files come in slowly and Splunk breaks too early, therefore you get garbage lines. Have a look at the docs http://docs.splunk.com/Documentation/Splunk/latest/Admin/Inputsconf of inputs.conf for the options

time_before_close = <integer>

* Modification time delta required before the file monitor can close a file on

EOF.

* Tells the system not to close files that have been updated in past <integer>

seconds.

* Defaults to 3.

multiline_event_extra_waittime = [true|false]

* By default, the file monitor sends an event delimiter when:

* It reaches EOF of a file it monitors and

* Ihe last character it reads is a newline.

* In some cases, it takes time for all lines of a multiple-line event to

arrive.

* Set to true to delay sending an event delimiter until the time that the

file monitor closes the file, as defined by the 'time_before_close' setting,

to allow all event lines to arrive.

* Defaults to false.

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I've tried time_before_close = 120 which didn't do anything.

I'll try the multiline option, however all events are one line and I have line merge disabled (as it should be; all events are one line).

I turned on time before close and added the multi-line option, I'll see what happens.

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Nope, still happening.

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

can you post the props.conf settings for the used sourcetype and some sample events of good and bad events?

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Changed in edit above.

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Why do you say there is no line breaking? The default line breaking is still happening.

Can you please add the raw event for one or two bad examples? There must be something triggering the line break or truncate ....

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I threw in one file parsed by splunk as an example. Top entry is bad (also have unable to parse timestamp error in splunkd.log as well).

When I say no line breaking, all events are always one line, splunk is doing the line breaking (why, I don't know, that's why I'm here). Support is scratching their heads on this so far too.