- Is there any way to speed up eStreamer eNcore data...

Hi,

We are running the eStreamer eNcore app with default settings, but it looks like it's not able to catch up with the FMC. Currently we are receiving data with a timestamp of Aug 7th 7:53 PM. We do see the timestamp moving forward, but it's extremely slow.

I tried changing the monitor.period setting in estreamer.conf to 30 seconds, and even tried 1 second just to see what happens, but eNcore log files are still generated at an interval of 120 seconds, which is the default setting the app came with. Splunk was restarted after the change, but the new setting doesn't seem to take effect.

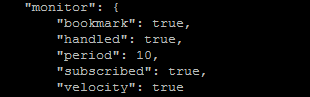

Period changed to 10 seconds:

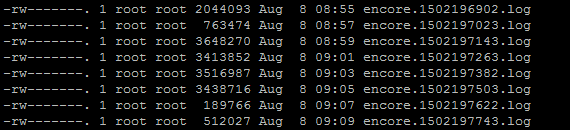

Log monitoring frequency still @ 2 minutes:

Has anyone been able to successfully modify the default settings for this app?

Thanks,

~ Abhi

There is no way to speed up eStreamer eNcore through configuration today.

Your options are:

- Limit the events being sent by the server (e.g. turn off connections)

- Increase CPU power (if you're running in a VM then give at least 2 cores)

- Ensure a good network connection to the FMC

- Ensure you have good output disk performance

A few things worth noting:

- monitor.period refers to the frequency with which the monitor thread asks encore how it's doing. You will see the results of this in sourcetype=cisco:estreamer:log. It has nothing to do with performance. In fact the more frequently you ask, the slower encore will be. I would recommend you keep this at 120 seconds.

- The difference in file timestamp has nothing to do with monitor.period. Each file is written to until it has 10,000 lines in it (configurable, although not in the Splunk UI). What we're seeing in your screenshot is that on average you're writing about 10000 events per 2 minutes - which is 83 events per second.

- I have managed to get 350 events/sec using just one core of a Celeron CPU on a VM (and around 1200 using an i7 with SSD). At 83ev/s I don't think encore will break a sweat.
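To spell out the arithmetic behind the 83 events per second figure, here is a minimal Python sketch. It assumes one event per line and that a new file appearing every 120 seconds means a full 10,000-line file was completed in that time. The function name is made up for illustration and is not part of encore.

```python
def events_per_second(lines_per_file: int, seconds_between_files: float) -> float:
    """Average event rate implied by how often a full output file is completed.

    Assumes one event per line (an assumption, not something encore reports).
    """
    if seconds_between_files <= 0:
        raise ValueError("seconds_between_files must be positive")
    return lines_per_file / seconds_between_files


# 10,000 lines per file, one new file roughly every 2 minutes
rate = events_per_second(10_000, 120)
print(f"{rate:.1f} events/sec")
```

You can plug in your own file interval to compare against the 350 and 1200 events/sec figures above.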

The question is: if encore is not catching up... is it falling behind? If it's not falling behind then I am willing to bet that the FMC is just delayed in its reporting from sensors. If it is falling behind then it's definitely worth further investigation to find out where the bottleneck is.
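One rough way to answer the "is it falling behind?" question is to note the newest event timestamp at two or more points in time and see whether the gap to the wall clock is growing. The Python sketch below does only that comparison. The function names, the 60 second tolerance and the sample values (apart from the Aug 7th 7:53 PM timestamp from the question) are made up for illustration; how you collect the samples is up to you.

```python
from datetime import datetime


def lag_change_seconds(samples):
    """Change in lag between the first and last sample, in seconds.

    samples: list of (wall_clock, newest_event_time) datetime pairs, oldest first.
    A positive result means the lag grew over the sampling window.
    """
    if len(samples) < 2:
        raise ValueError("need at least two samples")
    first_lag = (samples[0][0] - samples[0][1]).total_seconds()
    last_lag = (samples[-1][0] - samples[-1][1]).total_seconds()
    return last_lag - first_lag


def describe(samples, tolerance_s=60):
    """Classify the trend; tolerance_s is an arbitrary illustrative threshold."""
    delta = lag_change_seconds(samples)
    if delta > tolerance_s:
        return "falling behind"
    if delta < -tolerance_s:
        return "catching up"
    return "holding steady"


# Illustrative samples: one hour of wall clock, only 12 minutes of event time
samples = [
    (datetime(2017, 8, 10, 10, 0), datetime(2017, 8, 7, 19, 53)),
    (datetime(2017, 8, 10, 11, 0), datetime(2017, 8, 7, 20, 5)),
]
print(describe(samples))
```

If the result is "holding steady" over a long window, that points towards the FMC being delayed rather than encore being slow.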

EDIT:

After running the Splunk query: sourcetype="cisco:estreamer:log" (ERROR OR WARNING) there was an error like:

2017-08-10 11:51:30,783 estreamer.subscriber ERROR EncoreException: The server returned an invalid version (0) - expected 1. This is likely to be caused by CSCve44987 which is present on both FMC 6.1.0.3 and 6.2.0.1. For more information see: https://bst.cloudapps.cisco.com/bugsearch/bug/CSCve44987. Message={'messageType': 0, 'length': 0, 'version': 0}\nTraceback (most recent call last):\n File "/opt/splunk/etc/apps/TA-eStreamer/bin/encore/estreamer/subscriber.py", line 219, in start\n self.__tryHandleNextResponse()\n File "/opt/splunk/etc/apps/TA-eStreamer/bin/encore/estreamer/subscriber.py", line 158, in __tryHandleNextResponse\n message = self.connection.response()\n File "/opt/splunk/etc/apps/TA-eStreamer/bin/encore/estreamer/connection.py", line 203, in response\n raise estreamer.EncoreException( errMsg.format( version, str(message) ))\nEncoreException: The server returned an invalid version (0) - expected 1. This is likely to be caused by CSCve44987 which is present on both FMC 6.1.0.3 and 6.2.0.1. For more information see: https://bst.cloudapps.cisco.com/bugsearch/bug/CSCve44987. Message={'messageType': 0, 'length': 0, 'version': 0}\n

The important bit: EncoreException: The server returned an invalid version (0) - expected 1. This is likely to be caused by CSCve44987 which is present on both FMC 6.1.0.3 and 6.2.0.1. For more information see: https://bst.cloudapps.cisco.com/bugsearch/bug/CSCve44987

This is a known FMC bug affecting all eStreamer clients: eStreamer keeps crashing and encore keeps reconnecting and stumbling on as best it can. It's probably running only a few seconds out of every 2 minutes and is therefore very slow on average. TAC should be able to sort you out with the correct patch if you pass them the quoted exception.
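If you have a lot of these log lines and want to pull out the bug IDs before contacting TAC, a small Python sketch like the one below may help. It assumes bug IDs look like the one quoted above (CSC, two lowercase letters, five digits); the function name is made up for illustration and is not part of encore or Splunk.

```python
import re

# Assumed pattern, based on the ID in the quoted exception (CSCve44987)
BUG_ID = re.compile(r"\bCSC[a-z]{2}\d{5}\b")


def bug_ids(log_text: str) -> list:
    """Return the distinct bug IDs mentioned in a block of log text, sorted."""
    return sorted(set(BUG_ID.findall(log_text)))


line = (
    "2017-08-10 11:51:30,783 estreamer.subscriber ERROR EncoreException: "
    "The server returned an invalid version (0) - expected 1. This is likely "
    "to be caused by CSCve44987 which is present on both FMC 6.1.0.3 and 6.2.0.1. "
    "For more information see: https://bst.cloudapps.cisco.com/bugsearch/bug/CSCve44987."
)
print(bug_ids(line))
```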